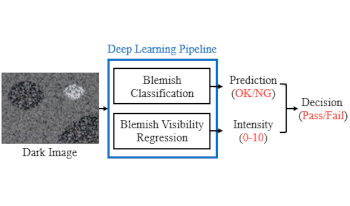

The quality of low-light pictures is becoming a competitive edge in mobile phones. To ensure this quality, it is increasingly necessary to screen out, in advance, the dark defects that cause abnormalities in dark photos, especially dark blemishes. However, separating dark blemish patterns requires substantial manpower because the existing scoring method has low consistency. This paper proposes a novel deep learning-based screening method to solve this problem. The proposed pipeline uses two ResNet-D models with different depths to perform classification and visibility regression, respectively. It then derives a new score that combines the outputs of both models. In addition, we collect a large-scale image set from real manufacturing processes to train the models and configure the dataset with two types of label systems, one suited to each model. Experimental results show the performance of the deep learning models trained and validated with the presented datasets. Our classification model significantly improves screening performance in terms of accuracy and F1-score compared to the conventional handcrafted method. Also, the visibility regression method shows a high Pearson correlation coefficient with 30 expert engineers, and the inference output of our regression model is consistent with it.
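
The Pearson correlation coefficient used above to compare the regression output with expert engineers can be computed as in the following minimal sketch. It is not the authors' code: the function name `pearson_r` and the sample values are hypothetical and only illustrate the metric.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, left unnormalized because the factors cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: averaged expert visibility scores vs. model predictions.
expert_mean = [1.0, 2.5, 3.0, 4.2, 4.8]
model_pred = [1.2, 2.4, 3.3, 4.0, 4.9]
r = pearson_r(expert_mean, model_pred)
print(f"Pearson r = {r:.3f}")
```

A value close to 1 indicates that the predictions rise and fall together with the expert scores, which is the sense in which the two are described as consistent.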

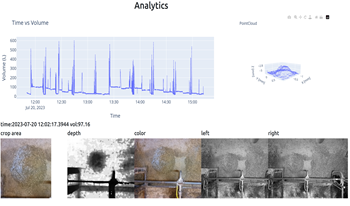

Accurate measurement of daily feed consumption for dairy cattle is important for determining animal health and feed efficiency. Traditionally, manual measurements or average feed consumption for groups of animals have been employed, which leads to human error and inconsistent measurements for individual animals. Therefore, we developed a scalable, non-invasive analytics system that leverages depth information derived from stereo cameras to consistently measure feed offered and report findings throughout the day. A top-down array of cameras faces the available feed, measures feed depth, projects depth to a 3-dimensional (3D) mesh, and finally estimates feed volume from the 3D projection. Our successful experiments at the Purdue University Dairy, which houses 230 cows, demonstrate the system's robustness and scalability for larger operations. The system holds significant potential for optimizing feed management in dairy farms, thereby improving animal health and sustainability in the dairy industry.
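
The final step, going from depth to volume, can be illustrated with a simplified sketch. The abstract describes projecting depth to a 3D mesh; the code below swaps in a plainer per-pixel height integration. It assumes a camera looking straight down, a known camera-to-floor distance, and a known ground area per pixel. The names `feed_volume`, `floor_depth`, and `pixel_area` are hypothetical.

```python
def feed_volume(depth_map, floor_depth, pixel_area):
    """Approximate volume by summing per-pixel feed height times pixel area.

    depth_map   : 2D list of camera-to-surface distances (metres)
    floor_depth : camera-to-empty-floor distance (metres), assumed known
    pixel_area  : ground area covered by one pixel (square metres), assumed known
    """
    volume = 0.0
    for row in depth_map:
        for depth in row:
            # Feed height above the floor; clamp readings that fall below it.
            height = max(floor_depth - depth, 0.0)
            volume += height * pixel_area
    return volume

# Hypothetical 3x3 depth map in metres.
depth_map = [
    [2.0, 1.9, 1.9],
    [1.8, 1.7, 1.9],
    [2.0, 2.0, 2.1],
]
volume_m3 = feed_volume(depth_map, floor_depth=2.0, pixel_area=0.01)
print(f"estimated feed volume: {volume_m3:.4f} m^3")
```

A mesh-based estimate, as in the described system, would handle perspective and non-uniform pixel footprints, which this sketch ignores.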

Speech emotions (SEs) are an essential component of human interactions and an efficient way of influencing human behavior. The recognition of emotions from speech is an emerging but challenging area of digital signal processing (DSP). Healthcare professionals are always looking for better ways to understand patient voices for improved diagnosis and treatment. Speech emotion recognition (SER) from the human voice, particularly in a person with a neurological disorder such as Parkinson's disease (PD), can expedite the diagnostic process. Patients with PD are primarily diagnosed through expensive tests and continuous monitoring, which is time-consuming and costly. This research aims to develop a system that can accurately identify common SEs that are important for PD patients, such as anger, happiness, normal, and sadness. We propose a novel lightweight deep model to predict common SEs. The adaptive wavelet thresholding method is employed for pre-processing the audio data. Furthermore, we generate spectrograms from the speech data instead of directly processing voice data, in order to extract more discriminative features. The proposed method is trained on spectrograms generated from the IEMOCAP dataset. The model contains convolution layers for learning discriminative features from the spectrograms. The performance of the proposed framework is evaluated on standard performance metrics, which show promising real-time results for PD patients.

As facial authentication systems become an increasingly advantageous technology, their subtle inaccuracies for certain subgroups grow in importance. As researchers perform data augmentation to increase subgroup accuracies, it is critical that the data augmentation approaches are understood. We specifically research the impact that racial transformation, a data augmentation method, has on the identity of the individual as perceived by a facial authentication network. This reveals whether racial transformation preserves the critical aspects of an individual's identity or whether it creates the equivalent of an entirely new individual for networks to train on. We present our method for racial transformation, which builds on methods from other leading research articles, display the embedding distance distribution of augmented faces compared with that of non-augmented faces, and explain to what extent racial transformation preserves critical aspects of an individual's identity.
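
The embedding-distance comparison can be sketched as follows. The abstract does not state which distance or threshold is used, so cosine distance, the function names, the example vectors, and the threshold value are all assumptions made for illustration.

```python
import math

def cosine_distance(a, b):
    """Cosine distance (1 - cosine similarity) between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero-length embedding")
    return 1.0 - dot / (norm_a * norm_b)

def same_identity(original, augmented, threshold):
    """True if the augmented face stays within the match threshold (hypothetical rule)."""
    return cosine_distance(original, augmented) <= threshold

# Hypothetical 4-dimensional embeddings; real networks use far more dimensions.
original = [0.9, 0.1, 0.3, 0.2]
augmented = [0.8, 0.2, 0.35, 0.15]
distance = cosine_distance(original, augmented)
print(f"distance = {distance:.4f}, match = {same_identity(original, augmented, 0.1)}")
```

Collecting this distance over many original/augmented pairs, and over pairs of distinct non-augmented identities, gives the two distributions that the abstract compares.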

Accurate diagnosis of microcalcification (MC) lesions in mammograms as benign or malignant is a challenging clinical task. In this study we investigate the potential discriminative power of deep learning features in MC lesion diagnosis. We consider two types of deep learning networks, of which one is a convolutional neural network developed for MC detection and the other is a denoising autoencoder network. In the experiments, we evaluated both the separability between malignant and benign lesions and the classification performance of image features from these two networks using Fisher's linear discriminant analysis on a set of mammographic images. The results demonstrate that the deep learning features from the MC detection network are most discriminative for classification of MC lesions when compared to both features from the autoencoder network and traditional handcrafted texture features.
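
Separability between two classes in the Fisher sense can be illustrated with a single-feature simplification: the squared distance between class means divided by the total within-class scatter. This is only a one-dimensional sketch of the criterion behind Fisher's linear discriminant analysis, not the multivariate analysis used in the study. The function name and feature values are hypothetical.

```python
def fisher_ratio(class_a, class_b):
    """One-dimensional Fisher criterion: (mean gap)^2 / within-class scatter."""
    if not class_a or not class_b:
        raise ValueError("both classes need at least one sample")
    mean_a = sum(class_a) / len(class_a)
    mean_b = sum(class_b) / len(class_b)
    # Within-class scatter: summed squared deviations from each class mean.
    scatter_a = sum((x - mean_a) ** 2 for x in class_a)
    scatter_b = sum((x - mean_b) ** 2 for x in class_b)
    within = scatter_a + scatter_b
    if within == 0:
        raise ValueError("within-class scatter is zero")
    return (mean_a - mean_b) ** 2 / within

# Hypothetical values of one feature for benign and malignant lesions.
benign = [1.0, 2.0, 3.0]
malignant = [4.0, 5.0, 6.0]
print(fisher_ratio(benign, malignant))
```

A larger ratio means the feature separates the two classes more cleanly; features can be ranked by this value before a classifier is trained.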

Classification of degraded images is very important in practice because images are usually degraded by compression, noise, blurring, etc. Nevertheless, most research in image classification focuses only on clean images without any degradation. Some papers have already proposed deep convolutional neural networks composed of an image restoration network and a classification network to classify degraded images. This paper proposes an alternative approach in which we use a degraded image and an additional degradation parameter for classification. The proposed classification network has two inputs: the degraded image and the degradation parameter. An estimation network for the degradation parameters is also incorporated for cases where the degradation parameters of the degraded images are unknown. The experimental results show that the proposed method outperforms a straightforward approach in which the classification network is trained with degraded images only.

In this paper, we propose a patch-based system to classify non-small cell lung cancer (NSCLC) diagnostic whole slide images (WSIs) into two major histopathological subtypes: adenocarcinoma (LUAD) and squamous cell carcinoma (LUSC). Classifying patients accurately is important for prognosis and therapy decisions. The proposed system was trained and tested on 876 subtyped NSCLC gigapixel-resolution diagnostic WSIs from 805 patients – 664 in the training set and 141 in the test set. The algorithm has modules for: 1) auto-generated tumor/non-tumor masking using a trained residual neural network (ResNet34), 2) cell-density map generation (based on color deconvolution, local drain segmentation, and watershed transformation), 3) patch-level feature extraction using a pre-trained ResNet34, 4) a tower of linear SVMs for different cell ranges, and 5) a majority voting module for aggregating subtype predictions in unseen testing WSIs. The proposed system was trained and tested on several WSI magnifications ranging from x4 to x40 with a best ROC AUC of 0.95 and an accuracy of 0.86 in test samples. This fully-automated histopathology subtyping method outperforms similar published state-of-the-art methods for diagnostic WSIs.
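
The majority voting module in step 5 can be sketched as a simple count over subtype predictions. This is an illustrative assumption about how such aggregation might look, not the authors' implementation. The function name `slide_label` and the example predictions are hypothetical.

```python
from collections import Counter

def slide_label(predictions):
    """Aggregate per-patch (or per-classifier) subtype votes into one slide label."""
    if not predictions:
        raise ValueError("no predictions to aggregate")
    counts = Counter(predictions)
    # most_common(1) returns the label with the highest count; on a tie,
    # the label that was encountered first wins.
    label, _ = counts.most_common(1)[0]
    return label

# Hypothetical votes for one whole slide image.
votes = ["LUAD", "LUSC", "LUAD", "LUAD", "LUSC"]
print(slide_label(votes))
```

In practice a tie-breaking rule or a confidence-weighted vote may be preferable; the first-seen tie-break here is only a by-product of `Counter`.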

Overweight vehicles are a common source of pavement and bridge damage. Mobile crane vehicles in particular often exceed legal per-axle weight limits because they carry their lifting blocks and ballast on the vehicle instead of on a separate trailer. To prevent road deterioration, the detection of overweight cranes is desirable for law enforcement. As the source of crane weight is visible, we propose a camera-based detection system based on convolutional neural networks. We iteratively label our dataset to vastly reduce labeling effort, and we extensively investigate the impact of image resolution, network depth, and dataset size to choose optimal parameters during iterative labeling. We show that iterative labeling with intelligently chosen image resolutions and network depths can vastly improve (up to 70×) the speed at which data can be labeled to train classification systems for practical surveillance applications. The experiments provide an estimate of the optimal amount of data required to train an effective classification system, which is valuable for classification problems in general. The proposed system achieves an AUC of 0.985 for distinguishing cranes from other vehicles, and AUCs of 0.92 and 0.77 for lifting block and ballast classification, respectively. The proposed classification system enables effective road monitoring for semi-automatic law enforcement and is attractive for rare-class extraction in general surveillance classification problems.
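
The AUC figures quoted above measure how often a classifier scores a positive example higher than a negative one. A minimal sketch of that pairwise definition follows. The function name `roc_auc` and the sample labels and scores are hypothetical, and the quadratic loop is chosen for clarity rather than speed.

```python
def roc_auc(labels, scores):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels : sequence of 0/1 ground-truth labels
    scores : sequence of classifier scores, higher meaning more likely positive
    """
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # correctly ranked pair
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))

# Hypothetical example: 1 = crane, 0 = other vehicle.
labels = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.5, 0.1]
print(roc_auc(labels, scores))
```

Because AUC depends only on ranking, it remains informative for rare classes such as cranes, where plain accuracy is dominated by the majority class.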

Cosmologists face the problem of analyzing a huge quantity of data when observing the sky. The methods used in cosmology mostly rely on astrophysical models; for classification, they therefore usually use a two-step machine learning approach that first extracts features and then applies a classifier. In this paper, we specifically study supernovae, and in particular the binary classification “Type Ia supernovae versus non-Type Ia supernovae”. We present two Convolutional Neural Networks (CNNs) that outperform the current state of the art. The first is adapted to time series and thus to the processing of supernova light curves. The second is based on a Siamese CNN and is suited to the nature of the data, i.e., their sparsity and small quantity (small training database).

Recently, the use of neural networks for image classification has become widespread. Thanks to the availability of increased computational power, better-performing architectures, such as deep neural networks, have been designed. In this work, we propose a novel image representation framework exploiting the Deep p-Fibonacci scattering network. The architecture is based on structured p-Fibonacci scattering over graph data. This approach provides good classification accuracy while reducing computational complexity. Experimental results demonstrate that the performance of the proposed method is comparable to state-of-the-art unsupervised methods while being computationally more efficient.