Artificial intelligence (AI) contributes significantly to the development of autonomous vehicles. This paper outlines techniques and algorithms for implementing intelligent autonomous vehicles (IAV) that use AI for traffic perception, decision-making, and control, covering merging-traffic scenario detection, traffic lane detection, semantic segmentation, pedestrian detection, and traffic sign classification and detection. Modern computer vision and deep neural network-based algorithms enable real-time analysis of diverse vehicle data. In vehicle control systems, AI is used to model vehicle dynamics so that safety and efficiency are optimized over time. The paper also discusses challenges and possible future directions, and underscores the potential of AI to drive autonomous vehicles towards safer, more reliable, and more intelligent transportation systems. Combining AI with autonomous vehicles points to a future in which mobility is intelligent, sustainable, and accessible.

Anomalous behavior detection is a challenging research area within computer vision. One such behavior is throwing action in traffic flow, which is one of the unique requirements of our Smart City project to enhance public safety. This paper proposes a solution for throwing action detection in surveillance videos using deep learning. At present, datasets for throwing actions are not publicly available. To address the use-case of our Smart City project, we first generate the novel public 'Throwing Action' dataset, consisting of 271 videos of throwing actions performed by traffic participants, such as pedestrians, bicyclists, and car drivers, and 130 normal videos without throwing actions. Second, we compare the performance of different feature extractors for our anomaly detection method on the UCF-Crime and Throwing-Action datasets. Finally, we improve the performance of the anomaly detection algorithm by applying the Adam optimizer instead of Adadelta, and we propose a mean normal loss function that yields better anomaly detection performance. The experimental results reach an area under the ROC curve of 86.10 for the Throwing-Action dataset, and 80.13 on the combined UCF-Crime+Throwing dataset, respectively.
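
The reported metric, the area under the ROC curve, can be computed directly from per-video anomaly scores. The following is a minimal sketch using the rank-statistic form of the AUC; the function name `roc_auc` and the toy scores are illustrative assumptions, not taken from the paper. The result is a fraction in [0, 1], so a reported value such as 86.10 would correspond to 0.8610.

```python
import numpy as np

def roc_auc(scores, labels):
    """AUC as the probability that a random anomalous sample scores higher
    than a random normal sample (ties count as one half)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)   # True = anomalous (e.g. throwing)
    pos = scores[labels]
    neg = scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one anomalous and one normal sample")
    diff = pos[:, None] - neg[None, :]        # all anomalous/normal pairs
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return wins / (pos.size * neg.size)

# Hypothetical scores: two anomalous videos, two normal videos.
print(roc_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]))
```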

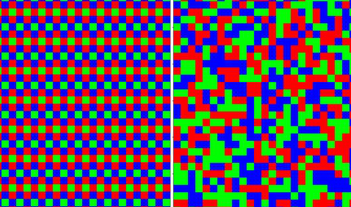

A convolutional neural network is trained in auto/hetero-associative mode for reconstructing RGB components from a randomly mosaicked color image. The trained network was shown to perform equally well when images are sampled periodically or with a different random mosaic. Therefore, this model is able to generalize on every type of color filter array. We attribute this property of universal demosaicking to the network learning the statistical structure of color images independently of the mosaic pattern arrangement.
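
To make the input side of this setup concrete, the sketch below builds a randomly mosaicked image and, for comparison, a periodic mask. Sampling each pixel's channel uniformly at random and using an RGGB layout for the periodic case are assumptions made here for illustration; the abstract does not specify either.

```python
import numpy as np

def random_mosaic(rgb, rng):
    """Keep one randomly chosen colour channel per pixel.
    Returns (mosaic HxW, mask HxWx3 one-hot)."""
    h, w, c = rgb.shape
    if c != 3:
        raise ValueError("expected an HxWx3 image")
    channel = rng.integers(0, 3, size=(h, w))       # assumed uniform sampling
    mask = np.eye(3, dtype=rgb.dtype)[channel]      # one-hot mask, HxWx3
    mosaic = (rgb * mask).sum(axis=-1)
    return mosaic, mask

def bayer_mask(h, w):
    """Periodic RGGB mask (assumed layout), same format as the random mask."""
    mask = np.zeros((h, w, 3))
    mask[0::2, 0::2, 0] = 1   # red
    mask[0::2, 1::2, 1] = 1   # green
    mask[1::2, 0::2, 1] = 1   # green
    mask[1::2, 1::2, 2] = 1   # blue
    return mask

rng = np.random.default_rng(0)
image = rng.random((4, 6, 3))
mosaic, mask = random_mosaic(image, rng)
```

Because both masks share the same one-hot format, a network trained on `random_mosaic` output can be evaluated on a periodic pattern simply by swapping the mask.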

In this paper, we propose a novel and standardized approach to the problem of camera-quality assessment on portrait scenes. Our goal is to evaluate the capacity of smartphone front cameras to preserve texture details on faces. We introduce a new portrait setup and an automated texture measurement. The setup includes two custom-built lifelike mannequin heads, shot in a controlled lab environment. The automated texture measurement includes a region-of-interest (ROI) detection and a deep neural network. To this end, we create a realistic mannequin database, which contains images from different cameras, shot in several lighting conditions. The ground truth is based on a novel pairwise comparison technology in which the scores are expressed in just-noticeable differences (JND). In terms of methodology, we propose a multi-scale CNN architecture with random crop augmentation, to mitigate overfitting and to extract low-level features. We validate our approach by comparing its performance with several baselines inspired by the image quality assessment (IQA) literature.
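
Random crop augmentation can be sketched as follows. This is a minimal illustration that assumes the input is the already detected ROI and that crop positions are sampled uniformly; the crop size and count are hypothetical values, not the paper's settings.

```python
import numpy as np

def random_crops(image, crop_size, n_crops, rng):
    """Sample n_crops patches of size crop_size = (height, width) at
    uniformly random positions inside the image."""
    h, w = image.shape[:2]
    ch, cw = crop_size
    if ch > h or cw > w:
        raise ValueError("crop is larger than the image")
    tops = rng.integers(0, h - ch + 1, size=n_crops)
    lefts = rng.integers(0, w - cw + 1, size=n_crops)
    return np.stack([image[t:t + ch, l:l + cw] for t, l in zip(tops, lefts)])

rng = np.random.default_rng(0)
roi = rng.random((64, 48, 3))                 # stand-in for a detected face ROI
patches = random_crops(roi, (32, 32), 8, rng)
print(patches.shape)                          # (8, 32, 32, 3)
```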

360-degree image quality assessment (IQA) faces the major challenge of a lack of ground-truth databases. This problem is accentuated for deep learning-based approaches, whose performance is only as good as the available data. In this context, only two databases are used to train and validate deep learning-based IQA models. To compensate for this lack, a data-augmentation technique is investigated in this paper. We use visual scan-paths to increase the number of learning examples drawn from existing training data. Multiple scan-paths are predicted to account for the diversity of human observers. These scan-paths are then used to select viewports from the spherical representation. Training with the data-augmentation scheme showed an improvement over training without it. We also address the question of whether to use the mean opinion score (MOS) obtained for the 360-degree image as the quality anchor for the whole set of extracted viewports, rather than 2D blind quality metrics. The comparison showed the superiority of using the MOS when adopting patch-based learning.
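
The viewport selection and labelling steps can be sketched as below. This assumes an equirectangular image and fixations given as longitude and latitude in degrees; both function names are hypothetical, and the actual viewport rendering (projection from the sphere) is left out.

```python
import numpy as np

def fixations_to_pixels(lon_deg, lat_deg, width, height):
    """Map scan-path fixations (longitude in [-180, 180), latitude in
    [-90, 90]) to (col, row) centres on an equirectangular image."""
    lon = np.asarray(lon_deg, dtype=float)
    lat = np.asarray(lat_deg, dtype=float)
    col = ((lon + 180.0) / 360.0 * width).astype(int) % width      # wraps around
    row = np.clip(((90.0 - lat) / 180.0 * height).astype(int), 0, height - 1)
    return np.stack([col, row], axis=-1)

def label_viewports(scanpaths, mos):
    """Every viewport centre inherits the MOS of its source image."""
    return [(tuple(int(v) for v in centre), mos)
            for path in scanpaths for centre in path]

# Two hypothetical predicted scan-paths for one image with MOS 3.5.
paths = [fixations_to_pixels([0, 40], [0, 10], 360, 180),
         fixations_to_pixels([-90], [-20], 360, 180)]
print(label_viewports(paths, 3.5))
```

Using several scan-paths per image multiplies the number of training viewports while every viewport keeps the same quality label.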

The aim of colour constancy is to discount the effect of the scene illumination from the image colours and restore the colours of the objects as captured under a ‘white’ illuminant. For the majority of colour constancy methods, the first step is to estimate the scene illuminant colour. Generally, it is assumed that the illumination is uniform in the scene. However, real-world scenes often have multiple illuminants, such as sunlight and spot lights together in one scene. We present in this paper a simple yet very effective framework using a deep CNN-based method to estimate and use multiple illuminants for colour constancy. Our approach works well in both the multi- and single-illuminant cases. The output of the CNN method is a region-wise estimate map of the scene illuminant, which is smoothed and divided out from the image to perform colour constancy. The proposed method outperforms other recent and state-of-the-art methods and has promising visual results.
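
The final correction step, smoothing the estimate map and dividing it out, can be sketched as follows. The box filter, the per-pixel normalisation of the illuminant, and the function names are assumptions chosen for illustration; the abstract does not state which smoothing or normalisation is used.

```python
import numpy as np

def box_smooth(est_map, k):
    """Smooth an HxWx3 map with a k x k mean filter (k odd, edge-padded)."""
    pad = k // 2
    padded = np.pad(est_map, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = est_map.shape[:2]
    out = np.zeros(est_map.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def correct_colours(image, illum_map, k=5, eps=1e-6):
    """Divide a smoothed per-pixel illuminant estimate out of the image."""
    smooth = box_smooth(illum_map, k)
    # Scale each pixel's illuminant to unit mean so that a white light
    # maps to (1, 1, 1) and overall brightness is preserved.
    smooth = smooth / (smooth.mean(axis=-1, keepdims=True) + eps)
    return image / (smooth + eps)

# Grey scene lit by a reddish light on the left and a bluish light on the right.
reflectance = np.full((4, 6, 3), 0.5)
illum = np.empty((4, 6, 3))
illum[:, :3] = [1.5, 1.0, 0.5]
illum[:, 3:] = [0.5, 1.0, 1.5]
corrected = correct_colours(reflectance * illum, illum, k=1)
```

With a perfect illuminant map and no smoothing (`k=1`), the corrected image recovers the grey reflectance; larger `k` trades sharp illuminant boundaries for robustness to noisy estimates.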

Vehicle re-identification (re-ID) is based on identity matching of vehicles across non-overlapping camera views. Recently, research on vehicle re-ID has attracted increased attention, mainly due to its prominent industrial applications, such as post-crime analysis, traffic flow analysis, and wide-area vehicle tracking. However, despite the increased interest, the problem remains challenging. One of the most significant difficulties of vehicle re-ID is the large viewpoint variation caused by non-standardized camera placements. In this study, to improve re-ID robustness against viewpoint variations while preserving algorithm efficiency, we exploit vehicle orientation information. First, we analyze and benchmark various deep learning architectures in terms of performance, memory use, and cost for their applicability to orientation classification. Second, the extracted orientation information is used to improve the vehicle re-ID task. For this, we propose a viewpoint-aware multi-branch network that improves vehicle re-ID performance without increasing the forward inference time. Third, we introduce a viewpoint-aware mini-batching approach, which yields improved training and higher re-ID performance. The experiments show an increase of 4.0% mAP and 4.4% rank-1 score on the popular VeRi dataset with the proposed mini-batching strategy, and overall, an increase of 2.2% mAP and 3.8% rank-1 score compared to the ResNet-50 baseline.
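
For readers unfamiliar with the two reported metrics, the sketch below computes rank-1 accuracy and mAP from a query-gallery distance matrix. It is a simplified illustration: it omits the same-camera filtering that re-ID benchmarks commonly apply, and the function name and toy data are hypothetical.

```python
import numpy as np

def rank1_and_map(dist, query_ids, gallery_ids):
    """Rank-1 accuracy and mean average precision from a distance matrix
    of shape (n_queries, n_gallery); smaller distance = better match."""
    dist = np.asarray(dist, dtype=float)
    query_ids = np.asarray(query_ids)
    gallery_ids = np.asarray(gallery_ids)
    rank1_hits, aps = [], []
    for q in range(dist.shape[0]):
        order = np.argsort(dist[q], kind="stable")       # best match first
        matches = gallery_ids[order] == query_ids[q]
        if not matches.any():
            continue                                     # no true match: skip query
        rank1_hits.append(float(matches[0]))
        hit_ranks = np.flatnonzero(matches) + 1          # 1-based ranks of true matches
        precision_at_hits = np.arange(1, hit_ranks.size + 1) / hit_ranks
        aps.append(precision_at_hits.mean())
    return float(np.mean(rank1_hits)), float(np.mean(aps))

# One query of identity 1 against a gallery with identities 1, 2, 1.
r1, mean_ap = rank1_and_map([[0.5, 0.1, 0.9]], [1], [1, 2, 1])
```

In this toy case the closest gallery item has the wrong identity, so rank-1 is 0, while the two true matches at ranks 2 and 3 give an average precision of (1/2 + 2/3) / 2.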

In this paper, we propose a new fire monitoring system that automatically detects fire flames at night-time using a CCD camera. The proposed system consists of two cascading steps to reliably detect fire regions. First, ELASTIC-YOLOv3 is proposed to better detect small fires. The main role of ELASTIC-YOLOv3 is to find fire candidate regions in images as the first step. The candidate fire regions are passed to the second, verification step to obtain more reliable fire regions. The second step takes into account the dynamic characteristics of fire. To do this, we construct fire-tubes by connecting the fire candidate regions detected in several frames, and extract the histogram of optical flow (HOF) from each fire-tube. However, because the extracted HOF feature vector is considerably large, it is reduced using a predefined bag of features (BoF) and then passed to a fast random forest classifier, instead of a heavy recurrent neural network (RNN), to verify the final fire regions. Experiments show that the proposed method achieves a faster processing time and higher fire detection accuracy, with fewer missed detections and false alarms.
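
The HOF feature used in the verification step can be sketched as a magnitude-weighted histogram of flow directions, concatenated over the frames of a tube. The bin count, the magnitude threshold, and the function names below are assumptions for illustration; the optical flow itself is taken as given.

```python
import numpy as np

def histogram_of_optical_flow(flow, n_bins=8, min_mag=1e-3):
    """flow: HxWx2 array of (dx, dy). Returns an L1-normalised histogram of
    flow directions, each vector weighted by its magnitude."""
    dx = flow[..., 0].ravel()
    dy = flow[..., 1].ravel()
    mag = np.hypot(dx, dy)
    ang = np.arctan2(dy, dx) % (2 * np.pi)        # direction in [0, 2*pi)
    keep = mag > min_mag                          # ignore near-static pixels
    bins = np.minimum((ang[keep] / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    hist = np.bincount(bins, weights=mag[keep], minlength=n_bins).astype(float)
    total = hist.sum()
    return hist / total if total > 0 else hist

def tube_descriptor(flows, n_bins=8):
    """Concatenate per-frame HOFs over the frames of one fire-tube."""
    return np.concatenate([histogram_of_optical_flow(f, n_bins) for f in flows])

# Three frames of purely upward motion inside a hypothetical candidate region.
up = np.zeros((16, 16, 2))
up[..., 1] = 1.0
descriptor = tube_descriptor([up, up, up])
print(descriptor.shape)                           # (24,)
```

The descriptor length grows with both the bin count and the tube length, which is why a codebook-based reduction such as the BoF step is applied before classification.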

Image deconvolution has recently been an important topic. There are two kinds of approaches: non-blind and blind. Non-blind deconvolution is a classic image deblurring problem, which assumes that the point spread function (PSF) is known and spatially invariant. Recently, convolutional neural networks (CNNs) have been used for non-blind deconvolution. Though CNNs can deal with complex changes in unknown images, some conventional CNN-based methods can only handle small PSFs and do not consider the large PSFs encountered in the real world. In this paper, we propose a non-blind deconvolution framework based on a CNN that can remove large-scale ringing in a deblurred image. Our method has three key points. The first is that our network architecture is able to preserve both large and small features in the image. The second is that the training dataset is created to preserve details. The third is that we extend the images to minimize the effects of large ringing at the image borders. In our experiments, we used three kinds of large PSFs and observed high-precision results from our method, both quantitatively and qualitatively.
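
The idea of extending the image before deconvolution can be illustrated with a classical baseline. The sketch below uses Wiener deconvolution with edge-replicated padding, which is a swapped-in technique and not the CNN framework proposed in the paper; the padding width, the noise-to-signal constant `nsr`, and the function names are assumptions.

```python
import numpy as np

def psf_to_otf(psf, shape):
    """Zero-pad the PSF to `shape`, centre it at the origin, and take its FFT."""
    kh, kw = psf.shape
    padded = np.zeros(shape)
    padded[:kh, :kw] = psf
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)

def wiener_deconvolve(blurred, psf, nsr=1e-3, pad=None):
    """Non-blind Wiener deconvolution of a 2D image with a known PSF.
    The image is extended by edge replication first, then cropped back,
    so that ringing from the periodic boundary falls outside the result."""
    kh, kw = psf.shape
    pad = max(kh, kw) if pad is None else pad
    ext = np.pad(blurred, pad, mode="edge")
    H = psf_to_otf(psf, ext.shape)
    G = np.fft.fft2(ext)
    F = np.conj(H) * G / (np.abs(H) ** 2 + nsr)   # Wiener filter
    out = np.real(np.fft.ifft2(F))
    h, w = blurred.shape
    return out[pad:pad + h, pad:pad + w]

# Hypothetical example: a flat image and a 3x3 box PSF.
flat = np.full((8, 8), 2.0)
box = np.full((3, 3), 1.0 / 9.0)
restored = wiener_deconvolve(flat, box)
```

Larger PSFs call for a larger `pad`, since the border region affected by ringing scales with the PSF support.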

Over-exposure happens often in daily-life photography because the range of light far exceeds the limited dynamic range of current imaging sensors. Over-exposure correction aims to recover the fine details from the input. Most existing methods are based on manual image pixel manipulation and are therefore often tedious and time-consuming. In this paper, we present the first convolutional neural network (CNN) capable of inferring a photo-realistic natural image from a single over-exposed photograph. To achieve this, we propose a simple and lightweight Over-Exposure Correction CNN, namely OEC-cnn, and construct a synthesized dataset that covers various scenes and exposure rates to facilitate training. By doing so, we effectively replace the manual fixing operations with an end-to-end automatic correction process. Experiments on both synthesized and real-world datasets demonstrate that the proposed approach performs significantly better than existing methods, and its simplicity and robustness make it a very useful tool for practical over-exposure correction. Our code and synthesized dataset will be made publicly available.
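
One simple way to synthesize over-exposed training inputs is to scale a well-exposed image and clip it to the sensor range. The sketch below assumes linear intensities in [0, 1] and a multiplicative exposure rate; the abstract does not describe how the dataset was actually generated, so this is only an illustration with hypothetical function names.

```python
import numpy as np

def simulate_overexposure(image, exposure_rate):
    """Scale a linear image in [0, 1] by exposure_rate and clip to [0, 1];
    values pushed above 1 saturate, which destroys highlight detail."""
    if exposure_rate < 1.0:
        raise ValueError("exposure_rate should be >= 1 for over-exposure")
    return np.clip(np.asarray(image, dtype=float) * exposure_rate, 0.0, 1.0)

def saturated_fraction(image):
    """Fraction of values that have reached the maximum of the range."""
    return float((np.asarray(image) >= 1.0).mean())

# Build (over-exposed input, clean target) pairs at several exposure rates.
rng = np.random.default_rng(0)
clean = rng.random((8, 8, 3))
pairs = [(simulate_overexposure(clean, r), clean) for r in (1.5, 2.0, 3.0)]
print([round(saturated_fraction(x), 2) for x, _ in pairs])
```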