Extended Reality (XR) technologies are revolutionising how we interact with digital content, transforming how we perceive reality, and enhancing our problem-solving capabilities. However, many XR applications remain technology-driven, often disregarding the broader context of their use and failing to address fundamental human needs. In this paper, we present a teaching-led design project that asks postgraduate design students to explore the future of XR through low-fidelity, screen-free prototypes that respond to human needs observed at six specific locations in central London, UK. By using the city and the built environment as lenses for exploring everyday scenarios, the project encourages design provocations rooted in real-world challenges. Through this exploration, we aim to inspire new perspectives on the future of XR, advocating for human-centred, inclusive, and accessible solutions. By bridging the gap between technological innovation and lived experience, this project outlines a pathway toward XR technologies that prioritise societal benefit and address real human needs.

Virtual Reality (VR) technology has experienced remarkable growth, steadily establishing itself within mainstream consumer markets. This rapid expansion presents exciting opportunities for innovation and application across various fields, from entertainment to education and beyond. However, it also underscores a pressing need for more comprehensive research into user interfaces and human-computer interaction within VR environments. Understanding how users engage with VR systems and how their experiences can be optimized is crucial for further advancing the field and unlocking its full potential. This project introduces ScryVR, an innovative infrastructure designed to simplify and accelerate the development, implementation, and management of user studies in VR. By providing researchers with a robust framework for conducting studies, ScryVR aims to reduce the technical barriers often associated with VR research, such as complex data collection, hardware compatibility, and system integration challenges. Its goal is to empower researchers to focus more on study design and analysis, minimizing the time spent troubleshooting technical issues. By addressing these challenges, ScryVR has the potential to become a pivotal tool for advancing VR research methodologies. Its continued refinement will enable researchers to conduct more reliable and scalable studies, leading to deeper insights into user behavior and interaction within virtual environments. This, in turn, will drive the development of more immersive, intuitive, and impactful VR experiences.

Intelligent assistance applications hold enormous potential to extend the range of tasks people can perform, increase the speed and accuracy of task performance, and provide high-quality documentation for record keeping. However, modern perception and reasoning techniques based on massive foundation-model networks are too computationally demanding to run on edge devices. Computation can be offloaded to a remote server, but latency and security concerns often rule this out. Distillation and quantization can compress networks, but we still face the challenge of obtaining sufficient training data for all possible task executions. We propose a hybrid ensemble architecture that combines intelligent switching among special-purpose networks with a symbolic reasoner to provide assistance on modest hardware while still allowing robust and sophisticated reasoning. The reasoner's rich representations can also be used to identify mistakes in complex procedures. Because system inferences are still imperfect, users can become confused about what the system expects and get frustrated. An interface that makes the capabilities and limitations of perception and reasoning transparent to users dramatically improves the usability of the system. Importantly, our interface provides feedback without compromising situational awareness, through well-designed audio cues and compact icon-based feedback.
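The combination of network switching and symbolic checking described above can be pictured with a minimal sketch. It assumes that a procedure is a set of named steps, each with prerequisite steps, and that each step has one specialist recognizer network. The step names, the network names (`hand_object_net`, `small_part_net`), and the `Step`, `ProcedureChecker`, and `select_specialists` helpers are all hypothetical stand-ins, not the authors' architecture. The reasoner flags recognized actions whose prerequisites are unmet, and the switcher runs only the specialists that can recognize a step that is currently plausible.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Step:
    name: str
    # Names of steps that must be completed before this one (hypothetical schema).
    requires: frozenset = frozenset()


@dataclass
class ProcedureChecker:
    """Symbolic reasoner sketch: tracks completed steps and flags order violations."""
    steps: dict  # step name -> Step
    done: set = field(default_factory=set)

    def expected_next(self):
        """Steps not yet done whose prerequisites are all satisfied."""
        return sorted(
            name for name, step in self.steps.items()
            if name not in self.done and step.requires <= self.done
        )

    def observe(self, action):
        """Check a recognized action against the procedure and return a status string."""
        if action not in self.steps:
            return f"unknown action: {action}"
        missing = self.steps[action].requires - self.done
        if missing:
            # The action is not recorded as done, so the user can still correct it.
            return f"mistake: {action} before {', '.join(sorted(missing))}"
        self.done.add(action)
        return "ok"


def select_specialists(checker, specialist_for_step):
    """Switching sketch: run only the networks that can recognize a plausible next step."""
    return sorted({specialist_for_step[s] for s in checker.expected_next()})


# Hypothetical three-step procedure and step-to-network mapping.
steps = {
    "remove_cover": Step("remove_cover"),
    "insert_battery": Step("insert_battery", frozenset({"remove_cover"})),
    "close_cover": Step("close_cover", frozenset({"insert_battery"})),
}
specialist_for_step = {
    "remove_cover": "hand_object_net",
    "insert_battery": "small_part_net",
    "close_cover": "hand_object_net",
}
```

Limiting inference to the specialists for plausible next steps is one way to keep per-frame cost low on modest hardware; the authors' actual switching policy may differ.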

Virtual and augmented reality technologies have advanced significantly and come down in price over the last few years. They can serve as powerful tools for highly interactive visualization of a wide variety of data types. However, setting up and managing a virtual and augmented reality laboratory can be quite involved, particularly with large-screen display systems. This keynote presentation will outline key elements that make this task more manageable, discussing the frameworks and components needed to integrate the hardware and software into a coherent package. Example visualizations and applications from a variety of disciplines will be discussed to illustrate the versatility of the virtual and augmented reality environments that the laboratories make available to faculty and students for their research.

Many extended reality systems use controllers, e.g. near-infrared motion trackers or magnetic coil-based hand-tracking devices, for users to interact with virtual objects. These interfaces lack tangible sensation, especially during walking, running, crawling, and manipulating an object. Special devices such as the Tesla suit and omnidirectional treadmills can improve tangible interaction. However, they are bulky, expensive, and not flexible enough for broader applications. In this study, we developed a configurable multi-modal sensor fusion interface for extended reality applications. The system includes wearable IMU motion sensors, gait classification, gesture tracking, and data streaming interfaces to AR/VR systems. This system has several advantages. First, it is reconfigurable for multiple dynamic tangible interactions, such as walking, running, crawling, and operating an actual physical object, without any controllers. Second, it fuses multi-modal sensor data from the IMU and from sensors on the AR/VR headset, such as floor detection. Third, it is more affordable than many existing solutions. We have prototyped tangible extended reality in several applications, including pre-flight walk-around checks of a medical helicopter, firefighter search and rescue training, and tool tracking for airway intubation training with haptic interaction on a physical mannequin.
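Gait classification from wearable IMU data can be approached in many ways. The sketch below uses one of the simplest, assuming a uniformly sampled acceleration-magnitude signal in m/s² and counting local maxima as steps. The peak threshold, minimum step gap, and cadence boundaries between standing, walking, and running are illustrative assumptions, not values from the study, and the sketch does not handle crawling or gestures.

```python
import math


def step_cadence(acc_mag, fs, threshold=11.0, min_gap_s=0.25):
    """Steps per minute from acceleration magnitude (m/s^2) sampled at fs Hz.

    A step is counted at a local maximum above `threshold`; peaks closer than
    `min_gap_s` seconds to the previously counted step are ignored.
    """
    min_gap = int(min_gap_s * fs)
    steps, last = 0, -min_gap
    for i in range(1, len(acc_mag) - 1):
        is_peak = (acc_mag[i] > threshold
                   and acc_mag[i] >= acc_mag[i - 1]
                   and acc_mag[i] > acc_mag[i + 1])
        if is_peak and i - last >= min_gap:
            steps += 1
            last = i
    minutes = len(acc_mag) / fs / 60.0
    return steps / minutes


def classify_gait(cadence_spm, walk_min=40.0, run_min=140.0):
    """Map cadence (steps/min) to a coarse gait label; thresholds are illustrative."""
    if cadence_spm < walk_min:
        return "standing"
    if cadence_spm < run_min:
        return "walking"
    return "running"


if __name__ == "__main__":
    fs = 100  # Hz
    # Synthetic 10 s signal: gravity plus a 1.8 Hz step oscillation.
    walk = [9.81 + 3.0 * math.sin(2 * math.pi * 1.8 * i / fs) for i in range(10 * fs)]
    cadence = step_cadence(walk, fs)
    print(round(cadence), classify_gait(cadence))
```

In a fused system, a label like this could be combined with headset cues such as floor detection before being streamed to the AR/VR application; real IMU data would also need filtering before peak counting.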

We have developed an assistive technology for people with central field loss (CFL) and low contrast sensitivity (LCS). Our technology consists of a pair of holographic AR glasses that provide image magnification and contrast enhancement, together with functions such as highlighting objects and detecting signs and words. In contrast to prevailing AR technologies, which project either mixed reality objects or virtual objects onto the glasses, our solution fuses real-time sensory information and enhances images of reality. The AR glasses have two advantages. First, they are relatively fail-safe: if the battery dies or the processor crashes, the glasses still function because they are transparent. Second, the glasses can be turned into a VR or AR simulator by overlaying virtual objects, such as pedestrians or vehicles, onto the glasses. The real-time visual enhancement and alert information are overlaid on the transparent glasses. The visual enhancement modules include zooming, Fourier filters, contrast enhancement, and contour overlay. Our preliminary tests with low-vision patients show that the AR glasses improved patients' vision and mobility; for example, visual acuity improved from 20/80 to 20/25 or 20/30.
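Two of the listed enhancement modules, contrast enhancement and zooming, can be illustrated on a grayscale frame stored as a list of rows. This is a minimal sketch, assuming 8-bit intensities; the quantile-based linear stretch (with approximate quantiles taken by index) and pixel-replication zoom are generic techniques chosen for illustration, and the function names are hypothetical. Fourier filtering and contour overlay are not shown.

```python
def contrast_stretch(img, low_pct=0.02, high_pct=0.98):
    """Linearly map approximate [low_pct, high_pct] intensity quantiles to [0, 255]."""
    flat = sorted(v for row in img for v in row)
    lo = flat[int(low_pct * (len(flat) - 1))]
    hi = flat[int(high_pct * (len(flat) - 1))]
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in img]
    scale = 255.0 / (hi - lo)
    # Values outside the quantile range are clipped to 0 or 255.
    return [[min(255, max(0, round((v - lo) * scale))) for v in row] for row in img]


def zoom_roi(img, top, left, height, width, factor):
    """Crop a region of interest and enlarge it by an integer factor (pixel replication)."""
    roi = [row[left:left + width] for row in img[top:top + height]]
    out = []
    for row in roi:
        wide = [v for v in row for _ in range(factor)]  # repeat each pixel horizontally
        out.extend(list(wide) for _ in range(factor))   # repeat each row vertically
    return out


if __name__ == "__main__":
    frame = [[100, 110], [120, 130]]  # tiny low-contrast test frame
    stretched = contrast_stretch(frame, 0.0, 1.0)
    print(zoom_roi(stretched, 0, 0, 2, 2, 2))
```

Stretching before zooming keeps the enlarged region at full contrast; a real pipeline would operate on camera frames and likely use an image library rather than nested lists.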

We present a head-mounted holographic display system for thermographic image overlay, biometric sensing, and wireless telemetry. The system is lightweight and reconfigurable for multiple field applications, including object contour detection and enhancement, breathing rate detection, and telemetry over a mobile phone for peer-to-peer communication and an incident command dashboard. Because of the limited computing power of the embedded system, we developed lightweight image processing algorithms for edge detection and breathing rate detection, as well as an image compression codec. The system can be integrated into a helmet or personal protective equipment such as a face shield or goggles. It can be applied to firefighting, medical emergency response, and other first-response operations. Finally, we present a case study of "Cold Trailing" for forest fire prevention in the wild.
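Breathing rate detection on an embedded processor can be sketched as finding the dominant frequency of a slowly varying signal, for example the mean temperature of a region around the nostrils across thermal frames (an assumption; the abstract does not describe the authors' method). The hypothetical function below evaluates a plain discrete Fourier transform only over bins in an assumed breathing band of 0.1 to 1.0 Hz (6 to 60 breaths per minute) and converts the strongest bin to breaths per minute.

```python
import math


def breath_rate_bpm(signal, fs, f_lo=0.1, f_hi=1.0):
    """Estimate breaths per minute from a 1-D breathing signal sampled at fs Hz.

    f_lo and f_hi bound the assumed breathing band in Hz.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset so it cannot dominate
    # Only DFT bins k whose frequency k * fs / n lies inside the band are evaluated.
    k_lo = max(1, math.ceil(f_lo * n / fs))
    k_hi = math.floor(f_hi * n / fs)
    best_k, best_power = None, -1.0
    for k in range(k_lo, k_hi + 1):
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    if best_k is None:
        raise ValueError("window too short to resolve the breathing band")
    return best_k * fs / n * 60.0


if __name__ == "__main__":
    fs = 10  # thermal frames per second (assumed)
    # Synthetic 60 s trace: 30 °C baseline with a 0.25 Hz breathing oscillation.
    trace = [30.0 + 0.2 * math.sin(2 * math.pi * 0.25 * i / fs) for i in range(60 * fs)]
    print(breath_rate_bpm(trace, fs))
```

Evaluating only the in-band bins keeps the cost at (number of band bins) × (window length) instead of a full spectrum, which suits a constrained processor; frequency resolution is fs/n, so longer windows give finer rate estimates.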

Incident Command Dashboards (ICDs) play an essential role in Emergency Support Functions (ESF). They are centralized and must handle massive amounts of live data. In this project, we explore a decentralized mobile incident commanding dashboard (MIC-D) with an improved mobile augmented reality (AR) user interface (UI) that can access and display multimodal live IoT data streams on phones, tablets, and inexpensive HUDs mounted on first responders’ helmets. The new platform is designed to work in the field and to share live data streams among team members. It also enables users to view 3D LiDAR scans of the location, live thermal video, and vital-sign data on a 3D map. We have built a virtual medical helicopter communication center and tested it in launchpad fire and remote fire-extinguishing scenarios. We have also tested the wildfire prevention scenario “Cold Trailing” in an outdoor environment.

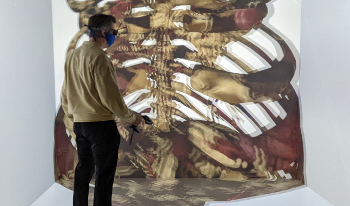

Simulation is a recognized and much-appreciated tool in healthcare and education. Advances in simulation have given rise to a variety of technologies, and in recent years one such advancement has been Augmented Reality (AR). AR simulations have been implemented in healthcare on various fronts, using a range of devices including cellphones, tablets, and wearable AR headsets. AR headsets offer the most immersive AR simulation experience because they are head-mounted and provide, through the attached goggles, a stereoscopic view of 3D models superimposed on real-world surfaces. It is therefore important to understand how AR headsets perform under different workloads. In this paper, our objective is to compare the performance of two prominent current AR headsets, the Microsoft HoloLens and the Magic Leap One. As a reference application for obtaining performance numbers for these devices, we use surgical AR software that allows surgeons to display internal structures, such as the rib cage, to assist in surgery. To our knowledge, no performance measurements or recommendations are yet available for this class of devices.
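Headset performance under a given workload is often summarized from per-frame render times. The sketch below assumes that frame times in milliseconds are logged for each device and workload, and reports mean frame rate, a nearest-rank 99th-percentile frame time, and the share of frames that exceed the budget of a target refresh rate. The `frame_stats` helper is hypothetical, and its 60 Hz default is an assumption for illustration, not a specification of either headset.

```python
import math
import statistics


def frame_stats(frame_times_ms, target_hz=60.0):
    """Summarize per-frame render times (ms) for one device and workload."""
    if not frame_times_ms:
        raise ValueError("no frames recorded")
    budget_ms = 1000.0 / target_hz  # per-frame time budget at the target refresh rate
    n = len(frame_times_ms)
    ordered = sorted(frame_times_ms)
    # Nearest-rank 99th percentile: the smallest frame time that at least 99% of frames do not exceed.
    p99 = ordered[math.ceil(0.99 * n) - 1]
    return {
        "mean_fps": 1000.0 / statistics.mean(frame_times_ms),
        "p99_frame_ms": p99,
        "missed_budget_pct": 100.0 * sum(t > budget_ms for t in frame_times_ms) / n,
    }


if __name__ == "__main__":
    # Hypothetical log: mostly 16 ms frames with two slow frames.
    log = [16.0] * 98 + [20.0, 40.0]
    print(frame_stats(log))
```

Reporting a tail percentile alongside the mean matters because occasional long frames can cause visible hologram judder even when the average frame rate looks acceptable.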

The color appearance of transparent objects is not adequately described by colorimetry or color appearance models. Although the retinal projection of a transparent object is a combination of its color and the background, measurements of this physical combination fail to predict the saliency with which we perceive the object's color. When the perceived color forms in the mind, awareness of the physical relationship between object and background separates the physical combination into two distinct perceptions. This is known as color scissioning. In this paper, a psychophysical experiment that uses a see-through augmented reality display to compare virtual transparent color samples with real color samples is described; its results confirm the scissioning effect for the lightness and chroma attributes. A previous model of color scissioning for AR viewing conditions is tested against this new data and does not satisfactorily predict the observers' perceptions. However, the model is still found to be a useful tool for analyzing color scissioning and provides valuable insight into future research directions.
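The physical combination mentioned above can be made concrete for an optical see-through display, where the light reaching the eye is approximately the display light plus the background light transmitted through the combiner. The sketch assumes additive mixing in CIE XYZ with a single hypothetical combiner transmittance and a D65 reference white, then computes CIELAB lightness and chroma with the standard CIELAB formulas. It is not the scissioning model tested in the paper; it only shows the colorimetric prediction that scissioning departs from: for a background proportional to the reference white, the mixture is lighter and less chromatic than the display color alone.

```python
import math

# CIE XYZ of the D65 reference white, with Y normalized to 100.
D65_WHITE = (95.047, 100.0, 108.883)


def _f(t):
    """CIELAB nonlinearity: cube root above (6/29)^3, linear segment below."""
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3.0 * delta ** 2) + 4.0 / 29.0


def xyz_to_lch(xyz, white=D65_WHITE):
    """Convert XYZ to CIELAB lightness L*, chroma C*ab, and hue angle h in degrees."""
    fx, fy, fz = (_f(c / w) for c, w in zip(xyz, white))
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return L, math.hypot(a, b), math.degrees(math.atan2(b, a)) % 360.0


def see_through_mixture(display_xyz, background_xyz, transmittance=1.0):
    """Additive model: display light plus background light attenuated by the combiner."""
    return tuple(d + transmittance * b for d, b in zip(display_xyz, background_xyz))


if __name__ == "__main__":
    display = (30.0, 20.0, 10.0)                   # hypothetical virtual sample
    grey = tuple(0.5 * w for w in D65_WHITE)       # achromatic real background
    print(xyz_to_lch(display))
    print(xyz_to_lch(see_through_mixture(display, grey)))
```

Observers who experience scissioning tend to see the virtual sample's own color more saliently than this mixture predicts, which is why a colorimetric calculation of this kind is not sufficient on its own.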