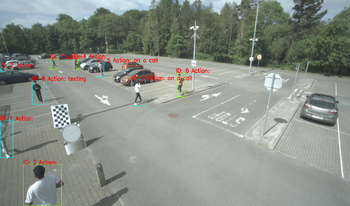

In this paper, we present a database of annotations for videos showing a number of people performing several actions in a parking lot. The chosen actions represent situations in which a pedestrian could be distracted and not fully aware of her surroundings: “looking behind”, “on a call”, and “texting”, plus a fourth label, “no action”, used when the person performs none of these actions. In addition to actions, the speed of each person is labeled with one of three values: “standing”, “walking”, or “running”. Bounding boxes of the people present in each frame are also provided, along with a unique identifier for each person. The main goal is to provide the research community with examples of actions that are of interest for surveillance and safe autonomous driving. Labeling the person's speed during each action gives researchers richer information: for example, “running” while “on a call” or “looking behind” can be treated as more dangerous behavior than “walking” while doing the same.
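The annotation scheme described above (one record per person per frame, with a bounding box, a unique identifier, an action label and a speed label) can be sketched as a small Python data structure. This is a minimal sketch, not the dataset's released format: the CSV row layout, the `(x_min, y_min, x_max, y_max)` box convention, and the names `PersonAnnotation`, `parse_row` and `is_distracted` are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Action(Enum):
    LOOKING_BEHIND = "looking behind"
    ON_A_CALL = "on a call"
    TEXTING = "texting"
    NO_ACTION = "no action"


class Speed(Enum):
    STANDING = "standing"
    WALKING = "walking"
    RUNNING = "running"


@dataclass(frozen=True)
class PersonAnnotation:
    frame: int
    person_id: int  # unique identifier of the person across the video
    box: Tuple[int, int, int, int]  # assumed (x_min, y_min, x_max, y_max) in pixels
    action: Action
    speed: Speed

    def is_distracted(self) -> bool:
        # Any label other than "no action" is one of the distraction actions.
        return self.action is not Action.NO_ACTION


def parse_row(row: str) -> PersonAnnotation:
    """Parse a hypothetical CSV row: frame,person_id,x_min,y_min,x_max,y_max,action,speed."""
    fields = [f.strip() for f in row.split(",")]
    frame, person_id, x0, y0, x1, y1 = (int(f) for f in fields[:6])
    return PersonAnnotation(frame, person_id, (x0, y0, x1, y1),
                            Action(fields[6]), Speed(fields[7]))


ann = parse_row("12,3,100,50,160,220,on a call,running")
print(ann.action.value, ann.speed.value, ann.is_distracted())  # on a call running True
```

Keeping action and speed as separate enums mirrors the two independent label sets, so a query such as "distracted and running" is a simple conjunction of the two fields.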

Hand hygiene is essential for food safety, particularly among food handlers. Maintaining proper hand hygiene can improve food safety and promote public welfare. However, traditional methods of evaluating hygiene during food handling, such as visual auditing by human experts, can be costly and inefficient compared to a computer vision system. Because real-world food processing sites vary in conditions and location, computer vision systems for recognizing handwashing actions can be sensitive to changes in lighting and environment. We therefore design a robust and generalizable video system based on ResNet50 that includes a hand extraction method and a two-stream network for classifying handwashing actions. Specifically, our hand extraction method eliminates the background and helps the classifier focus on hand regions under changing lighting conditions and environments. Our results demonstrate that the system with hand extraction improves action recognition accuracy and generalizes better: evaluated on completely unseen data, it achieves an improvement of over 20% in overall classification accuracy.
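The abstract does not specify how the hand mask is produced or how the two streams are combined. The sketch below assumes a binary hand mask is already available and uses weighted late fusion (averaging the softmax probabilities of the two streams), a common choice for two-stream networks; the function names, the `hand_weight` parameter, and the hard-coded logits standing in for ResNet50 outputs are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def apply_hand_mask(frame: List[List[int]], mask: List[List[bool]]) -> List[List[int]]:
    """Zero out background pixels so only (assumed) hand regions reach the hand stream."""
    return [[px if keep else 0 for px, keep in zip(row, mrow)]
            for row, mrow in zip(frame, mask)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(rgb_logits: Sequence[float], hand_logits: Sequence[float],
                hand_weight: float = 0.5) -> Tuple[int, List[float]]:
    """Weighted average of per-stream class probabilities; returns (label, probs)."""
    p_rgb = softmax(rgb_logits)
    p_hand = softmax(hand_logits)
    fused = [(1.0 - hand_weight) * a + hand_weight * b for a, b in zip(p_rgb, p_hand)]
    label = max(range(len(fused)), key=fused.__getitem__)
    return label, fused


# Hard-coded logits stand in for the outputs of two ResNet50 streams.
label, probs = late_fusion([2.0, 1.0, 0.0], [0.0, 3.0, 0.0])
print(label)  # 1
```

Here the full-frame stream alone would pick class 0, but the confident hand stream shifts the fused decision to class 1, which illustrates why a background-free hand stream can help when the full frame is dominated by lighting or scenery.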

Skeleton-based action recognition plays a critical role in computer vision research, and its applications have been widely deployed in many areas. Thanks to graph convolutional networks (GCNs), performance on this task has improved dramatically, owing to the power of GCNs for modeling non-Euclidean data. However, most of these works are designed for clean skeleton data, whereas in practice such data is usually noisy: most of it is captured with depth cameras or even estimated from RGB cameras, rather than recorded by the high-quality but extremely costly Motion Capture (MoCap) [1] system. To address this, we propose a novel GCN framework with adversarial training to handle noisy skeleton data. Guided by clean data at the semantic level, the framework extracts a reliable graph embedding for noisy skeleton data. In addition, a discriminator is introduced so that the feature representation can be further improved, since it is learned in an adversarial fashion. We empirically evaluate the proposed framework on two of the largest current skeleton-based action recognition datasets. Comparison results show the superiority of our method over state-of-the-art methods under noisy settings.
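To show how a GCN operates on a skeleton, the sketch below implements one spatial graph-convolution layer with the symmetrically normalized propagation rule `H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W)`, where joints are nodes, bones are edges, and `D` is the degree matrix of `A + I`. This is the generic rule of Kipf and Welling, assumed here for illustration; the abstract does not state which GCN variant the framework uses, and temporal modeling and the adversarial discriminator are not shown. The three-joint chain and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def normalized_adjacency(edges: Sequence[Tuple[int, int]], num_joints: int) -> Matrix:
    """Return D^(-1/2) (A + I) D^(-1/2) for an undirected skeleton graph."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)] for i in range(num_joints)]
    for i, j in edges:  # each bone connects two joints
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]  # degree including the self-loop
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)]
            for i in range(num_joints)]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(adj_norm: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One graph convolution: ReLU(adj_norm @ features @ weights)."""
    out = matmul(matmul(adj_norm, features), weights)
    return [[max(0.0, v) for v in row] for row in out]


# Hypothetical 3-joint chain (e.g. shoulder, elbow, wrist) with 2-D coordinates per joint.
adj = normalized_adjacency([(0, 1), (1, 2)], num_joints=3)
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w = [[1.0, 0.0], [0.0, 1.0]]  # identity weights, so the layer only mixes neighbours
print(gcn_layer(adj, h, w)[0][0])  # 0.5
```

Each joint's new feature is a degree-normalized mix of its own feature and its neighbours' features, which is why noise on one joint propagates along the bones; this is the effect a noise-robust embedding has to counter.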

On cattle farms, it is important to monitor cattle activity in order to assess the animals' health and prevent accidents. Conventional methods recognize cattle activity with sensors, but attaching sensors to an animal may cause stress. Cameras have also been used to recognize cattle activity, but identifying individual animals is difficult because cattle look similar, especially black or brown cattle. We propose a new method to identify cattle and recognize their activity using surveillance cameras. First, cattle are recognized with a CNN-based deep learning method. The face and body areas of each animal, as well as its sitting or standing state, are recognized separately at the same time. Image samples were collected during both day and night to train the model, so that cattle can be recognized 24 hours a day. Initial ID numbers are assigned to the recognized cattle in the first frame of the video, and the animals are then tracked with a particle filter. By combining the recognition and tracking results, each animal keeps its ID number in the following frames. Cattle activity is recognized over multiple video frames: in the face and body areas, activity is classified as active or static, and the activity time for each area is output as the cattle activity recognition result. Cattle identification and activity recognition experiments were conducted on a cattle farm using wide-angle surveillance cameras. Evaluation results demonstrate the effectiveness of our proposed method.
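The particle filter tracking step can be illustrated with a bootstrap (sampling importance resampling) filter that follows the bounding-box centre of one animal whose ID was assigned in the first frame. This is a sketch under stated assumptions: the random-walk motion model, the Gaussian detection likelihood, the parameter values, and the function name `track_centre` are hypothetical, and the abstract does not describe how the authors' filter is configured. Frames with a missed detection (`None`) are handled by prediction alone.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def track_centre(detections: Sequence[Optional[Point]], num_particles: int = 200,
                 motion_std: float = 5.0, obs_std: float = 10.0,
                 seed: int = 0) -> List[Point]:
    """Bootstrap particle filter over one animal's (x, y) bounding-box centre.

    detections[t] is the detector's centre at frame t, or None if it was missed.
    detections[0] initializes the track (the frame where the ID is assigned).
    """
    if detections[0] is None:
        raise ValueError("the first frame must contain a detection")
    rng = random.Random(seed)
    particles: List[Point] = [detections[0]] * num_particles
    weights = [1.0 / num_particles] * num_particles
    estimates: List[Point] = []
    for det in detections:
        # Predict: random-walk motion model (an assumption).
        particles = [(x + rng.gauss(0.0, motion_std), y + rng.gauss(0.0, motion_std))
                     for x, y in particles]
        if det is not None:
            # Update: weight particles by a Gaussian likelihood of the detection.
            dx, dy = det
            weights = [w * math.exp(-((x - dx) ** 2 + (y - dy) ** 2) / (2 * obs_std ** 2))
                       for w, (x, y) in zip(weights, particles)]
            total = sum(weights)
            weights = ([w / total for w in weights] if total > 0
                       else [1.0 / num_particles] * num_particles)
        # Estimate: weighted mean of the particles.
        estimates.append((sum(w * x for w, (x, _) in zip(weights, particles)),
                          sum(w * y for w, (_, y) in zip(weights, particles))))
        if det is not None:
            # Resample (multinomial) so particles concentrate where the likelihood is high.
            particles = rng.choices(particles, weights=weights, k=num_particles)
            weights = [1.0 / num_particles] * num_particles
    return estimates


track = track_centre([(100.0, 100.0), (102.0, 99.0), None, (104.0, 101.0)])
print(len(track))  # 4
```

Running one such filter per assigned ID is one simple way to carry IDs forward through frames where the detector misses an animal; how the authors actually combine recognition and tracking results is not specified in the abstract.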