Selecting the optimal resolution and post-processing techniques for 3D objects in cultural heritage documentation is one of the most distinctive challenges within 3D imaging. Many techniques exist to document a tangible object at very high objective accuracy, but there are also techniques that achieve similar perceptual accuracy without documenting the objective values. Storing complex geometric data and visualizing it can be fundamentally different applications, and if the two are not kept distinct, the result can be false or inaccurate digital documentation of a cultural heritage object. In this investigation we compare several metrics for evaluating the quality of a 3D object, both objectively and perceptually, and look at how the different approaches may report greatly different outputs depending on the post-processing of the 3D object. We also provide some insight into how to interpret the output of various metrics and how to compare them.

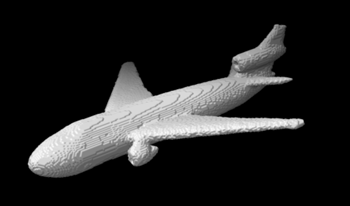

In this paper, we introduce silhouette tomography, a novel formulation of X-ray computed tomography that relies only on the geometry of the imaging system. We formulate silhouette tomography mathematically and provide a simple method for obtaining a particular solution to the problem, assuming that any solution exists. We then propose a supervised reconstruction approach that uses a deep neural network to solve the silhouette tomography problem. We present experimental results on a synthetic dataset that demonstrate the effectiveness of the proposed method.

Scientific and technological advances during the last decade in the fields of image acquisition, data processing, telecommunications, and computer graphics have contributed to the emergence of new multimedia, especially 3D digital data. Modern 3D imaging technologies allow for the acquisition of 3D and 4D (3D video) data at higher speeds, resolutions, and accuracies. With the ability to capture increasingly complex 3D/4D information, advancements have also been made in the areas of 3D data processing (e.g., filtering, reconstruction, compression). As such, 3D/4D technologies are now being used in a large variety of applications, such as medicine, forensic science, cultural heritage, manufacturing, autonomous vehicles, security, and bioinformatics. Further, with mixed reality (AR, VR, XR), 3D/4D technologies may also change the ways we work, play, and communicate with each other every day.

In recent years, behavioral biometric authentication, which uses habitual behavioral characteristics for personal authentication, has attracted attention as a more secure authentication method, since behavioral biometrics cannot be mimicked as fingerprint and face authentication can. Among behavioral biometrics, much research has been performed on voiceprints. However, there are few authentication technologies that utilize the habits of hand and finger movements during hand gestures. Conventional methods use only either color images or depth images for hand gesture authentication. In this research, therefore, we propose to extract individual habits from RGB-D images of finger movements and to create a personal authentication system. A 3D CNN, a deep learning-based network, is used to extract individual habits. An F-measure of 0.97 is achieved when rock-paper-scissors is used as the authentication operation. An F-measure of 0.97 is also achieved when the disinfection operation is used. These results show the effectiveness of using RGB-D video for personal authentication.
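As a brief illustration of the metric reported above, the following minimal sketch computes an F-measure from confusion counts. It assumes the common balanced form (F1, the harmonic mean of precision and recall); the abstract does not state which variant was used, and the function name `f_measure` and the example counts are hypothetical, not taken from the paper.

```python
def f_measure(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Return the balanced F-measure (F1) from confusion counts.

    Assumption: F1 is used; the source abstract only says "F-measure".
    """
    predicted_pos = true_pos + false_pos  # samples accepted as the genuine user
    actual_pos = true_pos + false_neg     # samples that really are the genuine user
    if predicted_pos == 0 or actual_pos == 0:
        return 0.0  # precision or recall is undefined; report 0 by convention
    precision = true_pos / predicted_pos
    recall = true_pos / actual_pos
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, chosen only to illustrate the calculation.
score = f_measure(true_pos=97, false_pos=3, false_neg=3)
```

With these illustrative counts, precision and recall are both 0.97, so their harmonic mean is 0.97 as well.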

This paper presents a novel method for accurately encoding 3D range geometry within the color channels of a 2D RGB image that allows the encoding frequency—and therefore the encoding precision—to be uniquely determined for each coordinate. The proposed method can thus be used to balance between encoding precision and file size by encoding geometry along a normal distribution; encoding more precisely where the density of data is high and less precisely where the density is low. Alternative distributions may be followed to produce encodings optimized for specific applications. In general, the nature of the proposed encoding method is such that the precision of each point can be freely controlled or derived from an arbitrary distribution, ideally enabling this method for use within a wide range of applications.

This paper describes the development of a low-cost, low-power, accurate sensor designed for precise feedback control of an autonomous vehicle to a hitch. The solution that has been developed uses an active stereo vision system, combining classical stereo vision with a low-cost, low-power laser speckle projection system, which solves the correspondence problem experienced by classic stereo vision sensors. A third camera is added to the sensor for texture mapping. A model test of the hitching problem was developed using an RC car and a target to represent a hitch. A control system is implemented to precisely control the vehicle to the hitch. The system can successfully control the vehicle from within 35° of perpendicular to the hitch, to a final position with an overall standard deviation of 3.0 mm of lateral error and 1.5° of angular error.
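For readers unfamiliar with classical stereo vision, the sketch below shows the standard relation between disparity and depth for a rectified camera pair, Z = f·B/d. It is a generic illustration, not the sensor's actual pipeline: the function name and all numeric values are hypothetical, and it assumes the correspondence problem has already been solved (for example, with the help of the projected speckle pattern).

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    focal_px     -- focal length in pixels (assumed known from calibration)
    baseline_m   -- distance between the two camera centres in metres
    disparity_px -- horizontal pixel shift of the same point between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical calibration values, for illustration only.
depth_m = depth_from_disparity(focal_px=700.0, baseline_m=0.1, disparity_px=35.0)
```

Because depth is inversely proportional to disparity, a fixed matching error in pixels produces a larger depth error at long range than at short range.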

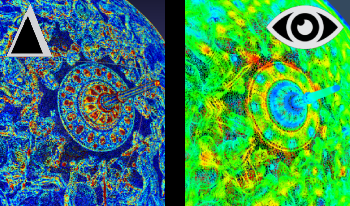

X-ray phase contrast tomography (XPCT) is widely used for 3D imaging of objects with weak contrast in X-ray absorption index but strong contrast in refractive index decrement. To reconstruct an object imaged using XPCT, phase retrieval algorithms are first used to estimate the X-ray phase projections, which are the 2D projections of the refractive index decrement, at each view. Phase retrieval is followed by refractive index decrement reconstruction from the phase projections using an algorithm such as filtered back projection (FBP). In practice, phase retrieval is most commonly solved by approximating it as a linear inverse problem. However, this linear approximation often results in artifacts and blurring when the conditions for the approximation are violated. In this paper, we formulate phase retrieval as a non-linear inverse problem, where we solve for the transmission function, which is the negative exponential of the projections, from XPCT measurements. We use a constraint to enforce proportionality between phase and absorption projections. We do not use constraints such as large Fresnel number, slowly varying phase, or Born/Rytov approximations. Our approach also does not require any regularization parameter tuning since there is no explicit sparsity-enforcing regularization function. We validate the performance of our non-linear phase retrieval (NLPR) method using both simulated and real synchrotron datasets. We compare NLPR with a popular linear phase retrieval (LPR) approach and show that NLPR achieves sharper reconstructions with higher quantitative accuracy.

In this paper, we present a novel Lidar imaging system for heads-up display. The imaging system consists of a one-dimensional laser distance sensor and IMU sensors, including an accelerometer and a gyroscope. By fusing the sensor data as the user moves their head, it creates a three-dimensional point cloud that maps the surrounding space. Compared to prevailing 2D and 3D Lidar imaging systems, the proposed system has no moving parts; it is simple, lightweight, and affordable. Our tests show that the horizontal and vertical profile accuracy of the points versus the floor plan is 3 cm on average. For bump detection, the minimal detectable step height is 2.5 cm. The system can be applied to first-response tasks such as firefighting, and to detecting bumps on pavement for low-vision pedestrians.
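The fusion idea described above can be sketched as follows: each one-dimensional range reading is combined with the head orientation at that instant to give one 3D point. This is a minimal illustration under stated assumptions, not the authors' algorithm. It assumes the orientation has already been estimated from the IMU as yaw and pitch angles, that the sensor stays at the origin, and that roll does not affect a beam along the viewing axis; the function names and sample values are hypothetical.

```python
import math


def range_to_point(distance_m: float, yaw_rad: float, pitch_rad: float) -> tuple:
    """Convert one range reading plus head orientation into an (x, y, z) point.

    Assumed frame: x forward, y left, z up; yaw about z, pitch upward from
    the horizontal plane; sensor fixed at the origin.
    """
    horizontal = distance_m * math.cos(pitch_rad)  # projection onto the floor plane
    x = horizontal * math.cos(yaw_rad)
    y = horizontal * math.sin(yaw_rad)
    z = distance_m * math.sin(pitch_rad)
    return (x, y, z)


def build_point_cloud(samples):
    """Map (distance_m, yaw_rad, pitch_rad) samples to a list of 3D points."""
    return [range_to_point(d, yaw, pitch) for d, yaw, pitch in samples]


# Hypothetical readings taken while the head sweeps left and then tilts up.
cloud = build_point_cloud([
    (2.0, 0.0, 0.0),
    (2.0, math.pi / 2, 0.0),
    (2.0, 0.0, math.pi / 2),
])
```

A real system would also need to track head translation and compensate for gyroscope drift, both of which this sketch ignores.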