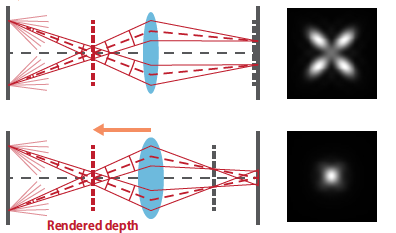

Light field (LF) displays are a promising 3D display technology for mitigating the vergence-accommodation conflict. We recently proposed a simulation framework that models an LF display system. It predicted that the in-focus optical resolution on the retina drops as the relative depth of a rendered image with respect to the display-specific optical reference depth grows. In this study, we measure the empirical optical resolution of a near-eye LF display prototype by capturing rendered test images, and we compare it with simulation results from the previously developed computational model. The prototype uses a time-multiplexing technique and achieves a high angular resolution of 6 × 6 viewpoints in the eyebox. The test image is rendered at depths ranging from 0 to 3 diopters, and the optical resolution of the best-focus images is analyzed from the camera captures. Comparing the measurements with the simulation results, we discuss the theoretical and practical limitations of LF displays.
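Because depths here are expressed in diopters (the reciprocal of distance in meters), the "relative depth" of a rendered image is a dioptric difference from the display's optical reference depth. The sketch below shows that conversion for the 0 to 3 D test range; the reference depth of 1.5 D is a hypothetical value chosen for illustration, not a property of the prototype.

```python
def diopters_to_meters(diopters: float) -> float:
    """Viewing distance (m) for a focal demand in diopters; 0 D means optical infinity."""
    return float("inf") if diopters == 0 else 1.0 / diopters


def relative_depth(image_depth_d: float, reference_depth_d: float) -> float:
    """Dioptric distance between the rendered image plane and the display's reference depth."""
    return abs(image_depth_d - reference_depth_d)


REFERENCE_DEPTH_D = 1.5  # hypothetical optical reference depth of the display, in diopters

for depth_d in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]:
    print(f"{depth_d:.1f} D -> {diopters_to_meters(depth_d):.3f} m, "
          f"relative depth {relative_depth(depth_d, REFERENCE_DEPTH_D):.1f} D")
```

Working in diopters rather than meters keeps equal steps of focal demand evenly spaced, which is why the predicted resolution loss is naturally plotted against dioptric relative depth.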

A layered light field display consists of several liquid crystal layers placed in front of a backlight. Light rays emitted from the backlight pass through different pixels on the layers depending on their outgoing directions, so the display can show multi-view images (a light field) that change with the viewing direction. Thanks to its dense angular resolution, this type of display can also be used in head-mounted displays (HMDs). Angular resolution is important because sufficiently dense angular sampling can provide accommodation cues, preventing the visual discomfort caused by the vergence-accommodation conflict. To further enhance the angular resolution of a layered display, we propose replacing some of the layers with monochrome layers. With the pixel size unchanged, our method achieves three times the horizontal resolution of the baseline architecture. To obtain a set of color and monochrome layer patterns for a target light field, we developed two computation methods, one based on non-negative tensor factorization and the other on a convolutional neural network.
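For intuition about the factorization approach, the simplest case is a two-layer, single-channel display: if every ray is identified by the back-layer pixel and front-layer pixel it crosses, the ray intensities form a matrix, and time-multiplexed frames turn layer optimization into non-negative matrix factorization. The sketch below uses Lee-Seung multiplicative updates under these simplifying assumptions. It is not the authors' tensor method: it treats every (back, front) pixel pair as a valid ray and ignores the [0, 1] transmittance bound, the time-averaging normalization, and the color/monochrome split. The function name and parameters are hypothetical.

```python
import numpy as np


def factorize_two_layers(light_field, n_frames=3, n_iters=500, seed=0, eps=1e-9):
    """Approximate a non-negative ray matrix L[i, j] ~ sum_t back[i, t] * front[t, j].

    Rows index back-layer pixels and columns index front-layer pixels; entry (i, j)
    is the target intensity of the ray through both pixels. The number of
    time-multiplexed frames is the rank of the factorization.
    """
    rng = np.random.default_rng(seed)
    n_back, n_front = light_field.shape
    back = rng.uniform(0.1, 1.0, (n_back, n_frames))
    front = rng.uniform(0.1, 1.0, (n_frames, n_front))
    for _ in range(n_iters):
        # Multiplicative updates never change sign, so both layers stay non-negative.
        front *= (back.T @ light_field) / (back.T @ back @ front + eps)
        back *= (light_field @ front.T) / (back @ front @ front.T + eps)
    return back, front


# Usage on a synthetic, exactly rank-3 ray matrix.
rng = np.random.default_rng(1)
target = rng.uniform(0, 1, (16, 3)) @ rng.uniform(0, 1, (3, 16))
back, front = factorize_two_layers(target)
print("relative error:", np.linalg.norm(target - back @ front) / np.linalg.norm(target))
```

Multiplicative updates are chosen here because they keep the layer patterns physically meaningful (non-negative) without an explicit projection step; a real layered display would also clamp or rescale to the achievable transmittance range.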

We propose a 3D display that scans light rays from a projector and produces stereoscopic images by arranging many long, thin mirror scanners with gaps between them and spinning each scanner. The proposal targets a large-screen 3D display that multiple people can view simultaneously with the naked eye. Previous studies proposed multi-projection 3D displays as large-screen 3D displays, but the large number of projectors complicates installation and adjustment. We therefore previously proposed a 3D display that achieves a large screen with a single projector. However, in that display the screen-swinging mechanism makes the screen vibrate, the scanning speed cannot be increased, and the displayed image appears to flicker. The newly proposed method uses a spinning mechanism to reduce screen vibration, increase the scanning speed, and prevent the displayed image from flickering. Computer simulations confirmed the principle of the proposed method and showed that appropriate parallax can be presented. We also examined the requirements and problems involved in manufacturing an actual device and designed a prototype.
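The scanning principle rests on the law of reflection: rotating a mirror by an angle θ deflects the reflected ray by 2θ, so projector frames synchronized with the mirror angle are sent toward different viewing directions. The sketch below illustrates this in a 2D top view; the geometry (a projector ray travelling along -x, a mirror spinning about a vertical axis) and the function names are hypothetical and not taken from the authors' design.

```python
import numpy as np


def reflect(direction, normal):
    """Mirror-reflect a 2D ray direction about a surface with the given normal."""
    n = normal / np.linalg.norm(normal)
    return direction - 2.0 * np.dot(direction, n) * n


def mirror_normal(angle_rad):
    """Normal of a thin mirror rotated by angle_rad about the vertical spin axis (top view)."""
    return np.array([np.cos(angle_rad), np.sin(angle_rad)])


# Hypothetical geometry: the projector ray travels along -x toward the mirror array.
incoming = np.array([-1.0, 0.0])
for deg in (0, 5, 10, 15):
    out = reflect(incoming, mirror_normal(np.radians(deg)))
    out_deg = np.degrees(np.arctan2(out[1], out[0]))
    print(f"mirror {deg:2d} deg -> outgoing ray at {out_deg:6.1f} deg")
```

The doubling of the angle is what lets a modest mirror rotation sweep a wide range of viewing directions; the projector only has to switch images fast enough to stay in step with the spin.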

We propose a large-screen 3D display that multiple viewers can watch simultaneously without special glasses. Prior research proposed methods using a projector array or a swinging screen. However, the former makes it difficult to install and adjust a large number of projectors, and the latter causes vibration and noise because of the mechanical motion of the screen. Our proposed display consists of a wavelength-modulation projector and a spectroscopic screen. The screen shows images whose color depends on the viewing position. The projector projects binary images onto the screen in a time-division manner according to the wavelength of the projection light, which changes rapidly over time. By projecting the appropriate image for each viewing position, the system can therefore show 3D images to multiple viewers simultaneously. Because only one projector is used and the screen has no moving parts, the display is easy to install and produces no vibration or noise. Simulations confirmed that the proposed display can show 3D images to multiple viewers simultaneously.
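The abstract does not specify how the spectroscopic screen disperses light. Assuming, purely for illustration, that it behaves like a diffraction grating under normal incidence, the grating equation sin θ_m = sin θ_i + mλ/d maps each projection wavelength to one viewing angle, so each time slot of the wavelength sweep serves one viewing direction. The grating period below is a hypothetical value.

```python
import numpy as np


def diffraction_angle_deg(wavelength_nm, period_nm, incidence_deg=0.0, order=1):
    """Grating equation: sin(theta_m) = sin(theta_i) + m * lambda / d."""
    s = np.sin(np.radians(incidence_deg)) + order * wavelength_nm / period_nm
    if abs(s) > 1:
        raise ValueError("no propagating diffraction order for this wavelength")
    return float(np.degrees(np.arcsin(s)))


PERIOD_NM = 1600.0  # hypothetical grating period of the screen model

# One time slot per wavelength: each slot shows the binary image for one viewing angle.
schedule = [(wl, diffraction_angle_deg(wl, PERIOD_NM)) for wl in range(450, 651, 50)]
for wl, angle in schedule:
    print(f"{wl} nm -> viewing angle {angle:5.1f} deg: project binary image for this view")
```

Under this model the angular spread of viewpoints is set by the wavelength range and the grating period, which is why the projector's wavelength sweep, not a moving screen, provides the directional selectivity.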

In recent years, light field technology has attracted the interest of academia and industry because it can render 3D scenes in a more realistic and immersive way. In particular, light field displays have been extensively investigated for their ability to offer a glasses-free 3D viewing experience. Among them, tensor displays are a promising way to render light field content. However, only a few prototypes of this type of display have been built and made available to the scientific community, and as a direct consequence their visual quality has not been rigorously investigated. In this paper, we propose a new framework to assess the visual quality of light field tensor displays on conventional 2D screens. The multilayer components of a tensor display are virtually rendered on a typical 2D monitor through a GUI, and different viewing angles can be accessed with simple mouse interactions. The framework supports both single- and double-stimulus methodologies for subjective quality assessment of light field content, and it records the total interaction time for every stimulus. Results obtained in two different laboratory settings demonstrate that the framework can be successfully used for subjective quality assessment of different compression solutions for light field tensor displays.
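Virtually rendering a multilayer display for a chosen viewing angle amounts to shifting each layer by its parallax, multiplying the attenuation patterns along each ray, and averaging over the time-multiplexed frames. The 1D sketch below shows that idea under simplifying assumptions (integer pixel shifts, zero fill at the borders, layer depths given in pixel units); it is not the authors' GUI renderer, and the function names are hypothetical.

```python
import numpy as np


def shift_zero(row, s):
    """Shift a 1D array by s pixels, filling vacated pixels with 0 (opaque)."""
    out = np.zeros_like(row)
    if s > 0:
        out[s:] = row[:-s]
    elif s < 0:
        out[:s] = row[-s:]
    else:
        out[:] = row
    return out


def render_view(layers, depths_px, slope):
    """Perceived 1D view of a time-multiplexed multiplicative layer stack.

    layers: array (frames, n_layers, width) of transmittances in [0, 1].
    depths_px: layer distances from the reference plane, in pixel units.
    slope: tan(viewing angle); each layer appears shifted by round(depth * slope).
    """
    frames, n_layers, width = layers.shape
    view = np.zeros(width)
    for t in range(frames):
        product = np.ones(width)
        for k in range(n_layers):
            product *= shift_zero(layers[t, k], int(round(depths_px[k] * slope)))
        view += product
    return view / frames  # the eye integrates the time-multiplexed frames


# Usage: three random layers, two frames, rendered at two mouse-selected angles.
rng = np.random.default_rng(0)
stack = rng.uniform(0, 1, (2, 3, 32))
for angle_deg in (0.0, 5.0):
    print(angle_deg, render_view(stack, [-4.0, 0.0, 4.0], np.tan(np.radians(angle_deg)))[:4])
```

Mapping a mouse drag to `slope` is all a viewer needs to browse viewpoints, which is what makes assessment on an ordinary 2D monitor possible.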

We are studying a three-dimensional (3D) TV system based on a spatial imaging method to develop a new type of broadcasting that delivers a strong sense of presence. The spatial imaging method reproduces natural, glasses-free 3D images that change with the viewer's position by faithfully reproducing the light rays from an object. One challenge is that a 3D TV system based on spatial imaging requires a huge number of pixels to obtain high-quality 3D images. We therefore applied ultra-high-definition video technologies to the 3D TV system to improve image quality. We developed a 3D camera system that captures multi-view images of large moving objects and calculates high-precision light rays for reproducing the 3D images. We also developed a 3D display that uses multiple high-definition display devices to reproduce the light rays of high-resolution 3D images. The results show that our 3D display can present full-parallax 3D images with a resolution of more than 330,000 pixels.
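The "huge number of pixels" follows from a simple budget: if every pixel of the 3D image must emit light in several directions, the display needs one pixel per image pixel per direction. The arithmetic below assumes a lens-array style ray sampling with a hypothetical 10 × 10 full-parallax view count; only the 330,000-pixel figure comes from the text.

```python
def required_display_pixels(image_pixels: int, views_h: int, views_v: int) -> int:
    """Display pixels needed when each 3D image pixel emits views_h x views_v ray directions."""
    return image_pixels * views_h * views_v


# 330,000 is the 3D image resolution reported above; the 10 x 10 view count is hypothetical.
total = required_display_pixels(330_000, 10, 10)
print(f"{total:,} display pixels")  # 33,000,000, on the order of one 8K panel (7680 x 4320 = 33,177,600)
```

Because the budget grows with the product of spatial resolution and view count, improving either one multiplies the pixel demand, which is why tiling several high-definition devices is attractive.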

We propose a virtual-image head-up display (HUD) based on super multiview (SMV) display technology. In terms of implementation, the HUD is a compact solution, consisting of a thin form-factor SMV display and a combiner placed on the vehicle windshield. Since the display is at most a few centimeters thick, it does not need the extra installation space required by most existing virtual-image HUDs. We analyze the capabilities of the proposed system in terms of several HUD-related quality factors, such as resolution, eyebox width, and target image depth, and then verify the analysis through experiments with our SMVHUD demonstrator. We show that the proposed system can visualize images at the typical virtual-image HUD depths of 2–3 m within a reasonably large eyebox, which is slightly over 30 cm in our demonstrator. For an image at the target virtual-image depth of 2.5 m, the developed system has a field of view of 11° × 16° and a spatial resolution of around 240 × 60 pixels in the vertical and horizontal directions, respectively. The resolution nonetheless leaves plenty of room for improvement, since our demonstrator uses a moderate-resolution LCD (216 ppi) and an off-the-shelf lenticular sheet.
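Part of why panel density limits resolution is that a lenticular sheet spends several pixel columns under each lenslet on different views, leaving fewer columns per view. The sketch below computes the pixel pitch of a 216 ppi LCD and the per-view column count for a plain vertical (non-slanted) lenticular; the 6 views per lens and the 1920-column panel width are hypothetical values, not the demonstrator's parameters.

```python
def pixel_pitch_mm(ppi: float) -> float:
    """Physical pixel pitch in millimetres for a given pixel density."""
    return 25.4 / ppi


def per_view_columns(panel_columns: int, views_per_lens: int) -> int:
    """Horizontal pixels left for each view when one lenslet spans views_per_lens columns."""
    return panel_columns // views_per_lens


VIEWS_PER_LENS = 6    # hypothetical
PANEL_COLUMNS = 1920  # hypothetical

pitch = pixel_pitch_mm(216)  # 216 ppi LCD, as in the demonstrator
print(f"pixel pitch {pitch:.4f} mm, lens pitch {VIEWS_PER_LENS * pitch:.3f} mm")
print(per_view_columns(PANEL_COLUMNS, VIEWS_PER_LENS), "columns per view")
```

A denser panel shrinks the pitch and raises the per-view column count proportionally, which is the improvement path the text points to.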

We propose shearlet-decomposition-based light field (LF) reconstruction and filtering techniques for mitigating artifacts in the content visualized on 3D multiview displays. Using the LF reconstruction capability, we first obtain the densely sampled light field (DSLF) of the scene from a sparse set of view images. We design the filter by tiling the Fourier domain of the epipolar-plane image with shearlet atoms, which are directionally and spatially localized versions of the desired display passband. This makes it possible to process the DSLF in a depth-dependent manner: problematic areas of the 3D scene that lie outside the display depth of field (DoF) can be selectively filtered without sacrificing fine detail in areas near the display, i.e., inside the DoF. We test the proposed approach on a synthetic scene and verify the resulting improvement in the quality of the visualized content, simulating the visualization process with a geometrical optics model of the human eye.
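Why a Fourier-domain passband acts depth-dependently can be seen with a simplified stand-in for the shearlet tiling (not the authors' filter): a single rhombic mask applied to the 2D spectrum of an epipolar-plane image (EPI). A scene point at disparity s traces the line x = x0 + s·u in the EPI, so its spectrum lies on f_u = -s·f_x; intersecting that line with the rhombus |f_x|/b_x + |f_u|/b_u ≤ 1 gives a spatial cutoff |f_x| ≤ 1/(1/b_x + |s|/b_u), which shrinks as |s| grows. The bandwidths b_x and b_u and the function names are hypothetical.

```python
import numpy as np


def rhombic_passband(n_views, n_pixels, bx=0.5, bu=0.5):
    """Boolean mask over the EPI spectrum: True inside |fx|/bx + |fu|/bu <= 1."""
    fu = np.fft.fftfreq(n_views)[:, None]   # angular frequency, cycles per view
    fx = np.fft.fftfreq(n_pixels)[None, :]  # spatial frequency, cycles per pixel
    return np.abs(fx) / bx + np.abs(fu) / bu <= 1.0


def filter_epi(epi, bx=0.5, bu=0.5):
    """Low-pass an EPI (views x pixels) with the rhombic mask; the mask is conjugate-symmetric."""
    mask = rhombic_passband(*epi.shape, bx=bx, bu=bu)
    return np.real(np.fft.ifft2(np.fft.fft2(epi) * mask))


# Same texture (0.125 cycles/pixel) at two disparities across 8 views.
x = np.arange(64)
u = np.arange(8)[:, None]
near = np.cos(2 * np.pi * 0.125 * (x - 0 * u))  # disparity 0: inside the passband
far = np.cos(2 * np.pi * 0.125 * (x - 3 * u))   # disparity 3 px/view: outside it
print(np.abs(filter_epi(near, 0.25, 0.25)).max(), np.abs(filter_epi(far, 0.25, 0.25)).max())
```

With identical texture, the near-display copy passes untouched while the high-disparity copy is removed, which is the behavior the shearlet-based filter refines by localizing such passbands in both direction and space.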