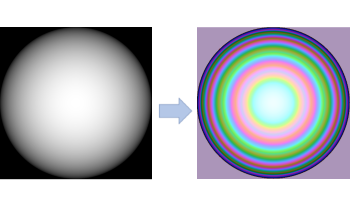

Recent advancements in 3D data capture have enabled the real-time acquisition of high-resolution 3D range data, even on mobile devices. However, this type of high bit-depth data remains difficult to transmit efficiently over a standard broadband connection. The most successful techniques for tackling this data problem thus far have been image-based depth encoding schemes that leverage modern image and video codecs. To our knowledge, no published work has directly optimized the end-to-end losses of a depth encoding scheme passing through a lossy image compression codec. In contrast, our compression-resilient neural depth encoding method leverages deep learning to efficiently encode depth maps into 24-bit RGB representations that minimize end-to-end depth reconstruction errors when compressed with JPEG. Our approach employs a fully differentiable pipeline, including a differentiable approximation of JPEG, allowing it to be trained end-to-end on the FlyingThings3D dataset with randomized JPEG qualities. On a Microsoft Azure Kinect depth recording, the neural depth encoding method significantly outperformed an existing state-of-the-art depth encoding method in terms of both root-mean-square error (RMSE) and mean absolute error (MAE) across a wide range of image qualities, while producing average file sizes over 20% smaller. Our method offers an efficient solution for emerging 3D streaming and 3D telepresence applications, enabling high-quality 3D depth data storage and transmission.
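
To make the evaluation setup concrete, the following is a minimal sketch of an image-based depth round trip and of the two error metrics named above. It is not the neural encoder described in this abstract: `pack_depth_to_rgb` and `unpack_rgb_to_depth` are hypothetical helpers that implement a naive byte-splitting baseline, assuming 16-bit depth values, and no JPEG compression is applied.

```python
import numpy as np


def pack_depth_to_rgb(depth: np.ndarray) -> np.ndarray:
    """Naive baseline (assumption, not the paper's method):
    store the high byte of 16-bit depth in R and the low byte in G."""
    depth = depth.astype(np.uint16)
    rgb = np.zeros(depth.shape + (3,), dtype=np.uint8)
    rgb[..., 0] = (depth >> 8).astype(np.uint8)    # high byte
    rgb[..., 1] = (depth & 0xFF).astype(np.uint8)  # low byte
    return rgb                                     # B channel left at zero


def unpack_rgb_to_depth(rgb: np.ndarray) -> np.ndarray:
    """Inverse of pack_depth_to_rgb: recombine the two bytes."""
    high = rgb[..., 0].astype(np.uint16)
    low = rgb[..., 1].astype(np.uint16)
    return (high << 8) | low


def rmse(reference: np.ndarray, decoded: np.ndarray) -> float:
    """Root-mean-square error between two depth maps."""
    diff = reference.astype(np.float64) - decoded.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


def mae(reference: np.ndarray, decoded: np.ndarray) -> float:
    """Mean absolute error between two depth maps."""
    diff = reference.astype(np.float64) - decoded.astype(np.float64)
    return float(np.mean(np.abs(diff)))


if __name__ == "__main__":
    depth = np.array([[0, 1000], [4095, 65535]], dtype=np.uint16)
    decoded = unpack_rgb_to_depth(pack_depth_to_rgb(depth))
    print(rmse(depth, decoded), mae(depth, decoded))
```

In an actual evaluation, the RGB image would pass through a lossy codec between packing and unpacking, and the metrics would then measure the resulting depth reconstruction error.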

Recently, volumetric video-based communications have gained considerable attention, especially with the emergence of devices that can capture scenes with 3D spatial information and display mixed-reality environments. Nevertheless, capturing the world in 3D is not an easy task: capture systems are usually composed of arrays of image sensors, sometimes paired with depth sensors. Unfortunately, these arrays are not easy for non-specialists to assemble and calibrate, which makes their use in volumetric video applications a challenge. Additionally, the cost of these systems is still high, which limits their popularity in mainstream communication applications. This work proposes a system that reconstructs the head of a human speaker from single-view frames captured using a single RGB-D camera (e.g., Microsoft's Kinect 2 device). The proposed system generates volumetric video frames with a minimum number of occluded and missing areas. To achieve good quality, the system prioritizes the data corresponding to the participants' faces, thereby preserving important information from the speakers' facial expressions. Our ultimate goal is to design an inexpensive system that can be used in volumetric video telepresence applications and even in volumetric video talk-show broadcasting applications.