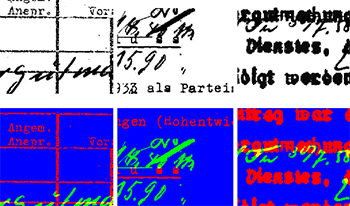

Historical archival records are difficult for OCR systems to transcribe correctly because of their visual complexity, such as printed text mixed with handwritten annotations, paper degradation, and faded ink. This paper addresses the automatic identification and separation of handwritten and printed text in historical archival documents. It contributes an artificial, pixel-level annotated dataset and a new FCN-based model trained on historical data. Initial tests on synthesised data indicate an 18% IoU improvement in the recognition of printed pixels and a 10% IoU improvement in the recognition of handwritten pixels compared with the state-of-the-art model trained on modern documents. Furthermore, an extrinsic OCR-based evaluation of the printed layer extracted from real historical documents shows a 26% performance increase.

This work discusses document security, the use of OCR, and integrity verification for printed documents. Because the documents in question usually contain sensitive personal data, a solution that does not require the complete data to be stored in a database is the best fit. To allow anyone to perform verification, all the data required for it must be contained on the document itself. The approach must also cope with different layouts, so that the layout does not have to be adapted for each document. In the following, we present a concept and its implementation that allow every smartphone user to verify the authenticity and integrity of a document.