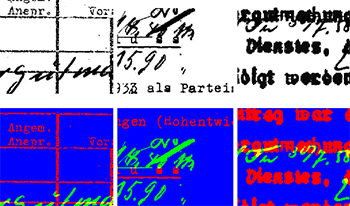

Historical archival records pose many challenges for OCR systems because of their visual complexity, e.g. mixed printed text and handwritten annotations, paper degradation, and faded ink. This paper addresses the automatic identification and separation of handwritten and printed text in historical archival documents. It covers the creation of an artificial dataset with pixel-level annotations and presents a new FCN-based model trained on historical data. Initial test results on synthesised data indicate an 18% IoU improvement in the recognition of printed pixels and a 10% IoU improvement in the recognition of handwritten pixels, compared with the state-of-the-art model trained on modern documents. Furthermore, an extrinsic OCR-based evaluation on the printed layer extracted from real historical documents shows a 26% performance increase.
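For readers unfamiliar with the metric, intersection over union (IoU) for one class is the number of pixels labelled with that class in both the prediction and the ground truth, divided by the number labelled with it in either. The following is a minimal sketch in plain Python, not the authors' evaluation code: the label encoding (`BACKGROUND`, `PRINTED`, `HANDWRITTEN`), the function name `class_iou`, and the toy masks are all assumptions made for illustration.

```python
# Hypothetical label encoding, assumed for this sketch only.
BACKGROUND, PRINTED, HANDWRITTEN = 0, 1, 2


def class_iou(pred, truth, label):
    """Return the IoU of one class over two equally shaped 2D label masks."""
    if len(pred) != len(truth) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(pred, truth)
    ):
        raise ValueError("masks must have the same shape")

    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            in_pred = p == label
            in_truth = t == label
            intersection += in_pred and in_truth  # pixel has the class in both masks
            union += in_pred or in_truth          # pixel has the class in either mask

    # IoU is undefined when the class appears in neither mask.
    return intersection / union if union else float("nan")


# Toy 2x4 masks (made-up values, not data from the paper).
truth = [
    [PRINTED, PRINTED, BACKGROUND, BACKGROUND],
    [BACKGROUND, HANDWRITTEN, HANDWRITTEN, BACKGROUND],
]
pred = [
    [PRINTED, BACKGROUND, BACKGROUND, BACKGROUND],
    [BACKGROUND, HANDWRITTEN, HANDWRITTEN, HANDWRITTEN],
]

printed_iou = class_iou(pred, truth, PRINTED)
handwritten_iou = class_iou(pred, truth, HANDWRITTEN)
```

In this toy case the printed class overlaps on one of two pixels and the handwritten class on two of three, so the per-class scores differ even though both come from the same pair of masks, which is why the abstract reports the two classes separately.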

Mahsa Vafaie, Oleksandra Bruns, Nastasja Pilz, Jörg Waitelonis, Harald Sack, "Handwritten and Printed Text Identification in Historical Archival Documents," in Archiving Conference, 2022, pp. 15-20, https://doi.org/10.2352/issn.2168-3204.2022.19.1.4
