While RGB is the status quo in machine vision, other color spaces offer higher utility in distinct visual tasks. Here, the authors investigate the impact of color spaces on the encoding capacity of a visual system that is subject to information compression, specifically variational autoencoders (VAEs) with a bottleneck constraint. To this end, they propose a framework, color conversion, that allows a fair comparison of color spaces. They systematically investigate several ColourConvNets, i.e. VAEs with different input–output color spaces, e.g. from RGB to CIE L∗a∗b∗ (five color spaces were examined in total). Their evaluations demonstrate that, in comparison to the baseline network (whose input and output are both RGB), ColourConvNets with a color-opponent output space produce higher-quality images. This is also evident quantitatively: (i) in pixel-wise low-level metrics such as color difference (ΔE), peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM); and (ii) in high-level visual tasks such as image classification (on the ImageNet dataset) and scene segmentation (on the COCO dataset), where the global content of the reconstruction matters. These findings offer a promising line of investigation for other applications of VAEs. Furthermore, they provide empirical evidence of the benefits of color-opponent representation in a complex visual system and suggest why it might have emerged in the human brain.
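
To make two of the low-level metrics named in this abstract concrete, the following is a minimal sketch of how a color difference and a PSNR value could be computed for a reconstructed image. It is not the authors' evaluation code. The abstract does not say which ΔE formula or reference white was used, so the sketch assumes the simple CIE76 formula (Euclidean distance in L∗a∗b∗) and a D65 white point. The function names `srgb_to_lab`, `delta_e_cie76` and `psnr` are hypothetical, and inputs are assumed to be sRGB values scaled to [0, 1].

```python
import numpy as np

# Assumption: D65 reference white; the abstract does not state the illuminant.
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])
# Standard linear-sRGB to CIE XYZ matrix (D65).
_RGB2XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])


def srgb_to_lab(rgb):
    """Convert sRGB values in [0, 1], shape (..., 3), to CIE L*a*b*."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Undo the sRGB transfer function to obtain linear RGB.
    linear = np.where(rgb <= 0.04045, rgb / 12.92,
                      ((rgb + 0.055) / 1.055) ** 2.4)
    # Linear RGB -> XYZ, normalized by the reference white.
    xyz = linear @ _RGB2XYZ.T / _WHITE_D65
    # Piecewise cube-root nonlinearity of the L*a*b* definition.
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz),
                 xyz / (3 * delta ** 2) + 4.0 / 29.0)
    lightness = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([lightness, a, b], axis=-1)


def delta_e_cie76(lab_ref, lab_rec):
    """Per-pixel CIE76 color difference (assumed variant of Delta E)."""
    diff = np.asarray(lab_ref, dtype=np.float64) - np.asarray(lab_rec, dtype=np.float64)
    return np.sqrt(np.sum(diff ** 2, axis=-1))


def psnr(reference, reconstruction, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images with peak value max_value."""
    reference = np.asarray(reference, dtype=np.float64)
    reconstruction = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((reference - reconstruction) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10 * np.log10(max_value ** 2 / mse)
```

For a reference image `x` and a reconstruction `y` (both sRGB arrays in [0, 1]), `delta_e_cie76(srgb_to_lab(x), srgb_to_lab(y)).mean()` gives a mean color difference (lower is better), and `psnr(x, y)` gives the PSNR in dB (higher is better).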

Recent developments in neural network image processing motivate the question of how these technologies might better serve visual artists. Research to date has largely focused either on pastiche interpretations of what is framed as artistic “style” or on divulging heretofore unimaginable dimensions of algorithmic “latent space,” but has failed to address the process an artist might actually pursue when engaged in the reflective act of developing an image from imagination and lived experience. The tools, in other words, are constituted as research demonstrations rather than as tools of creative expression. In this article, the authors explore the phenomenology of the creative environment afforded by artificially intelligent image transformation and generation, drawing on autoethnographic reviews of the authors’ individual approaches to artificial intelligence (AI) art. They offer a post-phenomenology of “neural media” such that visual artists may begin to work with AI technologies in ways that support naturalistic processes of thinking about and interacting with computationally mediated interactive creation.

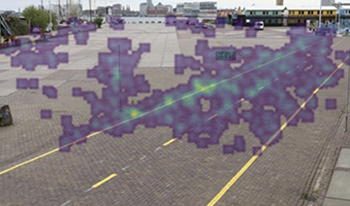

Targets that are well camouflaged under static conditions are often easily detected as soon as they start moving. We investigated and evaluated ways to design camouflage that dynamically adapts to the background and conceals the target while taking the variation in potential viewing directions into account. In a human observer experiment, recorded imagery was used to simulate moving (either walking or running) and static soldiers, equipped with different types of camouflage patterns and viewed from different directions. Participants were instructed to detect the soldier and to respond as quickly as possible once they had identified the soldier. Mean target detection rate was compared between soldiers in the standard (Netherlands) Woodland uniform, in static camouflage (adapted to the local background) and in dynamically adapting camouflage. We investigated the effects of background type and variability on detection performance by varying the soldiers’ environment (such as bushland and urban settings). In general, detection was easier for moving soldiers than for static soldiers, confirming that motion breaks camouflage. Interestingly, we show that motion onset, and not motion itself, is an important feature for capturing attention. Furthermore, camouflage performance of the static adaptive pattern was generally much better than that of the standard Woodland pattern. Camouflage performance was also found to depend on the background and the local structures around the soldier. Interestingly, our dynamic camouflage design outperformed a method that simply displays the ‘exact’ background on the camouflage suit (as if it were transparent), since it is better able to take the variability in viewing directions into account. By combining new adaptive camouflage technologies with dynamic adaptive camouflage designs such as the one presented here, it may become feasible to prevent detection of moving targets in the (near) future.