
Regular Articles

Volume: 4 | Article ID: jpi0411-edi

From the Editors

DOI: 10.2352/J.Percept.Imaging.2021.4.1.010101

Published online: January 2021

Journal title: Journal of Perceptual Imaging

Publisher: Society for Imaging Science and Technology


Cite this article

Bernice Rogowitz, Thrasos Pappas, "From the Editors" in Journal of Perceptual Imaging, 2021, pp 010101-1 - 010101-1, https://doi.org/10.2352/J.Percept.Imaging.2021.4.1.010101

Copyright statement

Copyright © Society for Imaging Science and Technology 2021

The Journal of Perceptual Imaging launched with the promise that we would publish multidisciplinary papers exploring the role of human perception and cognition across a wide range of imaging and visualization technologies. Looking over the five issues we have published so far, we can say that we have fulfilled this promise. Our authors have reported research in spatial and temporal perception; color theory, color deficiencies, color semantics, and color modeling; luminance contrast and lightness perception; texture and material perception; depth and stereopsis; motion and flicker perception; and aesthetic experience. Through this lens, they have furthered our understanding of image quality, image compression, virtual and augmented reality systems, machine learning, image analysis, and a wide range of real-world applications, including automotive and battlefield safety; product design; and planetarium, videoconference, and museum experiences. The papers take diverse approaches: theoretical, experimental, and computational. Although we are a new journal, our articles are highly cited, and we thank our Associate Editors and reviewers for their thoughtful reviews and keen focus on quality. We invite you to submit your original research at the intersection of human vision/cognition and imaging technologies to the Journal of Perceptual Imaging. To submit your manuscript, go to:

https://jpi.msubmit.net/.

We are pleased to present this issue.

The first article, by Gruening and Barth, introduces a convolutional neural network (CNN) model for image quality assessment. The novelty of their approach lies in the integration of higher-level visual mechanisms. Their Feature Product Network multiplies the outputs of two different filters applied to each image, emulating physiological "end-stopped" units, and also includes a feature that emulates visual attention.

The next two papers apply principles of visual perception to real-world problems. The paper by Glover, Gupta, Paulter, and Bovik addresses the relationship between image quality and object identification in portable X-ray systems used in the field to defuse improvised explosive devices (IEDs). Like Gruening and Barth, they test their metric against human-rated images in a database. For this specialized task, they provide a new database of pristine images of IEDs and benign objects, together with distorted versions, labeled by trained technicians. They show that performance in a target (IED) identification task is strongly related to image quality.

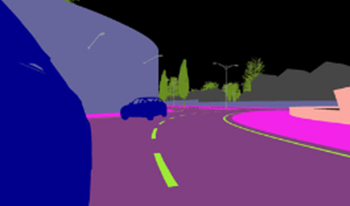

The paper by Behmann, Weddige, and Blume examines a problem encountered in modern car design: perceived flicker on digital rear-view mirrors. This effect is caused by aliasing artifacts that arise from the mismatch between sensor sampling and LED sampling. Their study introduces synthetic flicker artifacts and measures their effect on the quality of the viewing experience. The resulting tuning curves can inform modern car design and provide guidance for other technologies that use sensor/display systems, such as autonomous and augmented reality driving systems.

The final paper in this issue also addresses problems that arise when digital displays are used in real-world settings. Hung, Callahan-Flintoft, Fedele, Fluitt, Waler, Vaughan, and Wei ask how high-luminance flashes affect the readability of low-contrast letters, simulating the extreme luminance differences that can occur when we move our eyes to examine scenes in augmented reality. They find that letter detection is not strongly affected by high-luminance flashes, and they discuss implications for emerging divisive AR (ddAR) displays.

Our Special Issue on Multisensory and Cross-Modality Interactions, edited by Lora Likova (lead), Fang Jiang, Noelle Stiles, and Armand Tanguay, will appear this summer. Look forward to exciting papers exploring interactions among vision, audition, taste, touch, and smell.

If you have an idea for a special issue, which can be accompanied by a dedicated workshop at the IS&T Conference on Human Vision and Electronic Imaging, please contact the editors-in-chief at jpieditors@imaging.org.
