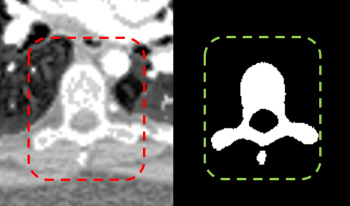

Spinal CT image segmentation is an active research topic in medical image processing. However, high variability among spinal CT slices and the presence of image artifacts make automatic and accurate segmentation extremely challenging. To address these issues, we propose MA-WNet, a cascaded U-shaped framework that combines multi-scale features and attention mechanisms for the automatic segmentation of spinal CT images. Specifically, the framework cascades two U-shaped networks that perform coarse and fine segmentation, respectively. Within each U-shaped network, we add multi-scale feature extraction modules in both the encoding and decoding phases to handle variations in spine shape across slices. Additionally, several attention mechanisms are embedded to mitigate the effects of image artifacts and irrelevant information on the segmentation results. Experimental results show that the proposed method achieves average Dice similarity coefficients of 94.53% and 91.38% on the CSI 2014 and VerSe 2020 datasets, respectively, indicating accurate segmentation performance that is valuable for potential clinical applications.
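For readers unfamiliar with the reported metric, the Dice similarity coefficient between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch of that standard definition on flattened binary masks, not the authors' evaluation code; the function name `dice_coefficient`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of elements")

    # Count voxels labelled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels in each mask, summed.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)

    if total == 0:
        # Assumption: two empty masks are treated as a perfect match.
        return 1.0
    return 2.0 * intersection / total


# Toy example (hypothetical data): 2 overlapping voxels, 3 foreground voxels each.
pred_mask = [0, 1, 1, 1, 0, 0]
true_mask = [0, 1, 1, 0, 1, 0]
print(f"Dice: {dice_coefficient(pred_mask, true_mask):.4f}")
```

A score reported as a percentage, such as 94.53%, corresponds to this value multiplied by 100 and averaged over the evaluated cases.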

Bo Wang, Lu Lu, Renyuan Gao, Zongren Chen, Xuebin Huang, "Multi-scale and Attention-driven Network for Accurate Segmentation of Spinal CT Images," in Journal of Imaging Science and Technology, 2025, pp. 1-10, https://doi.org/10.2352/J.ImagingSci.Technol.2025.69.6.060508
