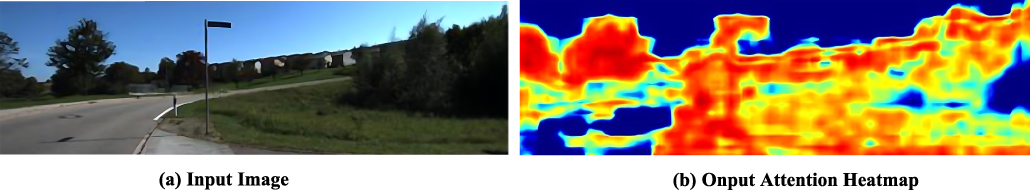

This paper presents a self-supervised monocular visual odometry (VO) method guided by attention feature maps, which reduces the influence of redundant pixels during network training. Existing self-supervised VO methods typically weight all pixels equally when computing the photometric error, which makes training sensitive to noise from irrelevant pixels and, in turn, introduces training errors. To address this issue, the authors adopt a soft-attention mechanism that generates attention feature maps, allowing the model to focus on the more relevant pixels while downweighting disruptive ones. This improves the robustness and accuracy of both depth estimation and pose tracking. The proposed method achieves competitive results on the KITTI dataset: on Sequences 09 and 10, the relative rotation errors are 0.022 and 0.032 and the relative translation errors are 5.56 and 7.29, respectively.
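The idea of downweighting pixels in the photometric error can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `soft_attention` and `weighted_photometric_error`, the sigmoid used to produce the mask, and the L1 photometric term are all assumptions chosen for illustration.

```python
import numpy as np


def soft_attention(logits):
    """Map raw per-pixel scores of shape (H, W) to soft weights in (0, 1).

    Assumption: a sigmoid is used here; the paper's attention module may differ.
    """
    return 1.0 / (1.0 + np.exp(-logits))


def weighted_photometric_error(target, warped, attention):
    """Attention-weighted mean L1 photometric error (hypothetical helper).

    target, warped: images of shape (H, W, C)
    attention:      per-pixel weights of shape (H, W), each in [0, 1]
    """
    # Per-pixel L1 difference, averaged over the colour channels.
    per_pixel = np.abs(target - warped).mean(axis=-1)
    # Normalise by the total weight so the loss scale does not depend on the mask.
    total_weight = max(float(attention.sum()), 1e-8)
    return float((attention * per_pixel).sum() / total_weight)


# Toy example: a 2x2 image pair where one pixel is badly mismatched.
target = np.zeros((2, 2, 3))
warped = target.copy()
warped[0, 0] = 1.0

uniform = np.ones((2, 2))   # every pixel treated equally
masked = uniform.copy()
masked[0, 0] = 0.0          # the disruptive pixel is suppressed

loss_uniform = weighted_photometric_error(target, warped, uniform)
loss_masked = weighted_photometric_error(target, warped, masked)
```

With uniform weights the mismatched pixel contributes fully to the loss, whereas a mask that assigns it zero weight removes its contribution entirely. A learned soft mask would sit between these two extremes.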

Bingfei Nan, "Attention Feature Map Guided Self-Supervised Monocular Visual Odometry," in Journal of Imaging Science and Technology, 2025, pp. 1-8, https://doi.org/10.2352/J.ImagingSci.Technol.2025.69.6.060403
