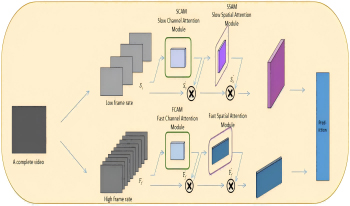

With the explosive growth of video, images, and other data, using computers to classify and analyze human actions automatically and efficiently has become increasingly important. Action recognition, the problem of perceiving and understanding the behavioral state of objects in a dynamic scene, is a fundamental task in the field of computing. However, videos that contain multiple objects or that are shot from irregular angles pose a significant challenge for existing action recognition algorithms. To address these problems, the authors propose a novel deep-learning-based method called SlowFast-Convolutional Block Attention Module (SlowFast-CBAM). Specifically, the training dataset is preprocessed using the YOLOX network, and individual frames of the action videos are fed separately into slow and fast pathways. CBAM is then incorporated into both the slow and fast pathways to highlight features and dynamics in the surrounding environment. Subsequently, the authors establish a relationship between the convolutional attention mechanism and the SlowFast network, allowing the model to focus on the distinguishing features of objects and behaviors that appear before and after different actions, thereby enabling action detection and performer recognition. Experimental results demonstrate that this approach better emphasizes the features of action performers, leading to more accurate action labeling and improved action recognition accuracy.
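The two-pathway frame split mentioned in the abstract can be illustrated with a minimal sketch. It assumes the sampling convention of the original SlowFast design, in which the fast pathway reads frames `alpha` times more densely than the slow pathway. The function name `sample_pathways` and the default values `slow_stride=16` and `alpha=8` are illustrative assumptions and are not taken from this article, which does not state its sampling rates here.

```python
def sample_pathways(num_frames, slow_stride=16, alpha=8):
    """Return (slow_indices, fast_indices) for a clip of num_frames frames.

    Hypothetical helper: slow_stride and alpha are assumed defaults,
    not values reported in the article.
    """
    if slow_stride % alpha != 0:
        raise ValueError("slow_stride must be divisible by alpha")
    # The fast pathway samples alpha times more frames than the slow one.
    fast_stride = slow_stride // alpha
    slow = list(range(0, num_frames, slow_stride))
    fast = list(range(0, num_frames, fast_stride))
    return slow, fast


# Example: a 64-frame clip split into sparse (slow) and dense (fast) samples.
slow_idx, fast_idx = sample_pathways(64)
```

Under these assumptions, the slow pathway sees a few widely spaced frames, which suits spatial detail, while the fast pathway sees many closely spaced frames, which suits motion. An attention module such as CBAM would then operate on the feature maps of each pathway rather than on these raw indices.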

Yuzhu Lin, Xutao Sun, Yonggong Ren, "Slow–Fast Convolutional Attention Network for Human Action Recognition," Journal of Imaging Science and Technology, 2025, pp. 1-10, https://doi.org/10.2352/J.ImagingSci.Technol.2025.69.5.050505
