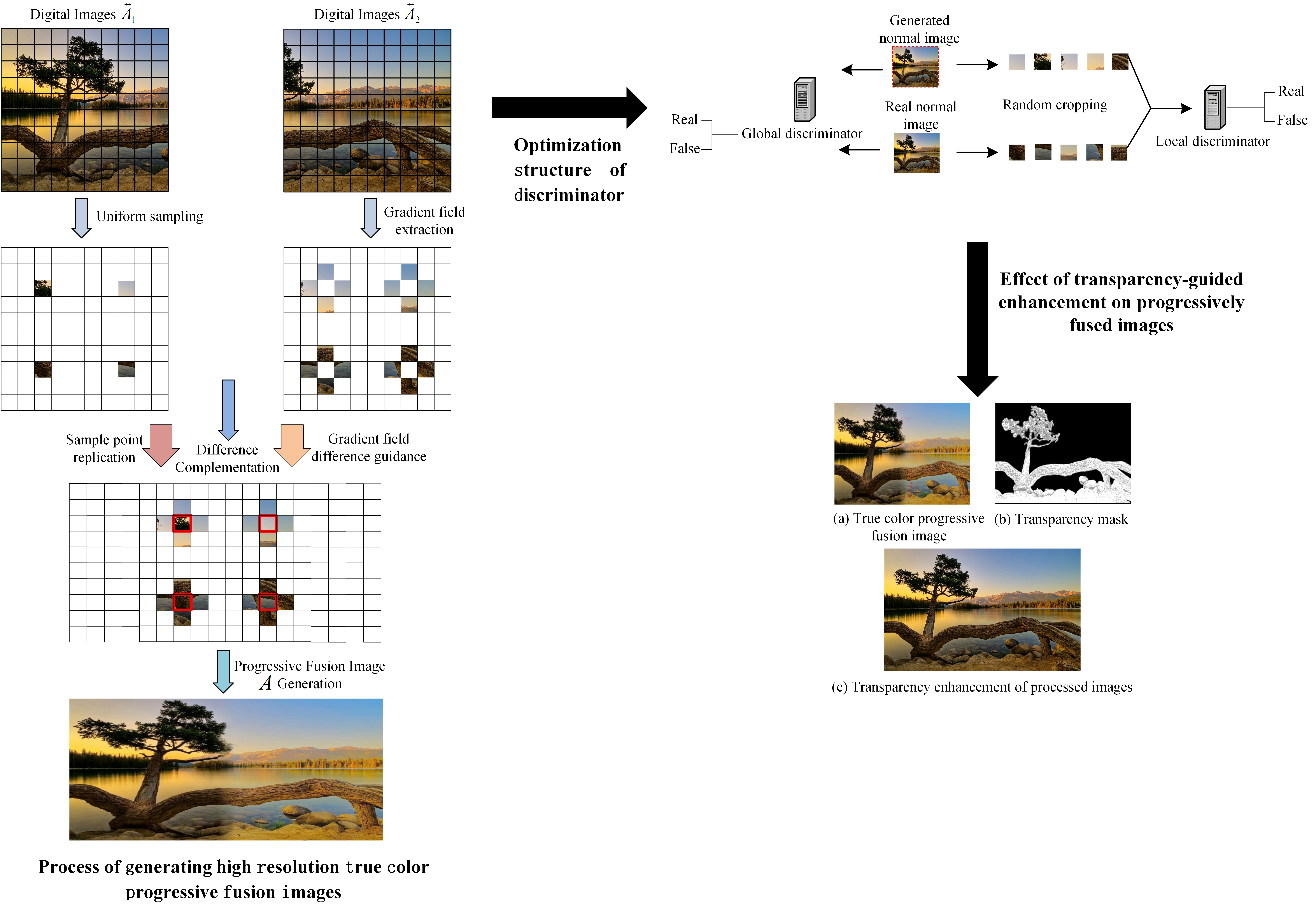

The progressive fusion algorithm enhances image boundary smoothness, preserves details, and improves visual harmony. However, problems in multi-scale fusion and improper color space conversion can cause blurred details and color distortion, so the output falls short of modern image processing standards for high-quality results. To address this, a progressive fusion image transparency-guided enhancement algorithm based on generative adversarial learning is proposed. The method combines wavelet transform with gradient field fusion to enhance image details, preserve spectral features, and generate high-resolution true-color fused images. It extracts the mean, standard deviation, and smoothness features of the image and feeds them, together with the original image, into a generative adversarial network. The optimized design introduces global context, transparency mask prediction, and a dual-discriminator structure to enhance the transparency of progressively fused images. In the experiments, the proposed method achieved an information entropy of 7.638, a blind image quality index of 24.331, a natural image quality evaluator (NIQE) value of 3.611, and a processing time of 0.036 s. These evaluation indices indicate that the method effectively restores image detail and spatial color information while avoiding artifacts, and that the processed images are of high quality with complete detail preservation.
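The statistical features named in the abstract, and the information entropy used for evaluation, can be illustrated with a minimal sketch. The abstract does not give the formulas, so the definitions here are assumptions: smoothness is taken as the common texture descriptor R = 1 - 1/(1 + σ²) with the variance normalized by (L - 1)², and information entropy as the Shannon entropy of the gray-level histogram. The function name `image_statistics` and its parameters are hypothetical and not taken from the paper.

```python
import math
from collections import Counter


def image_statistics(pixels, levels=256):
    """Return mean, standard deviation, smoothness, and entropy of a gray image.

    pixels: flat iterable of integer gray levels in [0, levels - 1].
    The smoothness and entropy definitions are assumptions (see lead-in),
    not formulas confirmed by the paper.
    """
    values = list(pixels)
    n = len(values)
    if n == 0:
        raise ValueError("image has no pixels")

    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    std = math.sqrt(variance)

    # Assumed smoothness descriptor: 0 for a constant region,
    # growing toward 1 as the normalized variance increases.
    normalized_variance = variance / (levels - 1) ** 2
    smoothness = 1.0 - 1.0 / (1.0 + normalized_variance)

    # Shannon entropy (in bits) of the gray-level histogram.
    counts = Counter(values)
    entropy = sum(-(c / n) * math.log2(c / n) for c in counts.values())

    return {
        "mean": mean,
        "std": std,
        "smoothness": smoothness,
        "entropy": entropy,
    }


if __name__ == "__main__":
    # Toy 4x4 image with four equally frequent gray levels.
    toy = [0, 85, 170, 255] * 4
    print(image_statistics(toy))
```

Under this assumed definition, an 8-bit channel has a maximum entropy of 8 bits, so the reported value of 7.638 would correspond to a gray-level distribution close to uniform.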

Le Wang, Xiaona Ding, Yinglin Zhu, "Progressive Fusion Image Transparency-Guided Enhancement Algorithm based on Generative Adversarial Learning," in Journal of Imaging Science and Technology, 2025, pp. 1-10, https://doi.org/10.2352/J.ImagingSci.Technol.2025.69.5.050501
