References

1GigilashviliD.ThomasJ.-B.HardebergJ. Y.PedersenM.2021Translucency perception: a reviewJ. Vis.211411–4110.1167/jov.21.8.4

2LiaoC.SawayamaM.XiaoB.2022Crystal or jelly? effect of color on the perception of translucent materials with photographs of real-world objectsJ. Vis.221231–23

3TanakaM.TakanashiT.HoriuchiT.2020Glossiness-aware image coding in JPEG frameworkProc. IS&T CIC28: Twenty-Eighth Color and Imaging Conf.28708470–84IS&TSpringfield, VA10.2352/J.ImagingSci.Technol.2020.64.5.050409

4AketagawaK.TanakaM.HoriuchiT.2024Influence of display sub-pixel arrays on roughness appearanceJ. Imaging Sci. Technol.6810.2352/J.ImagingSci.Technol.2024.68.6.060404

5TanakaM.AketagawaK.HoriuchiT.2024Impact of display sub-pixel arrays on perceived gloss and transparencyJ. Imaging1022110.3390/jimaging10090221

6AketagawaK.TanakaM.HoriuchiT.2025Impact of display pixel–aperture ratio on perceived roughness, glossiness, and transparencyJ. Imaging1111810.3390/jimaging11040118

7Jimenez-NavarroS.Guerrero-ViuJ.Masiaand B.

8TanakaM.AraiR.HoriuchiT.2017PuRet: Material appearance enhancement considering pupil and retina behaviorsProc. IS&T CIC25: Twenty-Fifth Color and Imaging Conf.25404740–7IS&TSpringfield, VA

9ManabeY.TanakaM.HoriuchiT.2022Bumpy appearance editing of object surfaces in digital imagesJ. Imaging Sci. Technol.6605040310.2352/J.ImagingSci.Technol.2022.66.5.050403

10GigilashviliD.TrumpyG.2022Appearance manipulation in spatial augmented reality using image differencesProc. 11th Colour & Visual Computing Symposium (CVCS2022), CEUR Workshop Proceedings32711151–15CEUR-WS TeamAachen, Germany

11TrumpyG.GigilashviliD.2023Using spatial augmented reality to increase perceived translucency of real 3D objectsProc. EUROGRAPHICS Workshop on Graphics and Cultural Heritage858885–8The Eurographics AssociationEindhoven, The Netherlands

12NagaiT.KiyokawaH.KimJ.2025Top-down effects on translucency perception in relation to shape cuesPloS One201201–2010.1371/journal.pone.0314439

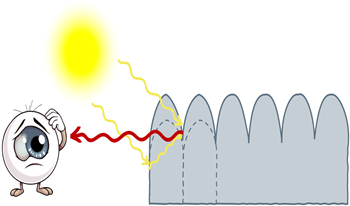

13HoY.-X.LandyM. S.MaloneyL. T.2006How direction of illumination affects visually perceived surface roughnessJ. Vis.6634648634–4810.1167/6.5.8

14PentlandA.1988Shape information from shading: a theory about human perception[1988 Proc.] Second Int’l. Conf. on Computer Vision404413404–13IEEEPiscataway, NJ

15NormanJ. F.ToddJ. T.OrbanG. A.2004Perception of three-dimensional shape from specular highlights, deformations of shading, and other types of visual informationPsychol. Sci.15565570565–7010.1111/j.0956-7976.2004.00720.x

16MarlowP. J.KimJ.AndersonB. L.2012The perception and misperception of specular surface reflectanceCurr. Biol.22190919131909–1310.1016/j.cub.2012.08.009

17HoY.-X.LandyM. S.MaloneyL. T.2008Conjoint measurement of gloss and surface texturePsychol. Sci.19196204196–20410.1111/j.1467-9280.2008.02067.x

18FlemingR. W.2014Visual perception of materials and their propertiesVis. Res.94627562–7510.1016/j.visres.2013.11.004

19PadillaS.DrbohlavO.GreenP. R.SpenceA.ChantlerM. J.2008Perceived roughness of 1/fβ noise surfacesVis. Res.48179117971791–710.1016/j.visres.2008.05.015

20ManabeY.TanakaM.HoriuchiT.2021Glossy appearance editing for heterogeneous material objects (jist-first)Electron. Imaging34

21BoyadzhievI.BalaK.ParisS.AdelsonE.2015Band-sifting decomposition for image-based material editingACM Trans. Graph. (TOG)341161–1610.1145/2809796

22ChowdhuryN. S.MarlowP. J.KimJ.2017Translucency and the perception of shapeJ. Vis.171141–1410.1167/17.3.17

23XiaoB.ZhaoS.GkioulekasI.BiW.BalaK.2020Effect of geometric sharpness on translucent material perceptionJ. Vis.201171–1710.1167/jov.20.6.1

24MarlowP. J.KimJ.AndersonB. L.2017Perception and misperception of surface opacityProc. Natl. Acad. Sci.114138401384513840–510.1073/pnas.1711416115

25MarlowP. J.de HeerB. P.AndersonB. L.2023The role of self-occluding contours in material perceptionCurr. Biol.33252825342528–3410.1016/j.cub.2023.04.056

26MotoyoshiI.2010Highlight–shading relationship as a cue for the perception of translucent and transparent materialsJ. Vis.101111–1110.1167/10.2.16

27KiyokawaH.NagaiT.YamauchiY.KimJ.2023The perception of translucency from surface glossVis. Res.20510814010.1016/j.visres.2022.108140

28HayashiH.UraM.MiyataK.2016The influence of human texture recognition and the spatial frequency of texturesITE Tech. Rep.40131613–6

29SawayamaM.DobashiY.OkabeM.HosokawaK.KoumuraT.SaarelaT. P.OlkkonenM.NishidaS.2022Visual discrimination of optical material properties: A large-scale studyJ. Vis.221241–24

30FuG.ZhangQ.LinQ.ZhuL.XiaoC.2020Learning to detect specular highlights from real-world imagesProc. 28th ACM Int’l. Conf. on Multimedia, MM ’20187318811873–81Association for Computing MachineryNew York, NY, USA

31

32BorbaJ.

33StobbeC. P.

34DragoF.MartensW.MyszkowskiK.SeidelH.-P.

35KadyrovaA.PedersenM.WestlandS.2022Effect of elevation and surface roughness on naturalness perception of 2.5D decor printsMaterials (Basel)15337210.3390/ma15093372

36Van NgoK.StorvikJ. Jr.DokkebergC. A.FarupI.PedersenM.2015QuickEval: a web application for psychometric scaling experimentsProc. SPIE939693960O

37WangZ.BovikA. C.2009Mean squared error: Love it or leave it? A new look at signal fidelity measuresIEEE Signal Process. Mag.269811798–11710.1109/MSP.2008.930649

38WangZ.BovikA. C.SheikhH. R.SimoncelliE. P.2004Image quality assessment: from error visibility to structural similarityIEEE Trans. Image Process.13600612600–1210.1109/TIP.2003.819861

39WangZ.SimoncelliE. P.BovikA. C.2003Multiscale structural similarity for image quality assessmentThe Thirty-Seventh Asilomar Conf. on Signals, Systems & Computers, 20032139814021398–402IEEEPiscataway, NJ

40ZhangL.ZhangL.MouX.ZhangD.2011FSIM: a feature similarity index for image quality assessmentIEEE Trans. Image Process.20237823862378–8610.1109/TIP.2011.2109730

41VaiopoulosA. D.2011Developing Matlab scripts for image analysis and quality assessmentProc. SPIE8181607160–71

42ReisenhoferR.BosseS.KutyniokG.WiegandT.2018A Haar wavelet-based perceptual similarity index for image quality assessmentSignal Process.: Image Commun.61334333–4310.1016/j.image.2017.11.001

43MobiniM.FarajiM. R.2024Multi-scale gradient wavelet-based image quality assessmentVis. Comput.40871387288713–2810.1007/s00371-024-03267-9

44CreteF.DolmiereT.LadretP.NicolasM.2007The blur effect: perception and estimation with a new no-reference perceptual blur metricProc. SPIE6492196206196–206

45NarvekarN. D.KaramL. J.2011A no-reference image blur metric based on the cumulative probability of blur detection (CPBD)IEEE Trans. Image Process.20267826832678–8310.1109/TIP.2011.2131660

46MittalA.MoorthyA. K.BovikA. C.2012No-reference image quality assessment in the spatial domainIEEE Trans. Image Process.21469547084695–70810.1109/TIP.2012.2214050

47MittalA.SoundararajanR.BovikA. C.2012Making a ‘completely blind’ image quality analyzerIEEE Signal Process. Lett.20209212209–1210.1109/LSP.2012.2227726

48VenkatanathN.PraneethD.ChannappayyaM. C. Bh. S. S.MedasaniS. S.2015Blind image quality evaluation using perception based features2015 21st National Conf. on Communications (NCC)161–6IEEEPiscataway, NJ10.1109/NCC.2015.7084843

49CardilloG.

50MahmoudiA.AbbasiM.YuanJ.LiL.2022Large-scale group decision-making (LSGDM) for performance measurement of healthcare construction projects: Ordinal priority approachAppl. Intell.52137811380213781–80210.1007/s10489-022-04094-y
