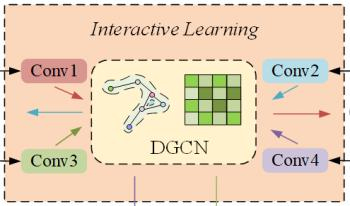

Accurate traffic flow forecasting plays a crucial role in alleviating road congestion and optimizing traffic management. Although numerous effective models have been proposed to predict future traffic flow, most are limited in how they model spatiotemporal dependencies, especially multiscale spatiotemporal relationships. To address this, we propose a novel model called Spatiotemporal Augmented Interactive Learning and Temporal Attention (STAIL-TA) for traffic flow prediction, which is designed for dynamic, interactive, and adaptive modeling of spatiotemporal features in traffic flow data. Specifically, we first design a feature augmentation layer that enhances the interaction of time-based features. Next, we introduce an interactive dynamic graph convolutional network, which uses an interactive learning strategy to capture the spatial and temporal characteristics of traffic data simultaneously. Within this network, a dynamic graph convolutional block built on a new dynamic graph generation method captures the spatial correlations that change dynamically within the traffic network. Finally, we construct a novel temporal attention mechanism that effectively leverages local contextual information and is specifically designed for transforming numerical sequence representations. This enables the prediction model to better capture the dynamic temporal dependencies of traffic flow, thus facilitating long-term forecasting. Experimental results on the PEMS-BAY dataset show that, compared with the best existing baseline, MRA-BGCN, STAIL-TA improves the mean absolute error (MAE) and root mean squared error (RMSE) by 7.75% and 3.68% for 15-minute predictions and by 5.59% and 2.72% for 30-minute predictions, respectively.
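As a minimal sketch of how the two reported metrics and a percentage improvement over a baseline might be computed, consider the following. The abstract does not state the exact formula behind its percentages, so the `relative_improvement` definition (error reduction divided by baseline error) is an assumption, and the speed readings are made-up illustrative values, not data from PEMS-BAY.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


def relative_improvement(baseline_error, model_error):
    """Percentage reduction in error relative to the baseline.

    Assumed definition: the source does not spell out how its
    percentages were derived.
    """
    return 100.0 * (baseline_error - model_error) / baseline_error


# Hypothetical readings and predictions, for illustration only.
y_true = [60.0, 62.0, 58.0, 65.0]
baseline_pred = [58.0, 65.0, 60.0, 61.0]
model_pred = [59.0, 64.0, 59.0, 63.0]

for name, metric in (("MAE", mae), ("RMSE", rmse)):
    base = metric(y_true, baseline_pred)
    ours = metric(y_true, model_pred)
    gain = relative_improvement(base, ours)
    print(f"{name}: baseline={base:.3f} model={ours:.3f} gain={gain:.2f}%")
```

Under this definition, a positive percentage means the model's error is lower than the baseline's; because RMSE penalizes large errors more heavily than MAE, the two percentages generally differ.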

Linlong Chen, Hongyan Wang, Linbiao Chen, Jian Zhao, "Spatiotemporal Augmented Interactive Learning and Temporal Attention for Traffic Flow Prediction" in Journal of Imaging Science and Technology, 2026, pp 1 - 13, https://doi.org/10.2352/J.ImagingSci.Technol.2026.70.2.020503
