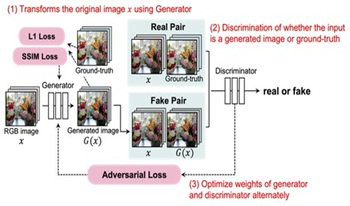

To address the low completeness of scene reconstruction achieved by existing methods in challenging areas with weak texture, no texture, or non-diffuse reflection, this paper proposes a high-completeness multiview stereo network that combines a light multiscale feature adaptive aggregation module (LightMFA2), SoftPool, and a sensitive global depth consistency checking method. LightMFA2 is designed to adaptively learn critical information from the generated multiscale feature maps, which eases the difficulty of extracting features in challenging areas. SoftPool is used in the regularization process to downsample the 2D cost matching map, which reduces information redundancy, preserves useful information, and speeds up network computation. The sensitive global depth consistency checking method filters depth outliers: it discards pixels with a confidence below 0.35 and then computes the pixel reprojection error and the depth reprojection error of the remaining pixels. Experimental results on the Technical University of Denmark (DTU) dataset show that the proposed network significantly improves completeness and overall quality, achieving a completeness error of 0.2836 mm and an overall error of 0.3665 mm.
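The abstract names the ingredients of the depth filter (a 0.35 confidence cutoff, a pixel reprojection error, and a depth reprojection error) but not the exact procedure. The NumPy sketch below shows one common forward-backward reprojection check of this kind. It assumes a shared pinhole intrinsic matrix `K`, a reference-to-source pose `R`, `t`, nearest-neighbour depth lookup, and illustrative thresholds `max_pix_err=1.0` and `max_rel_depth_err=0.01`; the function names `consistent_mask` and `global_mask` and the `min_views` voting rule are hypothetical, not the paper's.

```python
import numpy as np

CONF_THRESHOLD = 0.35  # confidence cutoff stated in the abstract


def consistent_mask(depth_ref, conf_ref, depth_src, K, R, t,
                    max_pix_err=1.0, max_rel_depth_err=0.01):
    """Mask of reference pixels that pass the confidence filter and a
    forward-backward check against ONE source view.
    Assumed convention: X_src = R @ X_ref + t (thresholds are illustrative)."""
    h, w = depth_ref.shape
    K_inv = np.linalg.inv(K)
    t = np.asarray(t, dtype=float).reshape(3, 1)
    d_ref = depth_ref.ravel()
    xs, ys = np.meshgrid(np.arange(w), np.arange(h))
    pix = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # (3, h*w)

    # Reference pixel -> 3D point -> source camera -> source pixel (u, v).
    p_src = K @ (R @ (K_inv @ pix * d_ref) + t)
    u, v = p_src[0] / p_src[2], p_src[1] / p_src[2]
    inside = (u >= -0.5) & (u < w - 0.5) & (v >= -0.5) & (v < h - 0.5)

    # Nearest-neighbour lookup of the source depth (bilinear is also common).
    ui = np.clip(np.round(u).astype(int), 0, w - 1)
    vi = np.clip(np.round(v).astype(int), 0, h - 1)
    d_src = depth_src[vi, ui]

    # Source pixel -> 3D point -> back into the reference camera.
    X_back = R.T @ (K_inv @ np.stack([u, v, np.ones(h * w)]) * d_src - t)
    p_back = K @ X_back
    u2, v2 = p_back[0] / p_back[2], p_back[1] / p_back[2]

    pix_err = np.hypot(u2 - pix[0], v2 - pix[1])        # pixel reprojection error
    depth_err = np.abs(X_back[2] - d_ref) / d_ref       # relative depth reprojection error

    ok = ((conf_ref.ravel() >= CONF_THRESHOLD) & inside
          & (pix_err < max_pix_err) & (depth_err < max_rel_depth_err))
    return ok.reshape(h, w)


def global_mask(per_view_masks, min_views=2):
    """Hypothetical global rule: keep a pixel if enough source views agree."""
    return np.sum(np.stack(per_view_masks), axis=0) >= min_views
```

Bilinear depth sampling and per-view intrinsics are natural refinements; the actual thresholds and view count that make the paper's check "sensitive" are not given in the abstract.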

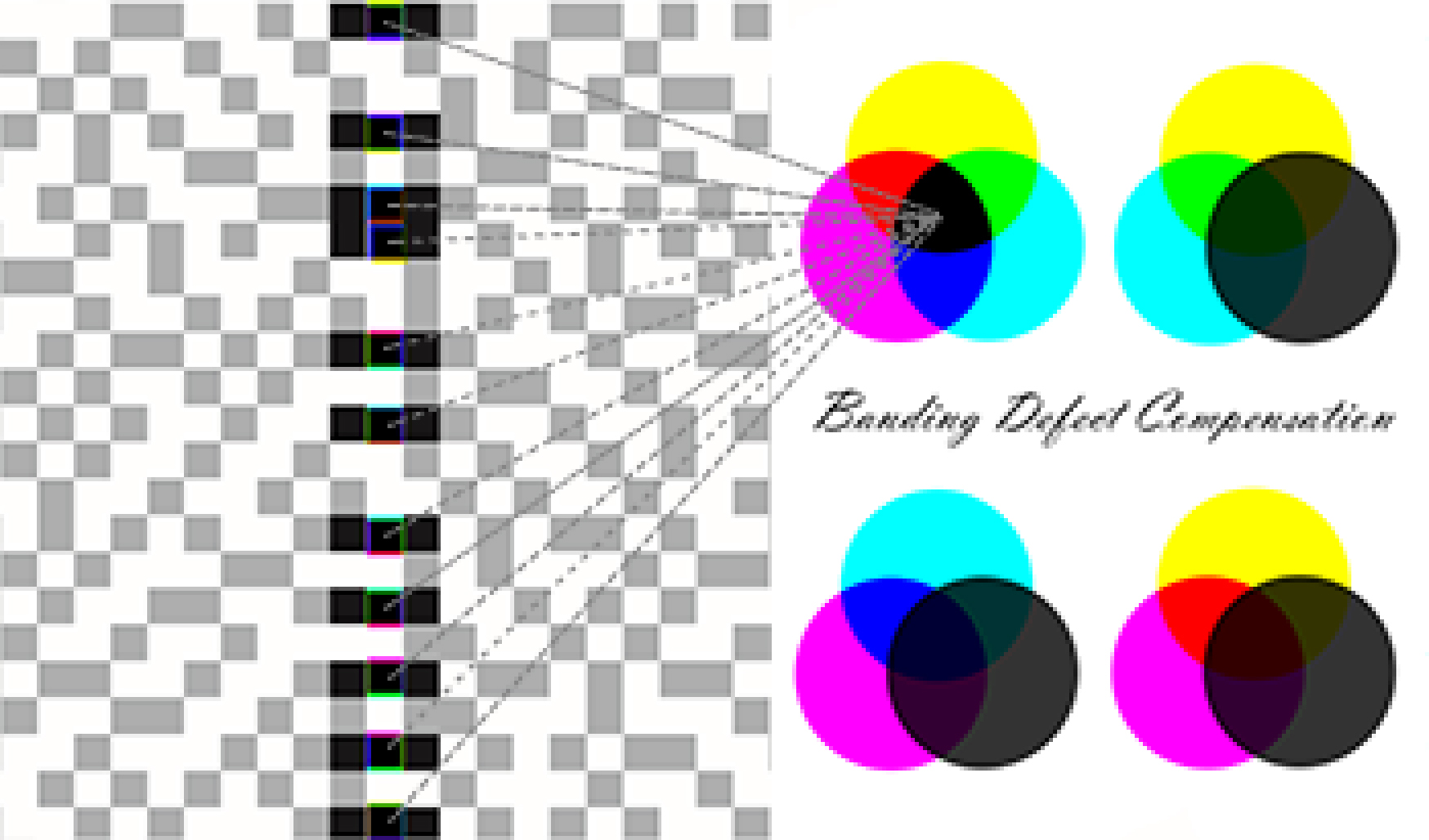

This study presents a compensation technique for banding defects in piezoelectric single-pass inkjet printing, a common problem caused by nozzle malfunctions such as clogging, misfiring, and failure. The technique uses visual perception models to dynamically optimize the dot density and ink droplet size adjacent to the defective nozzle in the halftone image. It incorporates a color difference compensation algorithm based on the CIEDE2000 color-difference formula, which keeps ink coloration uniform across all channels and reduces density and color disparities. By adjusting the dot matrix of each printing channel at the malfunctioning nozzle, the method minimizes color and density differences, making the banding defects nearly imperceptible. Experiments show a significant improvement in the visual quality of printed outputs for both monochromatic and complex color images. Importantly, the compensation mechanism is hardware-independent, so it can be integrated easily into existing digital printing workflows. The approach could also be applied beyond printing, wherever precise color and density control is critical.
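The compensation algorithm is scored with CIEDE2000, but the abstract does not show how. As a minimal sketch, the function below implements the standard CIEDE2000 color-difference formula between two CIELAB colors, with the usual parametric factors `kL = kC = kH = 1`; how the paper feeds these differences back into dot density and droplet size is not described, and the example colors are illustrative only.

```python
import math


def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """Standard CIEDE2000 color difference between two (L*, a*, b*) colors."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Adjust a* so that near-neutral colors are handled better.
    C_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    G = 0.5 * (1 - math.sqrt(C_bar**7 / (C_bar**7 + 25**7)))
    a1p, a2p = (1 + G) * a1, (1 + G) * a2
    C1p, C2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360 if C1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360 if C2p else 0.0

    # Differences in lightness, chroma, and hue.
    dLp = L2 - L1
    dCp = C2p - C1p
    if C1p * C2p == 0:
        dhp = 0.0
    else:
        dhp = h2p - h1p
        if dhp > 180:
            dhp -= 360
        elif dhp < -180:
            dhp += 360
    dHp = 2 * math.sqrt(C1p * C2p) * math.sin(math.radians(dhp / 2))

    # Means, with the hue mean taken around the circle.
    Lbp = (L1 + L2) / 2
    Cbp = (C1p + C2p) / 2
    if C1p * C2p == 0:
        hbp = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        hbp = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        hbp = (h1p + h2p + 360) / 2
    else:
        hbp = (h1p + h2p - 360) / 2

    # Weighting functions and the hue-chroma rotation term.
    T = (1 - 0.17 * math.cos(math.radians(hbp - 30))
         + 0.24 * math.cos(math.radians(2 * hbp))
         + 0.32 * math.cos(math.radians(3 * hbp + 6))
         - 0.20 * math.cos(math.radians(4 * hbp - 63)))
    d_theta = 30 * math.exp(-(((hbp - 275) / 25) ** 2))
    R_C = 2 * math.sqrt(Cbp**7 / (Cbp**7 + 25**7))
    S_L = 1 + 0.015 * (Lbp - 50) ** 2 / math.sqrt(20 + (Lbp - 50) ** 2)
    S_C = 1 + 0.045 * Cbp
    S_H = 1 + 0.015 * Cbp * T
    R_T = -math.sin(math.radians(2 * d_theta)) * R_C

    tL, tC, tH = dLp / (kL * S_L), dCp / (kC * S_C), dHp / (kH * S_H)
    return math.sqrt(tL**2 + tC**2 + tH**2 + R_T * tC * tH)
```

In a compensation loop, one would compare the measured or predicted Lab value of a compensated region with its target and keep adjusting until the difference falls below a chosen tolerance.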

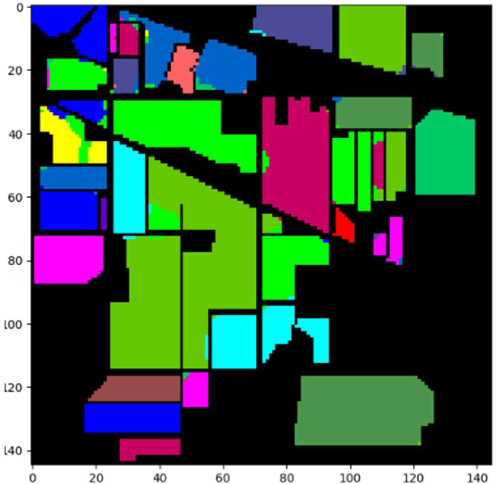

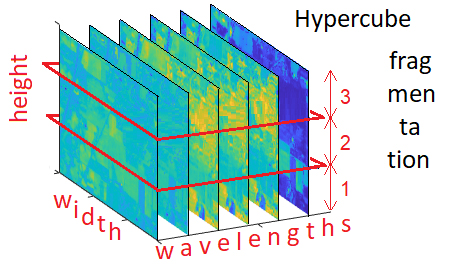

Hyperspectral imagery is important because it records many narrow spectral bands, which provide detailed information about the objects in a scene. Because a hyperspectral image contains so many bands, identifying and classifying objects in it manually is laborious. Existing hyperspectral image classification methods suffer from limited spatial resolution and reduced classification accuracy. To overcome these issues, this article presents a hybrid deep convolutional neural network (HDCNN) for the automatic processing and analysis of hyperspectral images. The HDCNN consists of fuzzy-based convolutional neural networks (FBCNNs) and variational autoencoders (VAEs). In addition, non-negative matrix factorization is used for feature extraction and dimensionality reduction. In this approach, the FBCNN classifies hyperspectral images automatically while accounting for the uncertainty and vagueness in the data, and the VAE is used to detect anomalies and to generate new data with meaningful characteristics. The experimental results show that the FBCNN improves classification accuracy and performs better in terms of accuracy, precision, sensitivity, and recall. The proposed FBCNN achieves an accuracy of 97.1%, a sensitivity of 91.47%, a precision of 90.86%, and a recall of 88.5%.
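Non-negative matrix factorization is the step in this pipeline with a standard, well-known formulation, so it is the one sketched here. The code flattens a cube of shape (rows, cols, bands) into a pixel-by-band matrix and factors it with the Lee and Seung multiplicative updates for the Frobenius loss. The number of components `k`, the iteration count, and the random initialisation are assumptions, and the names `nmf` and `hsi_features` are hypothetical; the paper may use a different NMF solver or loss.

```python
import numpy as np


def nmf(X, k, n_iter=200, eps=1e-10, seed=0):
    """Factor non-negative X (n_samples, n_features) as W @ H using
    Lee-Seung multiplicative updates for the Frobenius loss."""
    rng = np.random.default_rng(seed)
    n, m = X.shape
    W = rng.random((n, k)) + eps
    H = rng.random((k, m)) + eps
    for _ in range(n_iter):
        # Multiplicative updates keep every entry non-negative.
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H


def hsi_features(cube, k):
    """Reduce a (rows, cols, bands) hyperspectral cube to k non-negative
    features per pixel; also return the k spectral basis vectors."""
    rows, cols, bands = cube.shape
    X = cube.reshape(rows * cols, bands).astype(float)
    W, H = nmf(X, k)
    return W.reshape(rows, cols, k), H
```

Each pixel's row of `W` is a compact, non-negative feature vector that a downstream classifier could consume in place of the full spectrum, and the rows of `H` are the learned spectral basis vectors.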

The exponential growth of Earth observation (EO) data offers unprecedented support for spatial analysis and decision-making, but it also poses significant challenges for data organization, management, and use. Organizing heterogeneous EO image data into analysis-ready tiles can significantly improve the efficiency of data use. However, existing methods lack approaches tailored to specific themes as well as effective update mechanisms. To address these challenges, this study proposes a thematic tile data cube model that is organized around themes and uses Global Subdivision Grids within an adaptive data cube framework. The model updates tiles with a finite state machine, so that tiles can be organized and updated to meet specific thematic needs precisely, which helps in creating analysis-ready datasets. Using the prediction of cold-water coral distribution in the North Atlantic as a case study, this research demonstrates the standardization and tiling of environmental variable data as well as the simulation of batch data updates. Predictive analysis with a random forest model on two thematic tile data cubes shows a significant improvement in prediction accuracy after the updates. The results validate the effectiveness of the thematic tile data cube model for structuring and updating data. This study presents a novel approach for efficiently organizing and updating image tile data, providing users with customized analysis-ready tile datasets that are continuously updated.
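The abstract says tiles are updated with a finite state machine but does not list its states or events. The sketch below is a hypothetical FSM for a single tile: the states `READY`, `STALE`, `UPDATING`, and `FAILED`, the events, the string grid codes, and the `batch_update` helper are all illustrative assumptions, not the paper's design. It shows the core idea of an explicit transition table that rejects illegal update sequences.

```python
from enum import Enum, auto


class TileState(Enum):
    READY = auto()     # tile is analysis-ready and current (hypothetical)
    STALE = auto()     # newer source data has arrived for this grid cell
    UPDATING = auto()  # tile is being regenerated
    FAILED = auto()    # regeneration failed; waiting for a retry


class Event(Enum):
    NEW_DATA = auto()
    START = auto()
    SUCCEED = auto()
    FAIL = auto()
    RETRY = auto()


# Explicit transition table: (current state, event) -> next state.
TRANSITIONS = {
    (TileState.READY, Event.NEW_DATA): TileState.STALE,
    (TileState.STALE, Event.NEW_DATA): TileState.STALE,
    (TileState.STALE, Event.START): TileState.UPDATING,
    (TileState.UPDATING, Event.SUCCEED): TileState.READY,
    (TileState.UPDATING, Event.FAIL): TileState.FAILED,
    (TileState.FAILED, Event.RETRY): TileState.STALE,
}


class Tile:
    def __init__(self, grid_code):
        self.grid_code = grid_code  # hypothetical subdivision-grid cell code
        self.state = TileState.READY
        self.version = 0

    def fire(self, event):
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"illegal event {event.name} in state {self.state.name}")
        self.state = TRANSITIONS[key]
        if key == (TileState.UPDATING, Event.SUCCEED):
            self.version += 1  # a successful rebuild publishes a new version
        return self.state


def batch_update(tiles, affected_codes, regenerate):
    """Mark tiles touched by a data batch as stale, then rebuild them."""
    for tile in tiles:
        if tile.grid_code in affected_codes:
            tile.fire(Event.NEW_DATA)
    for tile in tiles:
        if tile.state is TileState.STALE:
            tile.fire(Event.START)
            tile.fire(Event.SUCCEED if regenerate(tile) else Event.FAIL)
```

Keeping the transitions in one table makes it easy to audit which update sequences are allowed and to add states, for example for partial updates, without scattering checks through the code.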

As an unsupervised over-segmentation technique, superpixel decomposition is a popular preprocessing step that partitions an image into nonoverlapping regions, simplifying subsequent image analysis. Despite various attempts to improve superpixel quality, most approaches still rely on conventional grid-sampling-based seed initialization, which limits regional representation in several typical applications. In this work, the authors present a new sequential seeding strategy (SSS) to further improve the segmentation performance of simple noniterative clustering (SNIC) superpixels. First, they use conventional grid-level sampling (GLS) to establish an MEM by placing half of the expected seeds. To capture more image detail, they then sequentially sample new seeds at the midpoint of the pair of seeds with the strongest correlation, which is quantified by a novel linear-path-based measurement. During this process, the spatial relationships among all seeds are described by a dynamic region adjacency graph. Compared with conventional GLS initialization, the SSS accounts for both the regularity of the global seed distribution and the complexity of the local context. It therefore adapts better to the varying content of an image and suits many superpixel generation frameworks that require seeds. Extensive experiments verify that the SSS considerably improves the regional description of SNIC superpixels in terms of several quantitative metrics and control over the number of superpixels. Furthermore, the integrated framework enables multiscale adaptation in superpixel generation, as demonstrated by its application to airport scene decomposition.
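The seeding procedure can be sketched as a loop: place half the seeds on a grid, then repeatedly pick the highest-scoring pair of neighbouring seeds and add a seed at their midpoint. The abstract does not define the linear-path measurement or the adjacency graph, so both are hypothetical stand-ins here: `path_score` is the mean absolute intensity change sampled along the straight segment between two seeds, and two seeds count as adjacent when they are at most 1.5 grid steps apart, recomputed every iteration. All function names are illustrative.

```python
import numpy as np


def grid_seeds(h, w, n):
    """Grid-level sampling (GLS): place roughly n seeds on a regular grid."""
    step = max(1, int(np.sqrt(h * w / n)))
    ys = np.arange(step // 2, h, step)
    xs = np.arange(step // 2, w, step)
    return [(int(y), int(x)) for y in ys for x in xs], step


def path_score(img, p, q, samples=16):
    """HYPOTHETICAL stand-in for the paper's linear-path measurement:
    mean absolute intensity change along the segment from p to q."""
    t = np.linspace(0.0, 1.0, samples)
    ys = np.round(p[0] + t * (q[0] - p[0])).astype(int)
    xs = np.round(p[1] + t * (q[1] - p[1])).astype(int)
    vals = img[ys, xs].astype(float)
    return float(np.abs(np.diff(vals)).mean())


def sequential_seeds(img, n_total):
    """Start from GLS with half the seeds, then add midpoint seeds one by one."""
    h, w = img.shape
    seeds, step = grid_seeds(h, w, n_total // 2)
    used = set()  # pairs already split, so the same midpoint is not retried
    while len(seeds) < n_total:
        best, best_pair = -1.0, None
        for i in range(len(seeds)):
            for j in range(i + 1, len(seeds)):
                p, q = seeds[i], seeds[j]
                # Hypothetical adjacency rule, re-evaluated as seeds are added.
                if (p, q) in used or np.hypot(p[0] - q[0], p[1] - q[1]) > 1.5 * step:
                    continue
                s = path_score(img, p, q)
                if s > best:
                    best, best_pair = s, (p, q)
        if best_pair is None:
            break  # no unsplit adjacent pair remains
        used.add(best_pair)
        p, q = best_pair
        mid = ((p[0] + q[0]) // 2, (p[1] + q[1]) // 2)
        if mid not in seeds:
            seeds.append(mid)
    return seeds
```

The resulting seed list could then initialise a SNIC-style clustering. The quadratic pair search is kept for clarity; a real implementation would maintain the adjacency graph incrementally.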

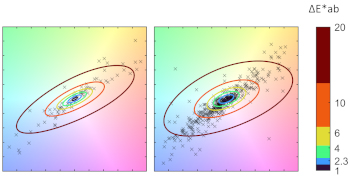

The Monk Skin Tone (MST) scale, comprising ten digitally defined colors, represents a diverse range of skin tones and is utilised by Google to promote social equity. The MST scale also has the potential to address health disparities in the medical field. However, these colors must be printed as physical charts for health practitioners and researchers to conduct visual comparisons. This study examines the colorimetric characteristics of the MST scale and establishes two per-patch acceptance criteria to determine the acceptability of a printed MST chart using a four-tier grading method. A software tool was developed to assist end users in printing accurate MST charts. The tool was tested with four printer/paper combinations, including inkjet, dye sublimation, and laser printers. Results show that a wide color gamut covering the brightest and darkest MST levels is crucial for producing an accurate MST chart, which can be achieved with a consumer-grade inkjet printer and glossy paper.