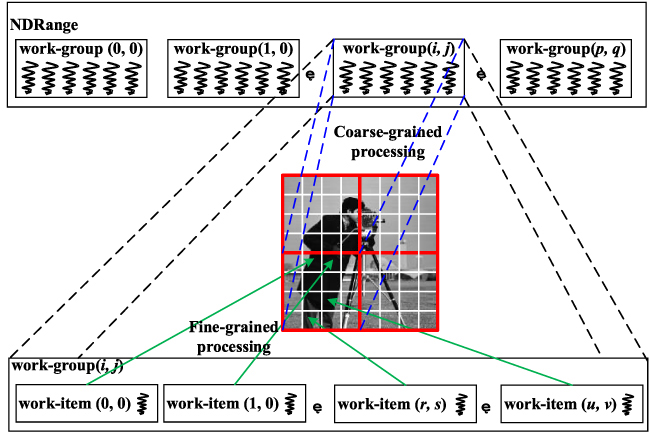

Edge detection algorithms are widely used in image segmentation, image fusion, computer vision, and other fields. The traditional LoG (Laplacian of Gaussian) edge extraction algorithm has limitations such as slow computation and heavy use of host resources. To overcome these limitations, a parallel LoG edge extraction algorithm based on OpenCL is designed and implemented. First, the Gaussian filtering and the Laplacian differential operation in the LoG algorithm are parallelized, which increases the parallelism of the algorithm at the level of its structure. At the same time, the GPU's many-core architecture is used to process each sub-image block in parallel, with each work-item responsible for one pixel so that all pixels are processed in parallel. In addition, strategies such as vectorized memory access, local memory optimization, and resource optimization are adopted to further improve kernel performance. The experimental results show that the optimized parallel LoG algorithm achieves speedups of 21.9 times, 4 times, and 1.09 times over the serial CPU algorithm, the OpenMP parallel algorithm, and the CUDA parallel algorithm, respectively. These results verify the feasibility, effectiveness, and portability of the algorithm, which has good prospects for engineering applications.

Huikang Tang, Han Xiao, Qinglei Zhou, Cailin Li, "Research on GPU Implementation and Optimization Technology of LoG Edge Extraction Algorithm," in Journal of Imaging Science and Technology, 2024, pp. 1–16, https://doi.org/10.2352/J.ImagingSci.Technol.2024.68.1.010507
