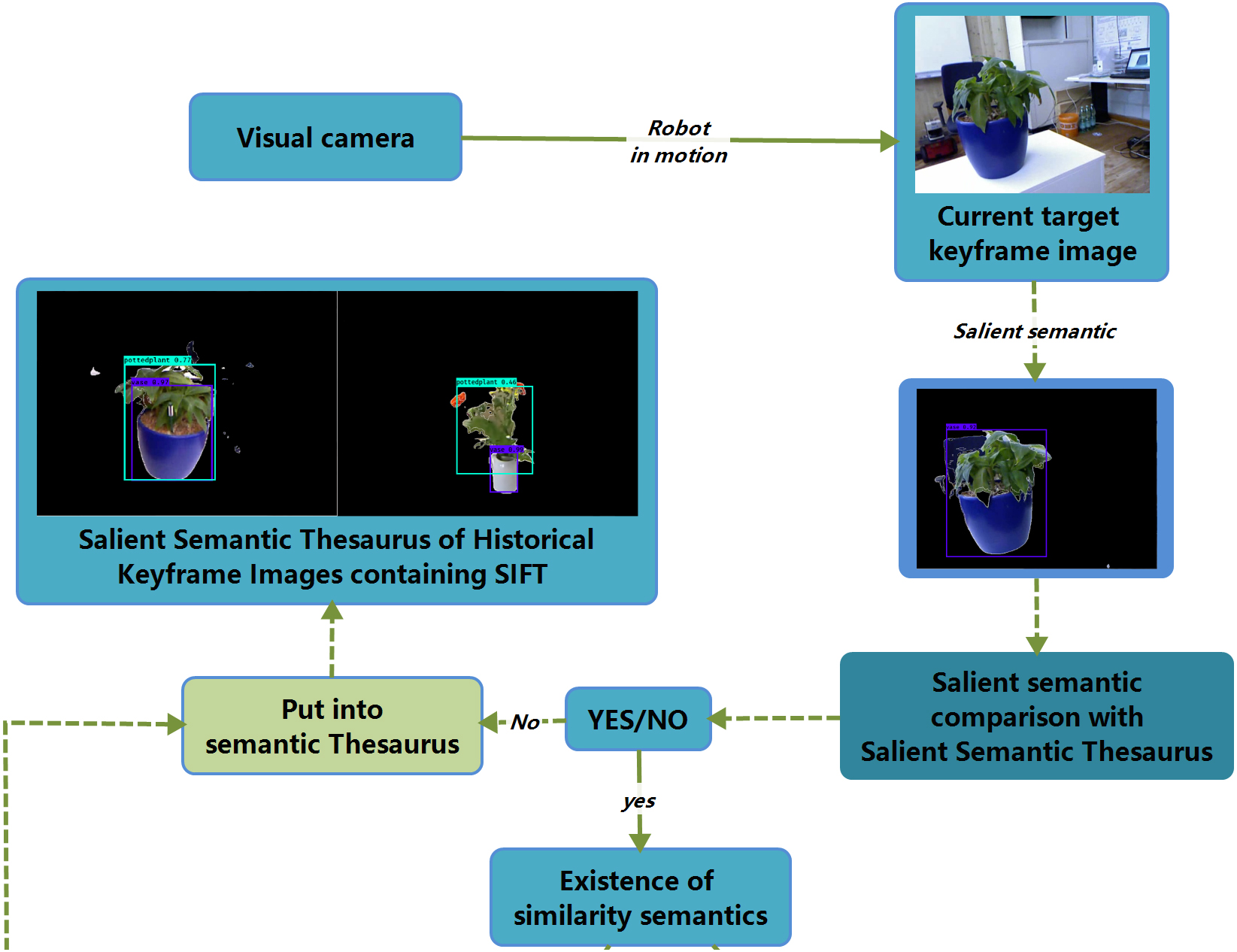

Vision-based closed-loop detection can effectively eliminate the cumulative error of a robot's visual odometry. The mainstream research routes for closed-loop detection, based on bag of visual words (BoVW) and on semantics, face some bottlenecks. We propose a salient Semantic-SIFT approach to improve this task, in which (1) a salient-object detection neural network filters out excessive scene background information; (2) a neural network labels the semantics of the salient objects; (3) the detected salient semantics are compared with a thesaurus to obtain potential closed-loop scenes; and (4) similarity is calculated from SIFT features to verify the candidates. Our algorithm combines semantic and SIFT features so that their strengths complement each other. Compared with panoramic-semantic and BoVW approaches, our algorithm saves computation by comparing only the scene's salient semantics, which simplifies semantic word-bag construction and map description as well as the SIFT similarity comparison between image pairs. Experiments show that the proposed algorithm performs well on the evaluation metrics and achieves excellent real-time performance for closed-loop detection compared with several recent prominent works. The proposed coarse-to-fine fusion of semantic and SIFT features offers a new research direction for closed-loop detection.
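The coarse-to-fine idea in steps (3) and (4) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: each keyframe's salient-object labels are assumed to be available already as a set of strings (steps 1 and 2 are out of scope), the semantic comparison uses Jaccard overlap as a stand-in for the paper's thesaurus matching, and SIFT verification is approximated by counting nearest-neighbour matches that pass Lowe's ratio test on plain descriptor vectors. The names `semantic_candidates`, `ratio_test_matches` and `detect_loop`, and the parameters `sem_threshold`, `min_matches` and `ratio`, are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Set, Tuple

# A descriptor is any fixed-length float vector (real SIFT descriptors are 128-d).
Descriptor = Sequence[float]


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap of two salient-label sets (assumed measure, not the paper's)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def semantic_candidates(query: Set[str], db: Dict[int, Set[str]],
                        threshold: float = 0.5) -> List[int]:
    """Coarse stage: keep keyframes whose salient labels overlap the query enough."""
    scored = [(jaccard(query, labels), fid) for fid, labels in db.items()]
    # Highest overlap first; ties broken by frame id for a deterministic order.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [fid for score, fid in scored if score >= threshold]


def ratio_test_matches(desc_a: List[Descriptor], desc_b: List[Descriptor],
                       ratio: float = 0.75) -> int:
    """Fine stage: count descriptors in desc_a whose nearest neighbour in desc_b
    is clearly closer than the second nearest (Lowe's ratio test)."""
    if len(desc_b) < 2:
        return 0
    good = 0
    for d in desc_a:
        dists = sorted(math.dist(d, e) for e in desc_b)
        if dists[0] < ratio * dists[1]:
            good += 1
    return good


def detect_loop(query_labels: Set[str], query_desc: List[Descriptor],
                db_labels: Dict[int, Set[str]],
                db_desc: Dict[int, List[Descriptor]],
                sem_threshold: float = 0.5,
                min_matches: int = 3) -> Optional[int]:
    """Return the keyframe id of the verified loop closure, or None."""
    best: Optional[Tuple[int, int]] = None  # (match count, frame id)
    # Descriptor matching runs only on the semantic candidates, not every frame.
    for fid in semantic_candidates(query_labels, db_labels, sem_threshold):
        n = ratio_test_matches(query_desc, db_desc[fid])
        if n >= min_matches and (best is None or n > best[0]):
            best = (n, fid)
    return None if best is None else best[1]


if __name__ == "__main__":
    labels = {1: {"chair", "monitor"}, 2: {"tree", "car"},
              3: {"chair", "monitor", "lamp"}}
    descs = {1: [(0.1, 0.0), (10.0, 0.1), (0.0, 10.1), (50.0, 50.0)],
             2: [(5.0, 5.0), (6.0, 6.0)],
             3: [(100.0, 100.0), (101.0, 100.0)]}
    query = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    print(detect_loop({"chair", "monitor"}, query, labels, descs))
```

The design point this illustrates is the one the abstract argues for: the cheap set comparison prunes most keyframes, so the more expensive descriptor matching runs only on the few semantic candidates. In practice the descriptors would come from a SIFT extractor rather than hand-written toy vectors.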

Lihe Hu, Yi Zhang, Yang Wang, "Salient Semantic-SIFT for Robot Visual SLAM Closed-loop Detection," in Journal of Imaging Science and Technology, 2024, pp. 1-16, https://doi.org/10.2352/J.ImagingSci.Technol.2024.68.1.010502
