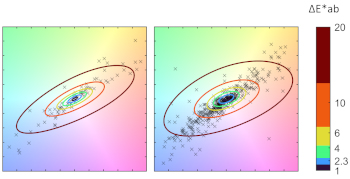

Algorithms for computational color constancy are usually compared in terms of the angular error between the ground-truth and the estimated illuminant. Despite this wide adoption, there is no well-defined consensus on acceptability or noticeability thresholds for angular errors. One of the main reasons for this lack of consensus is that angular error weighs all hues equally, because the comparison is performed in a non-perceptual color space, whereas the sensitivity of the human visual system is known to vary with chromaticity. We therefore propose a visualization strategy that simultaneously presents the angular error (retained because of its wide adoption in the field) and a perceptual error (to convey its actual perceived impact). This is achieved by exploiting the angle-retaining chromaticity diagram, which shows errors in chromaticity while encoding RGB angular distances as 2D Euclidean distances, and by embedding contour lines of perceptual color differences at standard predefined thresholds. Example applications are shown for different color constancy methods on two imaging devices.
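The contrast drawn above, between a hue-blind angular error and a chromaticity-dependent perceptual error, can be illustrated with a short sketch. It is not the paper's implementation. It computes the recovery angular error (angle between ground-truth and estimated illuminant RGB vectors) and the reproduction angular error, and it uses CIE 1976 ΔE∗ab as the perceptual measure under a loudly hypothetical assumption: the RGB triplets are treated as linear sRGB with a D65 white and scaled to unit luminance, so that only chromaticity is compared. Real camera-raw data, as in the paper's two devices, would need a device-specific transform instead. The helpers `rgb_to_lab` and `hue_sweep` are hypothetical names; `hue_sweep` tilts a neutral estimate by exactly 3° toward every hue direction.

```python
import math

def angle_deg(u, v):
    """Angle between two RGB vectors in degrees, via atan2(|u x v|, u . v)."""
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.atan2(math.sqrt(sum(c * c for c in cross)), dot))

def recovery_error(gt, est):
    """Recovery angular error: angle between ground-truth and estimated illuminant."""
    return angle_deg(gt, est)

def reproduction_error(gt, est):
    """Reproduction angular error: angle between the white surface corrected
    with the estimate (gt / est, per channel) and the achromatic axis."""
    corrected = tuple(g / e for g, e in zip(gt, est))
    return angle_deg(corrected, (1.0, 1.0, 1.0))

# ASSUMPTION: RGB is linear sRGB (D65), not the camera spaces used in the paper.
SRGB_TO_XYZ = ((0.4124, 0.3576, 0.1805),
               (0.2126, 0.7152, 0.0722),
               (0.0193, 0.1192, 0.9505))
D65_WHITE = (0.95047, 1.0, 1.08883)

def _f(t):
    """CIELAB companding function."""
    d = 6 / 29
    return t ** (1 / 3) if t > d ** 3 else t / (3 * d * d) + 4 / 29

def rgb_to_lab(rgb):
    """Linear sRGB -> CIELAB after scaling to unit luminance (Y = 1),
    so that only chromaticity differences contribute to Delta E."""
    x, y, z = (sum(m * c for m, c in zip(row, rgb)) for row in SRGB_TO_XYZ)
    x, y, z = x / y, 1.0, z / y
    fx, fy, fz = (_f(c / w) for c, w in zip((x, y, z), D65_WHITE))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))

def delta_e_ab(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in L*a*b*."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(lab1, lab2)))

def hue_sweep(theta_deg=3.0, step_deg=5):
    """Hypothetical demo: tilt a neutral estimate by theta_deg toward every
    hue direction; returns (angular errors, Delta E*ab values)."""
    gt = (1.0, 1.0, 1.0)
    g = [c / math.sqrt(3) for c in gt]
    d1 = (1 / math.sqrt(2), -1 / math.sqrt(2), 0.0)               # R - G
    d2 = (1 / math.sqrt(6), 1 / math.sqrt(6), -2 / math.sqrt(6))  # (R + G) - 2B
    t = math.radians(theta_deg)
    angular, perceptual = [], []
    for phi_deg in range(0, 360, step_deg):
        phi = math.radians(phi_deg)
        # unit direction orthogonal to the gray axis, at hue angle phi
        d = [math.cos(phi) * a + math.sin(phi) * b for a, b in zip(d1, d2)]
        est = tuple(math.cos(t) * gc + math.sin(t) * dc for gc, dc in zip(g, d))
        angular.append(recovery_error(gt, est))
        perceptual.append(delta_e_ab(rgb_to_lab(gt), rgb_to_lab(est)))
    return angular, perceptual
```

Every estimate in the sweep sits at the same 3° angular error, yet under these assumptions ΔE∗ab varies by a factor of about two across hue directions. The angle is computed with `atan2` on the cross and dot products rather than `acos`, which stays accurate for the small angles typical of color constancy errors.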

Marco Buzzelli, "Visualizing Perceptual Differences in White Color Constancy" in Journal of Imaging Science and Technology, 2023, pp. 1-6, https://doi.org/10.2352/J.ImagingSci.Technol.2023.67.5.050404

- Received May 2023
- Accepted August 2023
- Published September 2023

| Camera | Method | Rec. err. (°) mean | Rec. err. (°) 99th p. | Rep. err. (°) mean | Rep. err. (°) 99th p. | ΔE∗ab mean | ΔE∗ab 99th p. | Pearson corr. Rec./ΔE∗ab | Pearson corr. Rep./ΔE∗ab |
|---|---|---|---|---|---|---|---|---|---|
| Canon EOS-1Ds | GE2 [19] | 3.754 | 14.668 | 4.464 | 16.729 | 11.280 | 40.103 | 0.9743 | 0.9533 |
| Canon EOS-1Ds | QU [20] | 3.644 | 13.517 | 4.496 | 18.078 | 10.439 | 39.852 | 0.9858 | 0.9796 |
| Canon EOS-1Ds | FC4 [14] | 2.332 | 8.356 | 2.901 | 9.871 | 7.136 | 23.567 | 0.9763 | 0.9580 |
| Canon EOS-5D | GE2 [19] | 4.127 | 14.310 | 5.242 | 18.227 | 11.934 | 41.426 | 0.9788 | 0.9623 |
| Canon EOS-5D | QU [20] | 2.853 | 13.915 | 3.678 | 18.324 | 8.257 | 38.007 | 0.9816 | 0.9570 |
| Canon EOS-5D | FC4 [14] | 1.849 | 8.773 | 2.396 | 12.241 | 5.543 | 23.679 | 0.9864 | 0.9620 |
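Each row of the table summarizes per-image errors by their mean and 99th percentile, plus the Pearson correlation between each angular error and ΔE∗ab. A minimal sketch of those statistics follows. It assumes the correlations are computed over per-image values and that the 99th percentile uses linear interpolation between sorted samples (NumPy's default convention); neither detail is stated here. The function `summarize` and its dictionary keys are hypothetical names.

```python
import math

def mean(xs):
    return sum(xs) / len(xs)

def percentile(xs, p):
    """p-th percentile with linear interpolation between sorted samples
    (assumed convention; matches NumPy's default 'linear' method)."""
    s = sorted(xs)
    k = (len(s) - 1) * p / 100.0
    lo = math.floor(k)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (k - lo)

def pearson(xs, ys):
    """Sample Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

def summarize(rec, rep, de):
    """Hypothetical helper: one table row from per-image recovery error,
    reproduction error and Delta E*ab (lists of the same length)."""
    return {
        "rec_mean": mean(rec), "rec_p99": percentile(rec, 99),
        "rep_mean": mean(rep), "rep_p99": percentile(rep, 99),
        "de_mean": mean(de), "de_p99": percentile(de, 99),
        "pearson_rec_de": pearson(rec, de),
        "pearson_rep_de": pearson(rep, de),
    }
```

Applied to the per-image errors of one camera/method pair, `summarize` would yield the eight numbers that make up one table row; it does not reproduce how the paper derives ΔE∗ab for each image, which is not described in this section.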

Open access