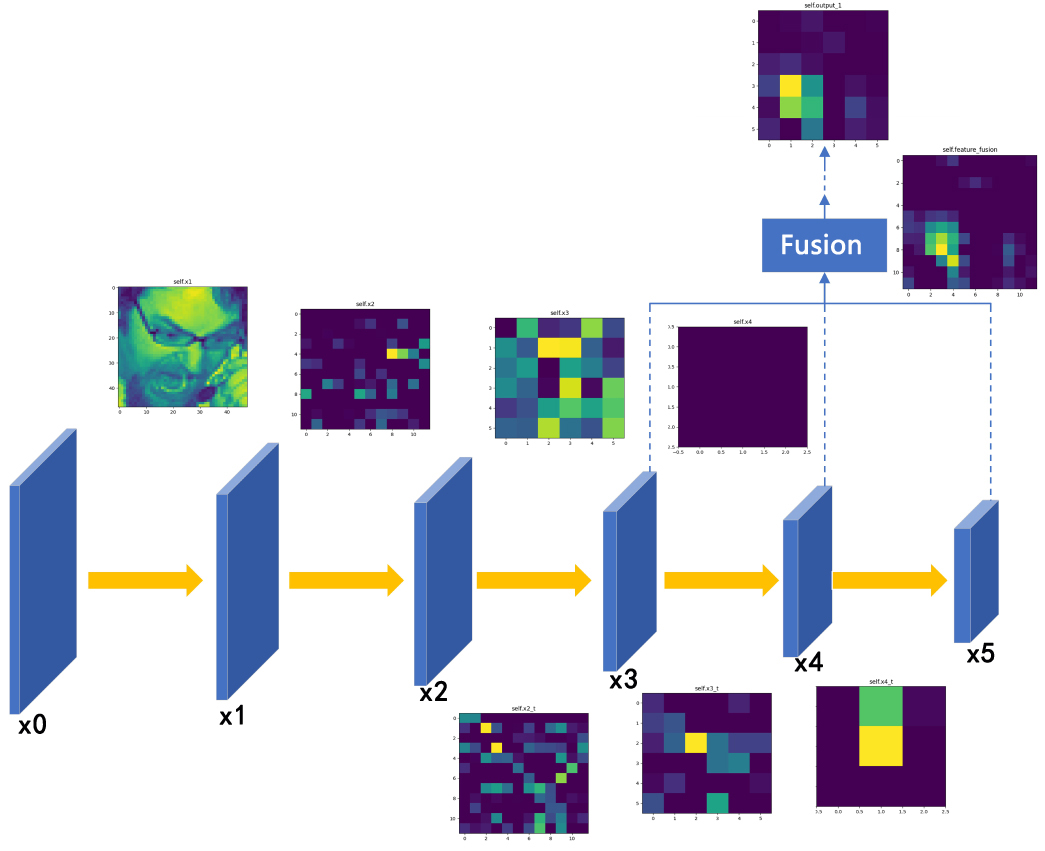

Facial expression recognition has broad application prospects in affective computing and has become a research hotspot in computer vision. However, the facial region often occupies only a small part of the image, so its effective resolution is low and the density of valuable information is correspondingly low. In addition, the locations of the regions that are critical to an expression vary with pose, lighting changes, and occlusion. It is therefore necessary to gather expression information from multiple levels and dimensions. To address these problems, we propose a new network for adaptive feature fusion: the multi-dimension and multi-level information fusion (MMIF) network. First, the low-level, mid-level, and high-level features extracted by the network were visualized and analysed. Considering the importance of mid-level features and the fact that high-level features contain vital semantic information, a fusion strategy was applied from mid-level to high-level features. At the same time, each up-sampled convolutional group was smoothed to aggregate multi-level features by residual learning. Then, the global attention mechanism (GAM) was introduced to realize cross-dimensional interactions among channels, spatial width, and spatial height. As a result, the proposed network attends both to features at different levels and to the salient regions of the image. It achieved competitive results of 68.39% on FER2013 and 100% on CK+.
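The multi-level fusion step described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the function names (`upsample2x`, `smooth3x3`, `fuse`) are hypothetical, the 3x3 mean filter stands in for a learned smoothing convolution, and the sketch assumes that the two feature maps share a channel count and differ by a factor of two in spatial size. The GAM attention stage is not covered.

```python
import numpy as np

def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def smooth3x3(x):
    """Per-channel 3x3 mean filter (assumed stand-in for a learned smoothing conv)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)), mode="edge")
    out = np.zeros(x.shape, dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += padded[:, dy:dy + h, dx:dx + w]
    return out / 9.0

def fuse(mid, high):
    """Smooth the up-sampled high-level map, then add it to the mid-level map.

    The addition plays the role of the residual connection: the mid-level
    features pass through unchanged and the high-level features are added on top.
    """
    return mid + smooth3x3(upsample2x(high))

# Toy feature maps: 2 channels, mid-level at 4x4 and high-level at 2x2.
mid = np.ones((2, 4, 4))
high = 2.0 * np.ones((2, 2, 2))
fused = fuse(mid, high)
print(fused.shape)
```

In a trained network the smoothing filter would be learned and the channel counts would typically be matched with a 1x1 convolution before the addition; both are omitted here to keep the sketch short.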

Mei Bie, Huan Xu, Quanle Liu, Yan Gao, Xiangjiu Che, "Multi-dimension and Multi-level Information Fusion for Facial Expression Recognition," in Journal of Imaging Science and Technology, 2023, pp. 1-11, https://doi.org/10.2352/J.ImagingSci.Technol.2023.67.4.040410
