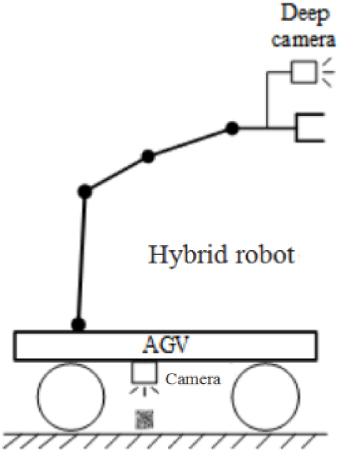

A hybrid robot combines the merits of an automated guided vehicle (AGV) and an industrial manipulator. With the aid of computer vision algorithms, the camera on the AGV serves as the eyes of the robot, allowing it to perceive and respond to its surroundings. To promote the industrial application of the hybrid robot, it is necessary to improve the navigation accuracy of the AGV and its ability to handle materials automatically in any pose. This paper therefore presents a fully automatic, high-precision grasping system for the hybrid robot that integrates high-precision visual positioning with automatic grasping. Both two-dimensional (2D) and three-dimensional (3D) features were employed to recognize the target. Specifically, the local features of the target were matched with those of a point cloud segment of the scene, and the pose transform matrix between that segment and the target was obtained, completing the recognition and positioning of the target. Experimental results show that the proposed method achieved a recognition rate of 97.6% in simple scenes and 87.2% in complex, occluded scenes, and reduced the recognition time to 402.3 ms. These results promote the application of the hybrid robot in industrial operations such as automatic grasping, spraying, and stacking.
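The abstract does not state how the pose transform matrix is computed from the matched features. As an illustration only, the following minimal sketch assumes that matching has already produced paired 3D points (target model and scene segment) and estimates the rigid transform with the standard SVD-based (Kabsch) least-squares solution. The function name `estimate_rigid_transform` and its inputs are hypothetical and are not taken from the paper.

```python
import numpy as np


def estimate_rigid_transform(model_pts, scene_pts):
    """Estimate a 4x4 homogeneous transform T with scene ~= R @ model + t.

    Assumption: model_pts[i] and scene_pts[i] are already matched 3D points
    (N x 3 arrays, N >= 3, not all collinear). This is the generic Kabsch
    solution, not necessarily the method used by the authors.
    """
    model_pts = np.asarray(model_pts, dtype=float)
    scene_pts = np.asarray(scene_pts, dtype=float)

    # Center both point sets on their centroids.
    model_centroid = model_pts.mean(axis=0)
    scene_centroid = scene_pts.mean(axis=0)

    # 3x3 cross-covariance matrix of the centered correspondences.
    H = (model_pts - model_centroid).T @ (scene_pts - scene_centroid)

    # The SVD of H gives the least-squares rotation.
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # The translation maps the rotated model centroid onto the scene centroid.
    t = scene_centroid - R @ model_centroid

    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

In practice, feature matches contain outliers, so an estimate of this kind is usually wrapped in a robust scheme such as RANSAC and then refined, for example with ICP; whether the paper does so is not stated in the abstract.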

Xiao-Fang Zhao, Xue-Fang Chen, "An Intelligent Material Handling System for Hybrid Robot based on Visual Navigation," Journal of Imaging Science and Technology, 2023, pp. 1-7, https://doi.org/10.2352/J.ImagingSci.Technol.2023.67.4.040409
