Increasingly sophisticated algorithms, including trained artificial intelligence methods, are now widely employed to enhance image quality. Unfortunately, these algorithms often produce somewhat hallucinatory results, showing details that do not correspond to the actual scene content. It is not possible to avoid all hallucination, but by modeling pixel value error, it becomes feasible to recognize when a potential enhancement would generate image content that is statistically inconsistent with the image as captured. An image enhancement algorithm should never give a pixel a value that is outside of the error bounds for the value obtained from the sensor. More precisely, the repaired pixel values should have a high probability of accurately reflecting the true scene content. The current work investigates computation methods and properties of a class of pixel value error models that empirically map a probability density function (PDF). The accuracy of maps created by various practical single-shot algorithms is compared to that obtained by analysis of many images captured under controlled circumstances. In addition to applications discussed in earlier work, the use of these PDFs to constrain AI-suggested modifications to an image is explored and evaluated.
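
The constraint described above can be illustrated with a minimal sketch. It is not the error model from the paper: the empirical PDF is replaced here by an assumed symmetric interval of `k` standard deviations around the captured value, and the function name, the `sigma` map, and the default `k` are all hypothetical choices made for illustration.

```python
import numpy as np

def constrain_to_error_bounds(captured, enhanced, sigma, k=2.0):
    """Clip enhanced pixel values to captured +/- k * sigma.

    ASSUMPTION: a symmetric interval stands in for the empirical
    per-pixel PDF; `sigma` is a hypothetical per-pixel error estimate.
    Returns the constrained values and a mask of rejected suggestions.
    """
    captured = np.asarray(captured, dtype=np.float64)
    enhanced = np.asarray(enhanced, dtype=np.float64)
    sigma = np.asarray(sigma, dtype=np.float64)

    lower = captured - k * sigma
    upper = captured + k * sigma

    # True where the suggested value is inconsistent with the capture.
    rejected = (enhanced < lower) | (enhanced > upper)
    # Pull inconsistent values back to the nearest bound.
    constrained = np.clip(enhanced, lower, upper)
    return constrained, rejected
```

With captured values of 100, an error estimate of 2, and `k = 2`, suggested values of 120 and 90 would be pulled back to 104 and 96, while a suggestion of 101 would pass through unchanged.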

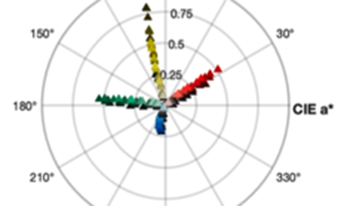

In this paper, we present a computationally efficient gamut mapping algorithm designed for tone-mapped images, focusing on preserving hue fidelity while providing flexibility to retain either luminance or saturation for visually consistent results. The algorithm operates in both RGB and YUV color spaces, enabling practical implementation in hardware and software for real-time systems. We demonstrate that the proposed method effectively mitigates hue shifts during gamut mapping, offering a computationally viable alternative to more complex methods based on perceptually uniform color spaces.
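
As a rough illustration of the luminance-retaining case, the sketch below desaturates an out-of-gamut RGB value toward the gray of equal luma until every channel fits in [0, 1]. This is a generic technique, not the algorithm proposed in the paper: the function name is hypothetical, the BT.709 luma weights are an assumption, and keeping the direction of the chroma vector is used only as a simple proxy for hue.

```python
def map_to_gamut_keep_luma(rgb, weights=(0.2126, 0.7152, 0.0722)):
    """Scale chroma toward gray until the pixel fits in [0, 1].

    ASSUMPTIONS: `rgb` is linear RGB, `weights` are BT.709 luma
    coefficients, and hue is approximated by the direction of the
    chroma vector (rgb - luma), which uniform scaling leaves intact.
    """
    luma = sum(w * c for w, c in zip(weights, rgb))
    # If the luma itself is out of range, the result collapses to
    # black or white.
    luma = min(max(luma, 0.0), 1.0)

    # Largest chroma scale in [0, 1] that keeps every channel in range.
    scale = 1.0
    for c in rgb:
        diff = c - luma
        if c > 1.0:
            scale = min(scale, (1.0 - luma) / diff)
        elif c < 0.0:
            scale = min(scale, (0.0 - luma) / diff)

    # The final clamp only guards against floating-point overshoot.
    return tuple(min(max(luma + scale * (c - luma), 0.0), 1.0) for c in rgb)
```

Because all three channels are moved along the same line through the gray point, the ratios between channel differences are unchanged, which is why this kind of mapping avoids the hue shifts that independent per-channel clipping introduces.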

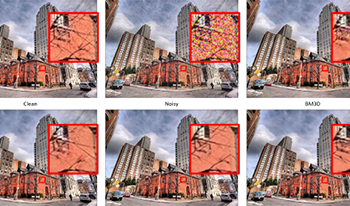

Image denoising is a crucial task in image processing, aiming to enhance image quality by effectively eliminating noise while preserving essential structural and textural details. In this paper, we introduce a novel denoising algorithm that integrates residual Swin Transformer blocks (RSTB) with the concept of classical non-local means (NLM) filtering. The proposed solution aims to strike a balance between performance and computational complexity and is structured into three main components: (1) feature extraction using a multi-scale approach to capture diverse image features with RSTB, (2) multi-scale feature matching inspired by NLM, which computes pixel similarity through learned embeddings and enables accurate noise reduction even in high-noise scenarios, and (3) residual detail enhancement using the Swin Transformer block, which recovers high-frequency details lost during denoising. Our extensive experiments demonstrate that the proposed model, with 743k parameters, achieves the best or competitive performance among state-of-the-art models with a comparable number of parameters. This makes the proposed solution a preferred option for applications that prioritize detail preservation with limited compute resources. Furthermore, the proposed solution is flexible enough to adapt to other image restoration problems such as deblurring and super-resolution.
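
The NLM-style matching in component (2) can be sketched in a few lines. This is only the classical weighting idea applied to per-pixel embedding vectors, not the proposed network: the embeddings here are supplied by the caller rather than learned by RSTB, and the function name and the bandwidth `h` are hypothetical.

```python
import numpy as np

def nlm_average(values, embeddings, h=1.0):
    """Non-local-means style averaging driven by embedding similarity.

    ASSUMPTIONS: `values` holds N noisy pixel values, `embeddings`
    holds one D-dimensional feature vector per pixel (hand-made here,
    learned in the paper), and `h` is a hypothetical bandwidth.
    """
    values = np.asarray(values, dtype=np.float64)        # shape (N,)
    emb = np.asarray(embeddings, dtype=np.float64)       # shape (N, D)

    # Squared Euclidean distance between every pair of embeddings.
    diff = emb[:, None, :] - emb[None, :, :]
    dist2 = (diff ** 2).sum(axis=-1)                     # shape (N, N)

    # Similar pixels get large weights, dissimilar ones decay to zero.
    weights = np.exp(-dist2 / (h * h))
    weights /= weights.sum(axis=1, keepdims=True)

    # Each output is a weighted average over all pixels.
    return weights @ values
```

Pixels whose embeddings are close are averaged together, so noise is suppressed within a group of similar pixels while pixels with distant embeddings contribute almost nothing.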

This work aims to improve the reading performance of JAB Code, an ISO-standardized polychrome barcode. The weakness of the standardized decoding algorithm is its very low reading performance of under 10% for very large and rectangular codes. In many IT security applications, however, large and rectangular codes are required to carry a large payload. In this paper, we present three different methods to improve the decoder. These methods aim at determining the version size of the JAB Code to be read, which is the step after the JAB Code has been located by its finder patterns and before decoding can take place. The three methods each have advantages and disadvantages in accuracy and performance. The evaluation compares detection rates and error performance for the Baseline, Segmentation, Zero Crossing, and Local Sampling methods. The results show that Local Sampling achieves the highest detection rates, with 285 partial and 131 complete detections, while also maintaining the lowest error levels; the other methods perform significantly worse. Local Sampling thus addresses the challenge of version size determination with improved accuracy and reliability.

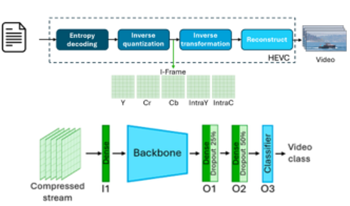

While conventional video fingerprinting methods act in the uncompressed domain (pixels and/or representations derived directly from pixels), the present paper establishes a proof of concept for compressed-domain video fingerprinting. Visual content is thus processed at the level of compressed stream syntax elements (luma/chroma coefficients and intra prediction modes) by a custom NN-based solution built on conventional CNN backbones (ResNet and MobileNet). The experimental validation is carried out on one state-of-the-art and one custom HEVC-compressed video database and yields Accuracy, Precision, and Recall values larger than 0.9.

Video streaming accounts for more than 80% of the carbon emissions generated by worldwide digital technology consumption, which in turn accounts for 5% of worldwide carbon emissions. Hence, green video encoding has emerged as a research field devoted to reducing the size of video streams and the complexity of the decoding/encoding operations while maintaining a preestablished visual quality. With the specific aim of tracking green-encoded video streams, the present paper studies the possibility of identifying the last video encoder applied in distribution scenarios involving multiple re-encodings. To this end, classification solutions built on backbones from the VGG, ResNet, and MobileNet families are considered to discriminate among MPEG-4 AVC stream syntax elements, such as luma/chroma coefficients or intra prediction modes. The video content totals 2 hours and is structured in two databases. Three encoders are studied in turn, namely a proprietary green-encoder solution and the two default encoders available on a large video sharing platform and on a popular social media platform, respectively. The quantitative results show classification accuracy ranging from 75% to 100%, depending on the specific architecture, the subset of classified elements, and the dataset.

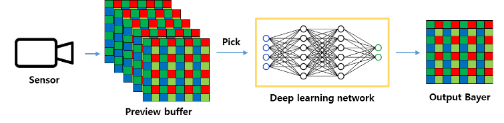

With the emergence of 200-megapixel QxQ Bayer pattern image sensors, remosaic technology, which rearranges color filter arrays (CFAs) into Bayer patterns, has become increasingly important. However, the limitations of the in-sensor remosaic algorithm often result in artifacts that degrade the details and textures of the images. In this paper, we propose a deep learning-based artifact correction method to enhance image quality within a mobile environment while minimizing shutter lag. We generated a training dataset using a high-performance remosaic algorithm and trained a lightweight U-Net-based network. The proposed network effectively removes these artifacts, thereby improving the overall image quality. Additionally, it takes only about 15 ms to process a 4000x3000 image on a Galaxy S22 Ultra, making it suitable for real-time applications.

Many lenses have significantly poorer sharpness in the corners of the image than they have at the center due to optical defects such as coma, astigmatism, and field curvature. In some circumstances, such a blur is not problematic. It could even be beneficial by helping to isolate the subject from the background. However, if there exists similar content in the scene that is not blurry, as happens commonly in landscapes or other scenes that have large textured regions, this type of defect can be extremely undesirable. The current work suggests that, in problematic circumstances where there exists visually similar sharp content, it should be possible to use that sharp content to synthesize detail to enhance the defectively blurry areas by overpainting. The new process is conceptually very similar to inpainting, but is overpainting in the same sense that the term is used in art restoration: it is attempting to enhance the underlying image by creating new content that is congruous with details seen in similar, uncorrupted, portions of the image. The kongsub (Kentucky’s cONGruity SUBstitution) software tool was created to explore this new approach. The algorithms used and various examples are presented, leading to a preliminary evaluation of the merits of this approach. The most obvious limitation is that this approach does not sharpen blurry regions for which there is no similar sharp content in the image.

Assessing distances between images and image datasets is a fundamental task in vision-based research. It remains a challenging open problem in the literature and, despite the criticism it receives, the most ubiquitous method remains the Fréchet Inception Distance. The Inception network is trained on a specific labeled dataset, ImageNet, which has been the core of the criticism in the most recent research. Improvements were shown by moving to self-supervised learning over ImageNet, leaving the training data domain as an open question. We make that last leap and provide the first analysis of domain-specific feature training and its effects on feature distance in the widely researched facial image domain. We present our findings and insights on this domain specialization for Fréchet distance and image neighborhoods, supported by extensive experiments and in-depth user studies.
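
For reference, the Fréchet distance between two feature sets is usually computed by fitting a Gaussian to each set and evaluating the squared distance ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}). The sketch below follows that standard formula on arbitrary feature arrays; the function name is hypothetical, the feature extractor (Inception or a domain-specific network) is assumed to have been applied beforehand, and the trace of the matrix square root is obtained from eigenvalues instead of an explicit matrix square root.

```python
import numpy as np

def frechet_distance_squared(feats_a, feats_b):
    """Squared Frechet distance between Gaussians fitted to two sets.

    ASSUMPTIONS: each input is an (N, D) array of precomputed feature
    vectors with N > 1 and D >= 2; the feature network is out of scope.
    """
    a = np.asarray(feats_a, dtype=np.float64)
    b = np.asarray(feats_b, dtype=np.float64)

    mu_a, mu_b = a.mean(axis=0), b.mean(axis=0)
    cov_a = np.cov(a, rowvar=False)
    cov_b = np.cov(b, rowvar=False)

    # Tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative for
    # positive semi-definite covariances (up to rounding noise).
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    mean_term = ((mu_a - mu_b) ** 2).sum()
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)
```

Comparing a feature set with itself should give a value near zero, and shifting every feature vector by a constant offset changes only the mean term, which makes for a quick sanity check before swapping in features from a different network.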

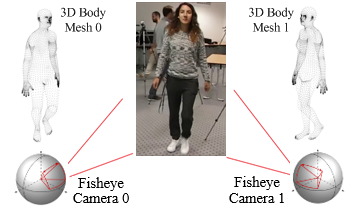

Fisheye cameras providing omnidirectional vision with up to 360° field-of-view (FoV) allow a multi-camera system to cover a given space with fewer cameras. The main objective of the paper is to develop fast and accurate algorithms for automatic calibration of multiple fisheye cameras that fully utilize human semantic information without using predetermined calibration patterns or objects. The proposed automatic calibration method detects humans from each fisheye camera in equirectangular or spherical images. For each detected human, the portion of the image defined by the bounding box is converted to an undistorted image patch with normal FoV by a perspective mapping parameterized by the associated view angle. 3D human body meshes are then estimated by a pretrained Human Mesh Recovery (HMR) model, and the vertices of each 3D human body mesh are projected onto the 2D image plane of the corresponding image patch. A Structure-from-Motion (SfM) algorithm is used to reconstruct 3D shapes from a pair of cameras and estimate the essential matrix. Camera extrinsic parameters can be calculated from the estimated essential matrix and the corresponding perspective mappings. By assuming that the pose of one main camera in world coordinates is known, the poses of all other cameras in the multi-camera system can be calculated. Fisheye camera pairs rotated by different angles are evaluated using (1) 2D projection error and (2) rotation and translation errors as performance metrics. The proposed method is shown to perform more accurate calibration than methods using appearance-based feature extractors, e.g., Scale-Invariant Feature Transform (SIFT), and deep learning-based human joint estimators, e.g., MediaPipe.
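
The step from an essential matrix to camera extrinsics can be sketched with the textbook SVD decomposition. This is the generic two-view relation, not the paper's pipeline: it assumes the convention X2 = R·X1 + t between the two camera frames, omits the perspective mappings and the HMR-based correspondences entirely, and uses hypothetical function names.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]x, so that skew(t) @ v == np.cross(t, v)."""
    x, y, z = t
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def essential_from_pose(R, t):
    """Essential matrix E = [t]x R for the ASSUMED convention X2 = R X1 + t."""
    return skew(t) @ np.asarray(R, dtype=np.float64)

def decompose_essential(E):
    """Return the four (R, t) candidates encoded by an essential matrix.

    The translation is recovered only up to scale and sign, so `t` is
    a unit vector. Picking the physically valid candidate needs an
    extra check that triangulated points lie in front of both cameras,
    which is not shown here.
    """
    U, _, Vt = np.linalg.svd(np.asarray(E, dtype=np.float64))
    # Force proper rotations; E is only defined up to sign.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    R1 = U @ W @ Vt
    R2 = U @ W.T @ Vt
    t = U[:, 2]  # left null vector of E
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]
```

Once the valid relative pose of a camera pair is selected, it can be composed with the known pose of the main camera to place the second camera in world coordinates, with the translation scale fixed by some external measurement.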