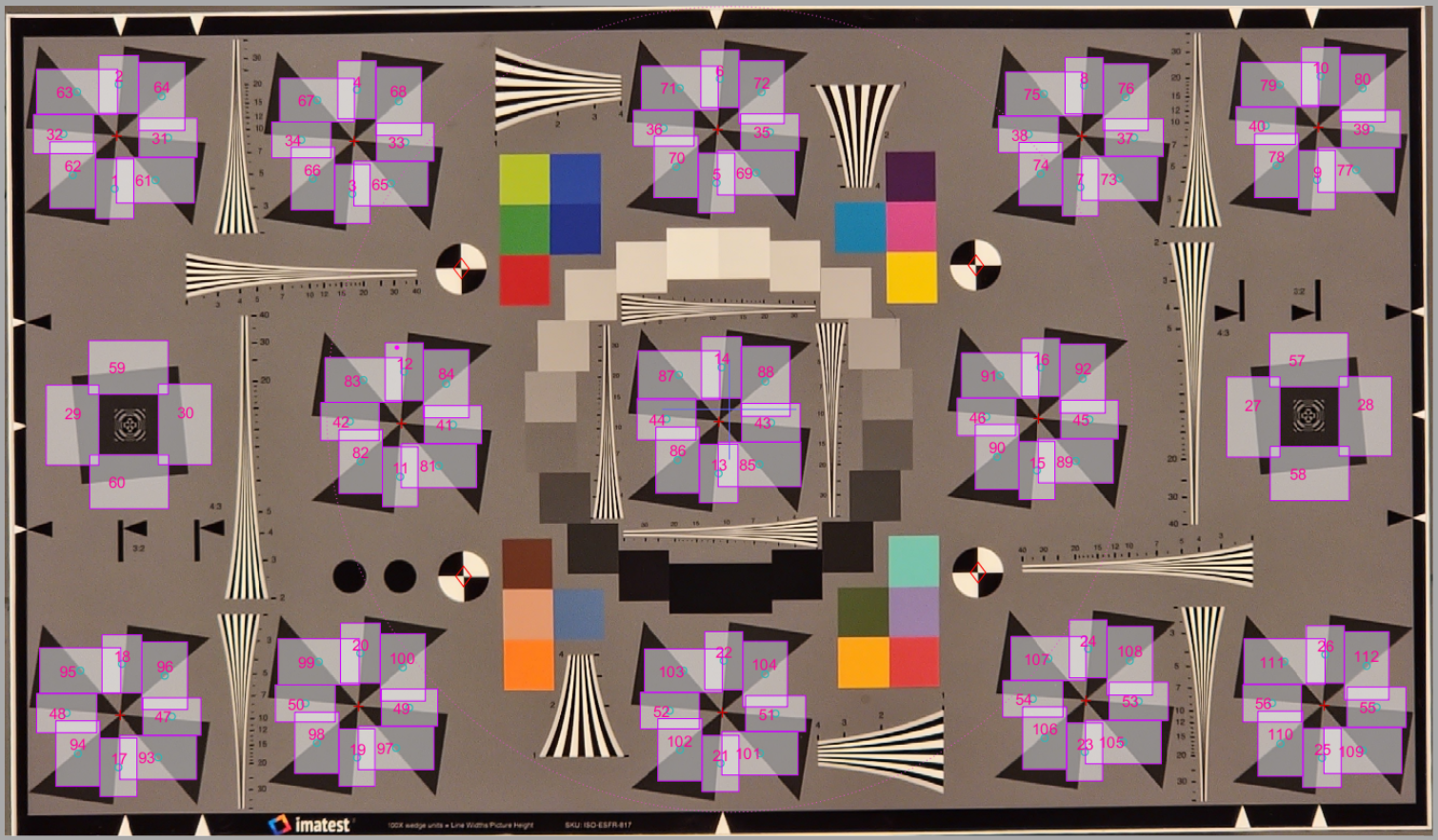

We present a comprehensive framework for conveniently measuring camera information capacity and related performance metrics from the widely used slanted-edge (e-SFR) test pattern. The goal of this work is to develop a set of image quality metrics that can predict the performance of Machine Vision (MV) and Artificial Intelligence (AI) systems, assist with camera selection, and guide the design of electronic filters that optimize system performance. The new methods go far beyond the standard approach of estimating system performance from sharpness and noise (or Signal-to-Noise Ratio), which often involves more art than science. The metrics include Noise Power Spectrum (NPS), Noise Equivalent Quanta (NEQ), and two metrics that quantify the detectability of objects and edges: Ideal Observer Signal-to-Noise Ratio (SNRi) and Edge SNRi. We show how to use these metrics to design electronic filters that optimize object and edge detection performance. The new measurements can be used to solve several problems, including finding a camera that meets performance requirements with the minimum number of pixels. This matters because fewer pixels mean faster processing, lower energy consumption, and lower cost.
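These quantities can be related through the standard frequency-domain definitions from imaging science. NEQ is NEQ(f) = μ²·MTF²(f)/NPS(f), where μ is the mean signal. For an ideal (prewhitening) observer detecting an object with Fourier spectrum ΔS(f), the squared detectability is SNRi² = ∫ |ΔS(f)|² MTF²(f)/NPS(f) df.

The sketch below is a one-dimensional simplification of those formulas, whereas real measurements integrate over two-dimensional frequency. It assumes linearized signal units and uniformly spaced frequency bins of width `df`. The function names and sample curves are hypothetical and are not the framework's own API.

```python
import numpy as np


def neq(mtf, nps, mean_signal):
    """Noise Equivalent Quanta per frequency bin: mu^2 * MTF(f)^2 / NPS(f).

    Assumes the MTF is normalized to 1 at f = 0 and that NPS and mean_signal
    are expressed in the same linearized signal units.
    """
    mtf = np.asarray(mtf, dtype=float)
    nps = np.asarray(nps, dtype=float)
    return mean_signal**2 * mtf**2 / nps


def snri(delta_s, mtf, nps, df):
    """Ideal (prewhitening) observer SNR as a 1-D Riemann sum.

    delta_s: magnitude of the object's Fourier spectrum in each frequency bin
    (hypothetical input; a full analysis would integrate over 2-D frequency).
    """
    delta_s = np.asarray(delta_s, dtype=float)
    mtf = np.asarray(mtf, dtype=float)
    nps = np.asarray(nps, dtype=float)
    snr_squared = np.sum(delta_s**2 * mtf**2 / nps) * df
    return float(np.sqrt(snr_squared))


# Hypothetical example curves, with frequency in cycles/pixel.
freqs = np.linspace(0.0, 0.5, 6)
mtf_curve = np.exp(-(freqs / 0.3) ** 2)   # Gaussian-like MTF
nps_curve = np.full_like(freqs, 2.0)      # white (flat) noise spectrum
print(neq(mtf_curve, nps_curve, mean_signal=100.0))
print(snri(np.ones_like(freqs), mtf_curve, nps_curve, df=0.1))
```

Because NPS sits in the denominator, a filter that boosts the MTF only helps detectability if it raises MTF² faster than it raises the noise spectrum at the frequencies where the object has energy.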

This paper investigates the relationship between image quality (IQ) and computer vision performance. Two IQ metrics, as defined in the IEEE P2020 draft Standard for Image quality in automotive systems, are used to determine the impact of image quality on object detection (OD). These metrics are (i) the Modulation Transfer Function (MTF), the most commonly used metric for measuring camera sharpness, and (ii) Contrast Transfer Accuracy (CTA), a newly defined, state-of-the-art metric for measuring image contrast. The results show that the measured MTF and CTA of a camera system are affected by image signal processor (ISP) tuning. Some correlation exists between MTF and OD performance: in some models, AP50:95 tends to improve as MTF50 increases. In contrast, scenes with similar CTA scores can have widely varying OD performance, which limits CTA's ability to predict it. For example, Gaussian noise and edge enhancement produce similar CTA scores but different AP50:95 scores. The results suggest that MTF is a better predictor of machine learning (ML) performance than CTA.
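MTF50 is the spatial frequency at which the MTF falls to 0.5, or 50% of its zero-frequency value. The following minimal sketch extracts it from a sampled, normalized MTF curve by linear interpolation between the two samples that bracket 0.5. The function name is hypothetical, and real analysis software may smooth or fit the curve first.

```python
import numpy as np


def mtf50(freqs, mtf):
    """Frequency at which a normalized MTF first falls to 0.5.

    Uses linear interpolation between the bracketing samples. Returns None
    if the MTF never drops to 0.5 within the sampled range.
    """
    freqs = np.asarray(freqs, dtype=float)
    mtf = np.asarray(mtf, dtype=float)
    below = np.nonzero(mtf <= 0.5)[0]
    if below.size == 0:
        return None
    i = below[0]
    if i == 0:
        return float(freqs[0])  # already at or below 0.5 at the first sample
    f0, f1 = freqs[i - 1], freqs[i]
    m0, m1 = mtf[i - 1], mtf[i]  # m0 > 0.5 >= m1, so m0 - m1 > 0
    return float(f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1))


print(mtf50([0.0, 0.1, 0.2, 0.3], [1.0, 0.8, 0.4, 0.1]))  # cycles/pixel
```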

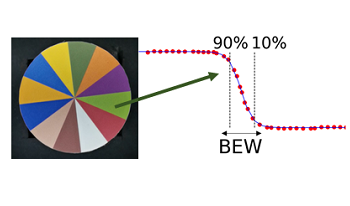

Event-based vision sensors (EVS) use smart pixels that detect, at the pixel level, whether relative illumination changes exceed a predefined temporal contrast threshold. Because EVS read out these events asynchronously, they provide low latency and high temporal resolution, which makes them well suited to complement conventional CMOS image sensors (CIS). Emerging hybrid CIS+EVS sensors fuse high-spatial-resolution intensity frames with low-latency event information to enhance applications such as deblurring and video frame interpolation (VFI) for slow-motion video capture. This paper employs an edge-sharpness-based metric, Blurred Edge Width (BEW), to benchmark EVS-assisted slow-motion capture against CIS-only solutions. The EVS-assisted VFI interpolates a 120 fps CIS video stream by a factor of 64, yielding an interpolated frame rate of 7680 fps. We observed that EVS-assisted VFI dramatically outperforms a CIS-only VFI solution operating on a 120 fps stream. Furthermore, the hybrid EVS+CIS VFI achieves performance comparable to high-speed CIS-only solutions that capture frames directly at 480 fps or 1920 fps and then apply additional CIS-only VFI. The hybrid approach does so at significantly lower data rates: in our study, we observed data-rate reductions by factors of 2.6 and 10.5.
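The pixel-level behavior described above is commonly modeled as a threshold on changes in log intensity. A pixel emits a positive or negative event whenever its log intensity has moved by the contrast threshold since its last event.

The following single-pixel sketch assumes ideal, noise-free samples and ignores refractory period and readout latency. The function name and default threshold are hypothetical, and real sensors differ in detail.

```python
import math


def generate_events(timestamps, intensities, threshold=0.2):
    """Idealized single-pixel EVS model (hypothetical helper).

    An event is emitted each time log intensity moves by `threshold`
    (the temporal contrast threshold) away from the level at the last event.
    Returns a list of (timestamp, polarity) tuples, polarity +1 or -1.
    """
    events = []
    reference = math.log(intensities[0])
    for t, value in zip(timestamps[1:], intensities[1:]):
        log_i = math.log(value)
        # A large change between samples can cross the threshold several times.
        while log_i - reference >= threshold:
            reference += threshold
            events.append((t, +1))
        while reference - log_i >= threshold:
            reference -= threshold
            events.append((t, -1))
    return events


# Brightening followed by darkening produces ON events and then OFF events.
print(generate_events([0, 1, 2], [1.0, math.exp(0.5), math.exp(-0.1)]))
```

Because only changes are reported, a static scene produces no data, which is one reason hybrid sensors can reach high effective frame rates at lower data rates.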

Consumer cameras are indispensable tools for communication, content creation, and remote work, but their image and video quality can be affected by many factors, such as lighting, hardware, scene content, face detection, and automatic image processing algorithms. This paper investigates how web and phone camera systems perform in scenes containing faces with diverse skin tones, and how that performance can be objectively measured using standard procedures and analyses. We closely examine image quality factors (IQFs) commonly affected by scene content, emphasizing automatic white balance (AWB), automatic exposure (AE), and color reproduction, following Valued Camera Experience (VCX) standard procedures. Video tests are conducted on scenes containing standard-compliant mannequin heads and on a novel set of AI-generated faces covering 10 additional skin tones based on the Monk Skin Tone Scale. The findings indicate that color shifts, exposure errors, and reduced overall image fidelity are common in scenes containing darker skin tones. This reveals a major shortcoming in modern automatic image processing algorithms and highlights the need to test across a more diverse range of skin tones when developing automatic processing pipelines and the standards that evaluate them.
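Color reproduction and white-balance errors of this kind are usually expressed as distances in CIELAB. The sketch below uses two simple measures:

- the CIE 1976 color difference ΔE*ab;
- the chroma C*ab of a nominally neutral patch, as a rough AWB error indicator.

VCX may specify a different formula (for example CIEDE2000), so these helpers, whose names are hypothetical, are illustrative only.

```python
import math


def delta_e76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance between two CIELAB triples."""
    return math.dist(lab1, lab2)


def neutral_chroma(lab):
    """Chroma C*ab of a patch; 0 for a perfectly neutral (gray) rendering."""
    _, a, b = lab
    return math.hypot(a, b)


# Hypothetical reference vs. captured skin patch, and a gray patch with a color cast.
print(delta_e76((50, 0, 0), (53, 4, 0)))  # → 5.0
print(neutral_chroma((60, 3, -4)))
```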

Portraits are one of the most common use cases in photography, especially smartphone photography. However, evaluating quality on real portraits is costly, inconvenient, and difficult to reproduce. We propose a new method for evaluating a wide range of detail-preservation renditions on realistic mannequins. This laboratory setup can cover commercial cameras ranging from videoconferencing devices to high-end DSLRs. Our method is based on the following steps:

1. training a machine learning model on a perceptual-scale target;
2. using two different regions of interest (ROIs) per mannequin, depending on the quality of the input portrait image;
3. merging the two quality scales to produce the final wide-range scale.

In addition to providing a fine-grained, wide-range detail-preservation quality output, the proposed method is shown in numerical experiments to be robust to noise and sharpening. This is unlike other commonly used methods, such as texture acutance measured on the Dead Leaves chart.

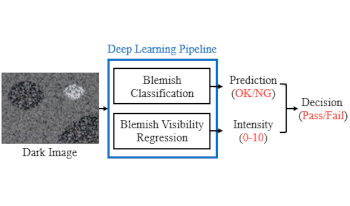

Low-light image quality is becoming a competitive edge for mobile phones. Ensuring it requires screening out, in advance, defects that cause abnormalities in dark photos, especially dark blemishes. However, because the existing scoring method has low consistency, separating dark blemish patterns requires substantial manual effort. This paper proposes a novel deep learning-based screening method to solve this problem. The proposed pipeline uses two ResNet-D models of different depths to perform classification and visibility regression, respectively. It then derives a new score that combines the outputs of both models. In addition, we collected a large-scale image set from real manufacturing processes to train the models, and labeled it with two label systems, one suited to each model. Experimental results show the performance of the deep learning models trained and validated on these datasets. Compared with the conventional handcrafted method, our classification model significantly improves screening accuracy and F1-score. The visibility regression approach also shows a high Pearson correlation coefficient with the assessments of 30 expert engineers, and the inference output of our regression model is consistent with those assessments.
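The evaluation relies on two standard metrics:

- the F1-score, for the screening classifier;
- the Pearson correlation coefficient, between model outputs and expert visibility ratings.

The plain-Python sketch below shows the textbook definitions of both. It is not the paper's evaluation code.

```python
import math


def f1_score(tp, fp, fn):
    """F1 = harmonic mean of precision and recall, from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical counts and ratings, for illustration only.
print(f1_score(tp=8, fp=2, fn=2))
print(pearson_r([1.0, 2.0, 3.5, 4.0], [1.2, 2.1, 3.3, 4.2]))
```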

In the complementary metal-oxide-semiconductor (CMOS) image sensor (CIS) industry, technical advances have introduced unexpected artifacts into photographs. Colored dots, known as false color, appear in images from CISs that employ modified color filter arrays and remosaicing image signal processors (ISPs). Objective image quality assessment (IQA) metrics have therefore become important for CIS manufacturers seeking to minimize these artifacts. In this study, we propose a novel no-reference IQA metric that quantifies false color in practical IQA scenarios. To overcome the absence of a reference image, we construct a pseudo-reference that infers the ideal sensor output. We detect distorted pixels by identifying outlier colors with a statistical method. We then generate the pseudo-reference by correcting those outlier pixels with appropriate colors determined by an unsupervised clustering model. Using the derived pseudo-reference, our method computes a metric score from the color difference between the pseudo-reference and the input image, designed to reflect the results of our subjective false-color visibility analysis.
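The pipeline described above has three stages: statistical outlier detection, cluster-based correction into a pseudo-reference, and a color-difference score. It can be sketched as follows, but this is not the authors' implementation. The following are all assumptions:

- a robust z-score on a*/b* based on the median absolute deviation;
- plain k-means as the clustering model;
- the specific thresholds;
- an unweighted mean color difference.

The sketch also omits the visibility weighting derived from the subjective study. All function names are hypothetical.

```python
import numpy as np


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means on an (N, 3) float array; returns k cluster centers."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].copy()
    for _ in range(iters):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members):  # keep the previous center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers


def false_color_score(lab_image, z_thresh=3.5, k=4):
    """Hypothetical no-reference false-color score using a pseudo-reference.

    lab_image: H x W x 3 array in CIELAB. Returns (score, pseudo_reference).
    """
    pixels = lab_image.reshape(-1, 3).astype(float)
    chroma = pixels[:, 1:]  # a*, b* channels
    median = np.median(chroma, axis=0)
    mad = np.median(np.abs(chroma - median), axis=0) + 1e-6  # avoid divide-by-zero
    robust_z = 0.6745 * np.abs(chroma - median) / mad
    outliers = (robust_z > z_thresh).any(axis=1)

    reference = pixels.copy()
    if outliers.any() and not outliers.all():
        inliers = pixels[~outliers]
        centers = kmeans(inliers, min(k, len(inliers)))
        # Replace each outlier with the color of its nearest inlier cluster center.
        d = np.linalg.norm(pixels[outliers][:, None, :] - centers[None, :, :], axis=2)
        reference[outliers] = centers[d.argmin(axis=1)]

    # Score: mean Euclidean (CIE76-style) color difference to the pseudo-reference.
    score = float(np.linalg.norm(pixels - reference, axis=1).mean())
    return score, reference.reshape(lab_image.shape)


img = np.zeros((10, 10, 3))
img[..., 0] = 50.0     # flat mid-gray patch in CIELAB
img[3, 4, 1] = 40.0    # one simulated false-color pixel
score, pseudo_ref = false_color_score(img)
print(score)
```

Using median and MAD rather than mean and standard deviation keeps the outlier test from being skewed by the very false-color pixels it is trying to find.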

In this paper, I present a proposed virtual reality (VR) subjective experiment to be performed at Texas State University. The experiment is part of the VQEG-IMG test plan for defining a new recommendation for subjective assessment of eXtended Reality (XR) communications (work item ITU-T P.IXC). More specifically, I discuss the challenges of estimating user quality of experience (QoE) for immersive applications and detail the VQEG-IMG test plan tasks for subjective XR QoE assessment. I also describe the design choices for the audiovisual experiment to be performed at Texas State University. Its goal is to compare two possible scenarios for teleconference meetings: a virtual reality representation and a realistic representation.

As video and display technologies evolve rapidly, there is interest in better understanding user preferences for video quality and the factors that shape them. This study focuses on subjective video quality assessment (VQA) of TV displays, considering a range of factors that influence the viewer experience. We conducted two psychophysical experiments to investigate the latent factors affecting human-perceived video quality. Our results offer insights into how these factors contribute to video quality perception. They can guide researchers and developers who aim to optimize display and viewing-environment settings to give end users an optimal viewing experience.
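Subjective ratings from experiments like these are typically summarized per test condition as a mean opinion score (MOS) with a confidence interval. The minimal sketch below is not the study's own analysis pipeline and does not cover the latent-factor analysis. It assumes ratings on a numeric category scale (for example, 1 to 5) and uses a normal-approximation interval. The function name is hypothetical.

```python
import math
import statistics


def mos_with_ci(ratings, z=1.96):
    """Mean opinion score and half-width of an approximate 95% confidence interval.

    Uses the normal approximation (z = 1.96); small panels would normally use
    a Student's t quantile instead.
    """
    mos = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from five viewers for one test condition.
mos, half_width = mos_with_ci([4, 5, 3, 4, 4])
print(f"MOS = {mos:.2f} +/- {half_width:.2f}")
```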

The introduction of a new edge-based spatial frequency response (e-SFR) feature, known as the slanted star, in ISO 12233:2023 marks a significant change to the standard. Compared with the previously used slanted square, this feature offers four additional edge orientations. These enable measurement of sagittal and tangential spatial frequency response (SFR) in addition to SFR derived from vertical and horizontal edges. However, the additional edges make it harder to reliably automate the placement of appropriate regions of interest (ROIs) for e-SFR analysis, which complicates accurate comparison of resolution across orientations. This paper addresses these challenges by providing recommendations for efficient and precise detection and analysis of the ISO 12233 slanted star feature. Our recommendations are based on thorough simulations and on experimental validation under diverse and challenging conditions.
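Automated slanted-star analysis must estimate the edge angle inside each ROI for every orientation. e-SFR uses this angle to project pixels onto an oversampled edge-spread function.

The sketch below follows the general approach of fitting a straight line to per-row centroids of the edge derivative. Unlike ISO 12233, it omits windowing. It also assumes a near-vertical edge, so other orientations would first be rotated or transposed. The function name and the synthetic test edge are hypothetical.

```python
import numpy as np


def estimate_edge_angle(roi):
    """Estimate the slant of a near-vertical edge in a 2-D ROI, in degrees.

    Per-row centroids of the horizontal derivative are fitted with a straight
    line; the slope (columns per row) gives the edge angle from vertical.
    """
    roi = np.asarray(roi, dtype=float)
    deriv = np.abs(np.diff(roi, axis=1))          # edge response in each row
    positions = np.arange(deriv.shape[1]) + 0.5   # derivative lies between pixels
    centroids = (deriv * positions).sum(axis=1) / deriv.sum(axis=1)
    rows = np.arange(roi.shape[0])
    slope, _intercept = np.polyfit(rows, centroids, 1)
    return float(np.degrees(np.arctan(slope)))


# Synthetic 5-degree edge, anti-aliased by pixel-area coverage.
rows = np.arange(64)[:, None]
cols = np.arange(40)[None, :]
edge_x = 20 + rows * np.tan(np.radians(5.0))
roi = np.clip(cols + 1 - edge_x, 0.0, 1.0)
print(estimate_edge_angle(roi))
```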