Panoramas are formed by stitching together two or more images of a scene viewed from different positions. Part of the solution to this stitching problem is ‘solving for the homography’, where the detail in one image is geometrically warped so that it appears in the coordinate frame of another. In this paper, we view the spectral loci of a given camera and of the human visual system (i.e. their respective chromaticity diagrams) as two pictures of the same ‘scene’ and warp one to the other by finding the best homography. When this geometric distortion makes the two loci identical, there exists a unique colour filter (which falls out of the derivation without further calculation) that makes the camera colorimetric (the camera+filter measures RGBs that are exactly linearly related to XYZs). When the best homography is not exact, the filter derived by this method still makes cameras approximately colorimetric. Experiments validate our method.
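A 3×3 linear transform of tristimulus values induces a homography on chromaticity coordinates. Warping one spectral locus onto the other can therefore be posed as a standard homography fit. The sketch below uses the textbook direct linear transform (DLT) solved with an SVD. It assumes that both loci are sampled at the same wavelengths, so that points correspond one-to-one. The function names are hypothetical, and the paper's own optimisation and filter derivation are not reproduced here.

```python
import numpy as np

def fit_homography(src, dst):
    """Fit H (3x3, normalised so H[2, 2] = 1) mapping src chromaticities to dst via the DLT."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # Least-squares solution: right singular vector for the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def apply_homography(h, pts):
    """Warp (N, 2) chromaticity points with H, including the perspective divide."""
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

# Synthetic check: recover a known homography from exact correspondences.
rng = np.random.default_rng(0)
h_true = np.array([[1.2, 0.1, 0.05], [0.0, 0.9, 0.02], [0.3, 0.1, 1.0]])
src = rng.uniform(0.05, 0.8, size=(30, 2))
dst = apply_homography(h_true, src)
h_fit = fit_homography(src, dst)
```

With real camera and CIE loci the fit will not be exact. The residual `np.linalg.norm(apply_homography(h_fit, src) - dst)` then indicates how far the camera is from being colorimetric.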

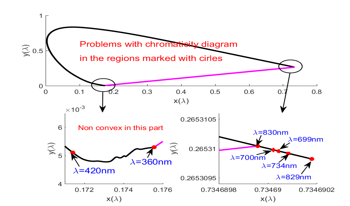

CIE colorimetry based on colour matching functions has been successfully applied in various industrial applications. In the past, it was generally accepted that the chromaticity diagram based on either the 1931 or the 1964 colour matching functions (CMFs) contains the chromaticity coordinates of all stimuli, which means that the domain (𝛺) enclosed by the spectrum locus and the purple line is convex. The chromaticity diagram is also used to define the Helmholtz coordinates (dominant wavelength and excitation purity). In this paper, these properties are evaluated for chromaticity coordinates based on the CIE 1931 and CIE 1964 CMFs, the CIE 2006 2- and 10-degree cone fundamentals (CFs), and the CMFs based on these cone fundamentals. It is found that the domain 𝛺 does not contain the chromaticity coordinates of all stimuli and that the spectral chromaticity coordinates are not arranged in wavelength order along the spectrum locus, so the Helmholtz coordinates cannot be determined for certain stimuli. Finally, modified CIE 1931 CMFs, CIE 1964 CMFs, CFs, and CF-based CMFs are derived.
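The convexity property discussed above can be checked numerically once the spectral chromaticities are available, for example x = X/(X+Y+Z) and y = Y/(X+Y+Z) computed from a CMF table. The sketch below treats the wavelength-ordered spectral points, closed by the purple line, as a polygon. It reports the polygon as convex only if every turn has the same sign and the total turning is exactly one revolution. The second condition catches loci that fold back on themselves when points are out of wavelength order. The function names and tolerances are hypothetical choices, and no real CMF data are included.

```python
import math

def chromaticity(X, Y, Z):
    """CIE-style (x, y) chromaticity from tristimulus values."""
    s = X + Y + Z
    return X / s, Y / s

def turning_angles(points):
    """Signed turn at each vertex of the closed polygon; the last point joins the first (purple line)."""
    n = len(points)
    angles = []
    for i in range(n):
        (x0, y0), (x1, y1), (x2, y2) = points[i - 1], points[i], points[(i + 1) % n]
        ax, ay = x1 - x0, y1 - y0
        bx, by = x2 - x1, y2 - y1
        angles.append(math.atan2(ax * by - ay * bx, ax * bx + ay * by))
    return angles

def is_convex_locus(points, tol=1e-9):
    """True if the ordered, closed polygon is convex and winds exactly once."""
    turns = [t for t in turning_angles(points) if abs(t) > tol]  # skip collinear steps
    one_sign = all(t > 0 for t in turns) or all(t < 0 for t in turns)
    one_turn = abs(abs(sum(turns)) - 2 * math.pi) < 1e-6
    return one_sign and one_turn
```

A concave locus fails the sign test. A locus visited out of order can turn consistently in one direction yet wind more than once, so it fails the winding test instead.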

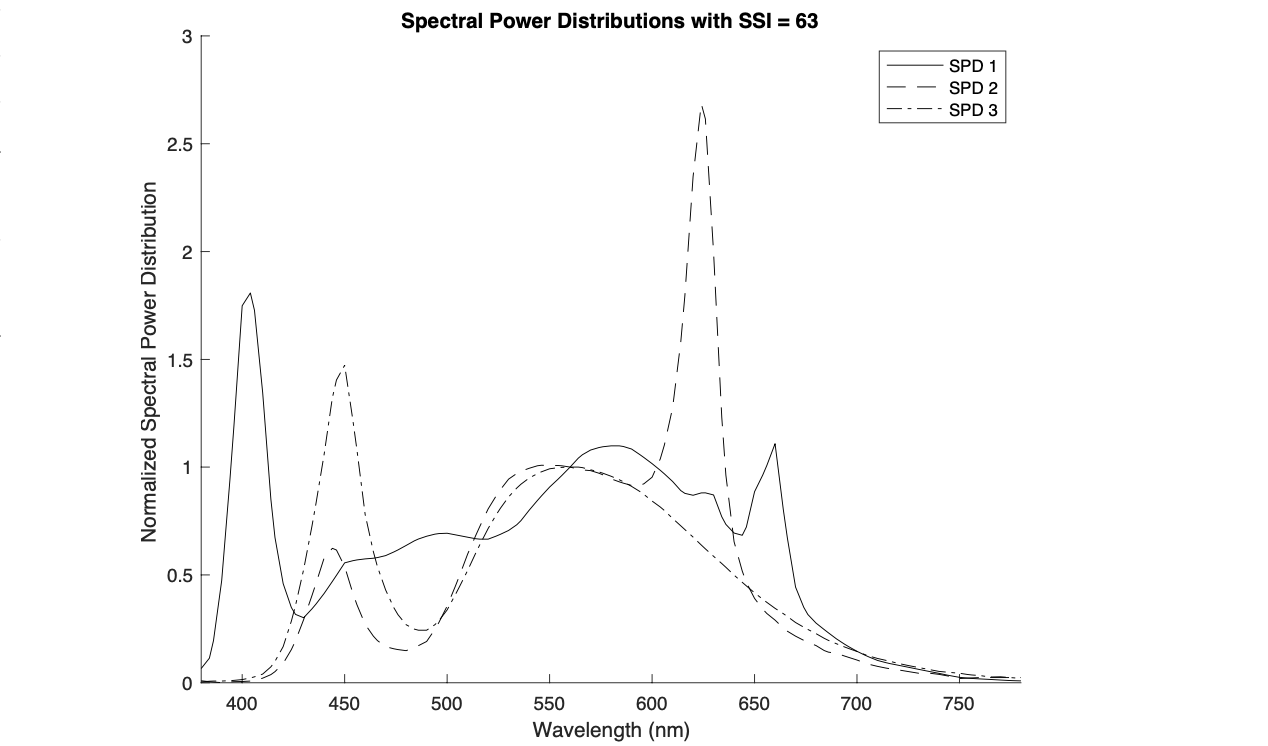

Consistent color rendering in motion pictures is critical for creating natural scenes that enhance storytelling and don’t distract the audience. In today’s production environments, it is common to use a wide variety of light sources: traditional tungsten-halogen sources, red, green, and blue light-emitting diode (RGB LED) sources, and white-light LEDs are often mixed, leading to color-rendering issues. This paper introduces a new metric, the Camera Lighting Metamer Index (CLMI), rooted in the concept of metamer mismatching. CLMI assesses the color-rendering differences between disparate sources when a single camera is used and its spectral sensitivities are known. Using the camera’s spectral sensitivities and the spectral power distributions (SPDs) of the light sources, CLMI quantifies the potential for color discrepancies between objects lit by the different sources. We propose that this metric can serve as a useful tool for cinematographers and visual effects artists, providing more predictable and precise control over color fidelity. The metric could also supplement existing generalized metrics, such as the Spectral Similarity Index (SSI), when detailed spectral characteristics of the camera and light sources are available.
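CLMI is built from the camera's spectral sensitivities and the SPDs of the light sources, but its formula is not given here. The sketch below therefore shows only the camera-response model such a metric rests on, ρ_k = Σ_λ S_k(λ) E(λ) R(λ), and compares one surface's white-normalized responses under two sources. The function names, the shared wavelength grid, and the per-channel white normalization (a simple von Kries-style white balance) are assumptions made for illustration. This is not the CLMI computation and performs no metamer-mismatch analysis.

```python
import numpy as np

def camera_rgb(sens, spd, refl):
    """Camera responses; sens is (N, 3), spd and refl are (N,) on the same wavelength grid."""
    return sens.T @ (spd * refl)

def white_balanced_rgb(sens, spd, refl):
    """Responses divided by those of a perfect white under the same source."""
    return camera_rgb(sens, spd, refl) / camera_rgb(sens, spd, np.ones_like(refl))

def source_discrepancy(sens, spd_a, spd_b, refl):
    """Euclidean difference between a surface's white-balanced responses under two sources."""
    return float(np.linalg.norm(white_balanced_rgb(sens, spd_a, refl)
                                - white_balanced_rgb(sens, spd_b, refl)))
```

Two sources with proportional SPDs give zero discrepancy after white balancing. Spectrally different sources, such as a spiky LED and a smooth tungsten-like source, generally do not.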

Several studies have proposed models to characterize the colorimetry of displays, most of which perform poorly for OLED displays. This is primarily because the colorimetry of OLED panels depends on the Average Pixel Level (APL) of the content displayed on them. In this study, a workflow is proposed to characterize the colorimetry of APL-dependent OLED panels based on the power consumption of the actual pixel content of the displayed scene. The method performed well: across 19 natural images, the mean of the per-image mean CIEDE2000 errors was 2.18 units.
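The reported figure is a mean of per-image means of CIEDE2000. The block below is a sketch of that aggregation. It implements the standard CIEDE2000 formula, with parametric factors kL = kC = kH = 1 by default, averages it within each image, and then averages across images. The function names, and the representation of each image as a list of (measured, predicted) CIELAB pairs, are assumptions. The paper's OLED characterization model itself is not reproduced.

```python
import math

def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 color difference between two CIELAB triples."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    g = 0.5 * (1 - math.sqrt(c_bar**7 / (c_bar**7 + 25**7)))
    a1p, a2p = (1 + g) * a1, (1 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360  # atan2(0, 0) gives 0 for neutrals
    h2p = math.degrees(math.atan2(b2, a2p)) % 360

    d_lp, d_cp = L2 - L1, c2p - c1p
    if c1p * c2p == 0:
        d_hp = 0.0
    else:
        d_hp = h2p - h1p
        if d_hp > 180:
            d_hp -= 360
        elif d_hp < -180:
            d_hp += 360
    d_Hp = 2 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_hp) / 2)

    l_bar, cp_bar = (L1 + L2) / 2, (c1p + c2p) / 2
    if c1p * c2p == 0:
        hp_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        hp_bar = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        hp_bar = (h1p + h2p + 360) / 2
    else:
        hp_bar = (h1p + h2p - 360) / 2

    t = (1 - 0.17 * math.cos(math.radians(hp_bar - 30))
         + 0.24 * math.cos(math.radians(2 * hp_bar))
         + 0.32 * math.cos(math.radians(3 * hp_bar + 6))
         - 0.20 * math.cos(math.radians(4 * hp_bar - 63)))
    d_theta = 30 * math.exp(-(((hp_bar - 275) / 25) ** 2))
    r_c = 2 * math.sqrt(cp_bar**7 / (cp_bar**7 + 25**7))
    s_l = 1 + 0.015 * (l_bar - 50) ** 2 / math.sqrt(20 + (l_bar - 50) ** 2)
    s_c = 1 + 0.045 * cp_bar
    s_h = 1 + 0.015 * cp_bar * t
    r_t = -math.sin(math.radians(2 * d_theta)) * r_c

    dl, dc, dh = d_lp / (kL * s_l), d_cp / (kC * s_c), d_Hp / (kH * s_h)
    return math.sqrt(dl**2 + dc**2 + dh**2 + r_t * dc * dh)

def mean_of_means(images):
    """images: one list per image of (measured_lab, predicted_lab) pairs."""
    per_image = [sum(ciede2000(m, p) for m, p in pairs) / len(pairs) for pairs in images]
    return sum(per_image) / len(per_image)
```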

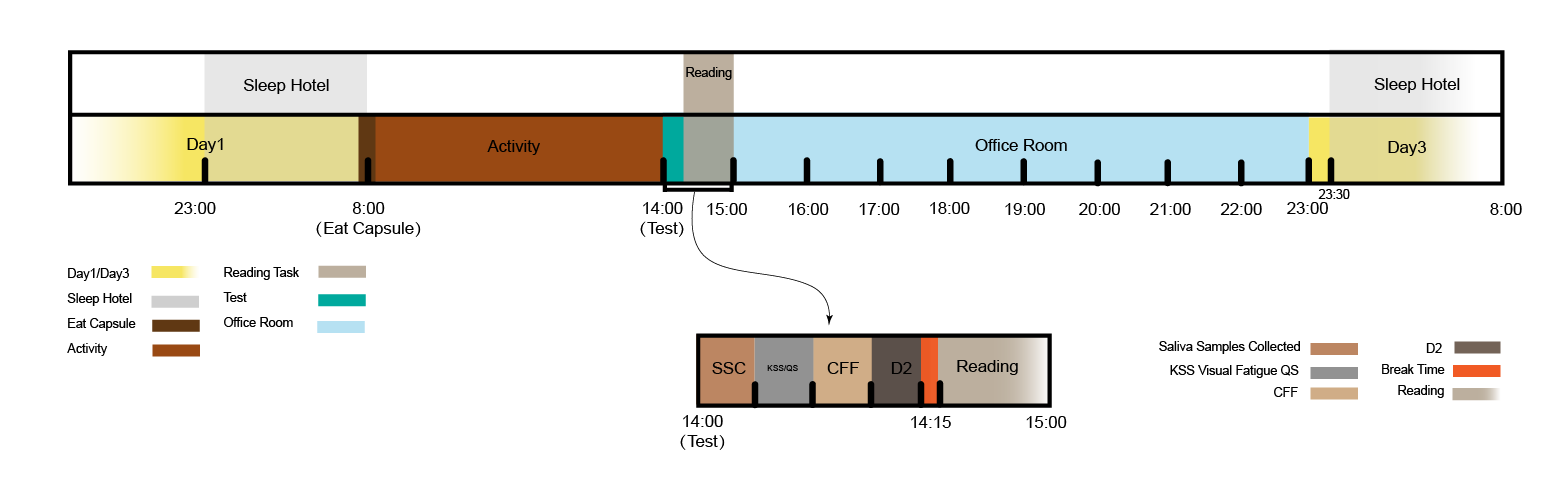

As displays become ubiquitous and increasingly integrated into daily life, their impact on human health is a major concern for academia and industry. The aim of this study was to investigate the effect of display backgrounds with different Correlated Colour Temperature (CCT) and Circadian Stimulus (CS) settings on human circadian rhythms and visual fatigue. Twelve participants underwent four 9-hour display lighting interventions over a 10-day period: S1 (CCT at 4000 K; CS from 0.29 to 0.15), S2 (CS at 0.2; CCT from 6500 K to 4000 K), S3 (CCT from 6500 K to 4000 K; CS from 0.30 to 0.15), and the static S4 (CCT at 4000 K; CS at 0.2). Participants' melatonin levels, visual fatigue, cognitive performance, sleep quality, and 24-hour core body temperature were monitored. The results showed that S4 was the most circadian-friendly condition, with the least visual fatigue and the best sleep quality. In addition, the S3 intervention resulted in the lowest nighttime alertness. Therefore, static display backgrounds with low CCT and CS appear to be more beneficial for circadian health than dynamic display backgrounds. Furthermore, the results of several statistical tests showed that CS has a greater effect on circadian rhythm than CCT.
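The interventions above are specified partly in CCT. A reader may want to check the CCT of a measured display white. The sketch below uses McCamy's well-known cubic approximation from CIE 1931 (x, y) chromaticity. This is a swapped-in approximation that is only meaningful for chromaticities close to the Planckian locus; the study's own CCT method is not stated. The Circadian Stimulus model is not reproduced, and the function names are hypothetical.

```python
def xy_from_xyz(X, Y, Z):
    """CIE 1931 (x, y) chromaticity from measured tristimulus values."""
    s = X + Y + Z
    return X / s, Y / s

def mccamy_cct(x, y):
    """Approximate CCT in kelvin (McCamy's cubic); only valid near the Planckian locus."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33
```

For the D65 chromaticity (0.3127, 0.3290), `mccamy_cct` returns roughly 6505 K, consistent with D65's nominal 6500 K.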

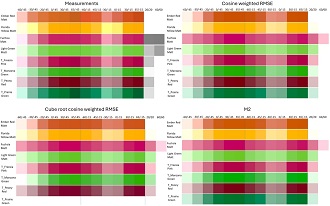

The Bidirectional Reflectance Distribution Function (BRDF) is one of the tools for characterising the appearance of real-world materials. However, bidirectional reflectance measurements and data processing can be time-consuming and challenging. This paper aims to estimate the BRDF values of eight matt samples using two portable, handheld devices, one for diffuse and one for specular reflectance measurements. The data are fitted to the Cook-Torrance BRDF model in the spectral domain using three different cost functions to obtain the optimised parameters and the estimated spectral BRDF values. The estimated BRDF is evaluated using a colour-difference metric. The results show that the spectral BRDF of a sample could be estimated from measurements by two simple devices with fewer angle combinations for both the diffuse and specular measurements. This shortens measurement and processing time and reduces storage requirements while still yielding spectral BRDF estimates. Moreover, the cube-root cosine-weighted RMSE cost function gives more consistent colour reproduction by the fitted BRDF model than the other cost functions.
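To make the fitted model concrete, the sketch below evaluates a common Cook-Torrance variant spectrally: a Lambertian term plus Beckmann distribution × Schlick Fresnel × the original Cook-Torrance geometric attenuation, divided by 4(n·l)(n·v). The paper's exact variant and parameterisation are not stated here, so these component choices are assumptions. The cost function `cbrt_cos_rmse` is likewise a hypothetical reading of a "cube-root cosine-weighted RMSE" and not the authors' definition. A fitter such as a least-squares optimiser would minimise such a cost over `rho_d`, `f0`, and `m`.

```python
import numpy as np

def _unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def cook_torrance(l, v, n, rho_d, f0, m):
    """Spectral BRDF; rho_d and f0 are per-wavelength arrays, m is the Beckmann roughness."""
    l, v, n = _unit(l), _unit(v), _unit(n)
    rho_d, f0 = np.asarray(rho_d, float), np.asarray(f0, float)
    nl, nv = n @ l, n @ v
    if nl <= 0 or nv <= 0:
        return np.zeros_like(rho_d)  # light or viewer below the surface
    h = _unit(l + v)
    nh, vh = n @ h, v @ h
    tan2 = (1 - nh**2) / nh**2
    d = np.exp(-tan2 / m**2) / (np.pi * m**2 * nh**4)       # Beckmann distribution
    g = min(1.0, 2 * nh * nv / vh, 2 * nh * nl / vh)        # geometric attenuation
    f = f0 + (1 - f0) * (1 - vh) ** 5                       # Schlick Fresnel
    return rho_d / np.pi + d * f * g / (4 * nl * nv)

def cbrt_cos_rmse(measured, predicted, cos_theta_i):
    """ASSUMED cube-root, cosine-weighted RMSE over (geometries, wavelengths) arrays."""
    w = np.asarray(cos_theta_i, float)[:, None]
    diff = np.cbrt(np.asarray(measured) * w) - np.cbrt(np.asarray(predicted) * w)
    return float(np.sqrt(np.mean(diff**2)))
```

At normal incidence and viewing, the specular term reduces to F0 / (4π m²). The model is also reciprocal, giving the same value when `l` and `v` are swapped.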

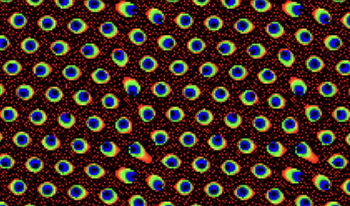

Pixel-wise color difference metrics like ΔE00 have long been used in image analysis, but it remains unclear how their scores should be integrated over space. To investigate this, a psychophysical experiment was conducted to characterize visual sensitivity to differences in chromatic noise patterns in different color and pattern contexts. The results demonstrated that observers were more sensitive to chromatic noise pattern (CNP) differences when similar colors were spatially dispersed over the pattern than when they were clustered. Further analysis with common image color and texture difference metrics showed that none of them were sensitive to this effect. This finding highlights the need for metrics that capture the perceptual interaction between color and texture.
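The pooling problem can be illustrated with the simplest spatial integration, averaging a per-pixel color-difference map. The sketch below uses CIE76 ΔE*ab for brevity rather than ΔE00, and the function names are hypothetical. It builds two patterns from the same pixel colors, one with the colors clustered in halves and one with them dispersed in a checkerboard. Against a uniform reference, mean pooling gives both patterns the same score, up to floating-point rounding, because it ignores spatial arrangement.

```python
import numpy as np

def delta_e76_map(lab_a, lab_b):
    """Per-pixel CIE76 color difference for (H, W, 3) CIELAB images."""
    return np.linalg.norm(np.asarray(lab_a, float) - np.asarray(lab_b, float), axis=-1)

def mean_pooled_delta_e(lab_a, lab_b):
    """Spatial pooling by plain averaging; where the differences occur is ignored."""
    return float(delta_e76_map(lab_a, lab_b).mean())

def two_color_patterns(color_a, color_b, size=8):
    """Same pixel colors arranged as clustered halves vs. a dispersed checkerboard."""
    clustered = np.empty((size, size, 3))
    clustered[:, : size // 2] = color_a
    clustered[:, size // 2 :] = color_b
    checker = np.indices((size, size)).sum(axis=0) % 2 == 0
    dispersed = np.where(checker[..., None], color_a, color_b).astype(float)
    return clustered, dispersed
```

Any metric built only from per-pixel values or color histograms scores the two arrangements identically in the same way.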

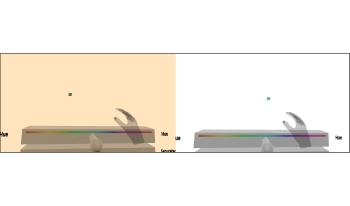

Corresponding colors data are necessary to evaluate and improve chromatic adaptation models. Virtual reality (VR) technologies offer a promising and flexible tool for studying chromatic adaptation. This work revisits the classic technique of haploscopic matching to collect corresponding colors data using VR. A virtual environment was designed in which the same visual scene was lit by light of a different chromaticity for each eye, and an exploratory study was conducted, collecting corresponding colors data from 10 participants (5 color stimuli, 3 trials each). The average CIEDE2000 standard deviation for individual observers across the 5 colors was 2.94, showing adequate precision despite the small number of repetitions. This work represents a proof of concept for an efficient and realistic VR haploscopic matching paradigm, which may be extended by future studies employing display color characterization and greater numbers of participants and trials.
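Corresponding colors data like these are what chromatic adaptation models are tested against. As an illustration only, the sketch below predicts a corresponding color with a von Kries transform in the CAT02 space used by CIECAM02. It is not necessarily the model evaluated in this work. It assumes complete adaptation (D = 1) and XYZ scaled so that the white has Y = 100. The function name is hypothetical.

```python
import numpy as np

# CAT02 matrix: XYZ to sharpened cone-like responses (as in CIECAM02).
M_CAT02 = np.array([[0.7328, 0.4296, -0.1624],
                    [-0.7036, 1.6975, 0.0061],
                    [0.0030, 0.0136, 0.9834]])

def corresponding_xyz(xyz, white_src, white_dst):
    """Von Kries scaling in CAT02 space with complete adaptation (D = 1)."""
    lms = M_CAT02 @ np.asarray(xyz, float)
    gain = (M_CAT02 @ np.asarray(white_dst, float)) / (M_CAT02 @ np.asarray(white_src, float))
    return np.linalg.solve(M_CAT02, gain * lms)

D65 = [95.047, 100.0, 108.883]
ILLUM_A = [109.850, 100.0, 35.585]
```

Comparing such predictions with the colors observers actually matched in the other eye (e.g. with CIEDE2000) is one way the collected data could be used to rank adaptation models.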

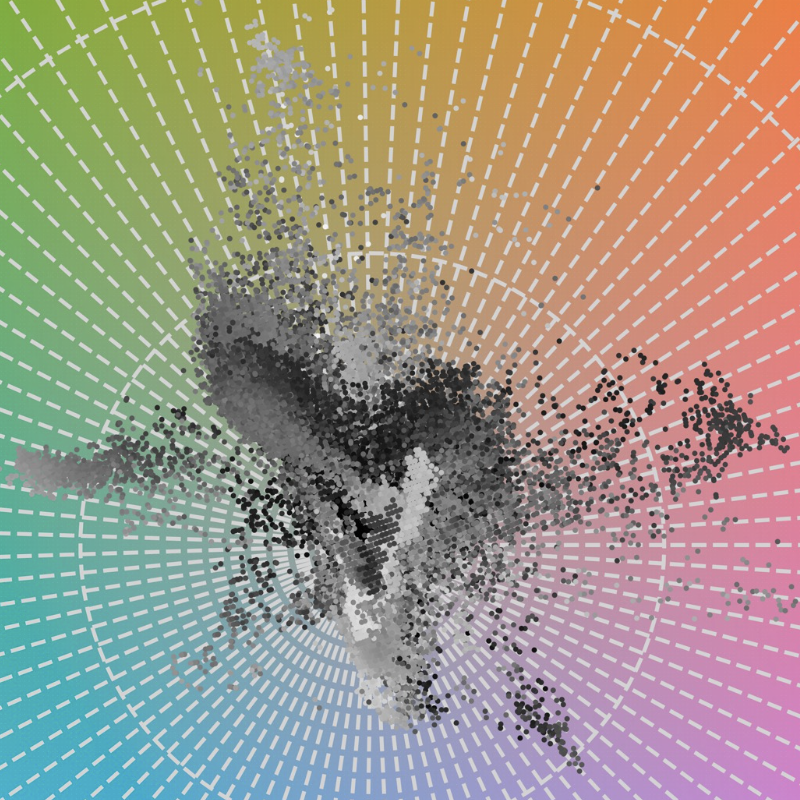

Neural image compression employs deep neural networks and generative models to achieve impressive compression rates and reconstruction quality compared to traditional signal-processing-based compression algorithms such as JPEG. However, the color artifacts that arise in an image as the amount of compression increases have not been formally analyzed for neural compression algorithms. This paper provides an initial investigation into the degradation of color when images are compressed at comparable bit rates using lossy neural image compression and variants of JPEG. Our findings indicate that neural image compression degrades color more gracefully than JPEG, JPEG 2000, and JPEG XL.
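Comparing codecs at comparable bit rates requires a common rate measure and a color-error measure. The sketch below computes bits per pixel from the compressed file size, together with a mean pixel-wise CIELAB difference between the original and decoded sRGB images. It assumes 8-bit sRGB input, a D65 white derived from the sRGB matrix, and the simple CIE76 ΔE*ab rather than any metric used in the paper. Decoding the compressed files (JPEG, JPEG 2000, JPEG XL, or a neural codec) is left out, and the function names are hypothetical.

```python
import numpy as np

# Linear sRGB (D65) to XYZ; the white point is the image of RGB = (1, 1, 1).
SRGB_TO_XYZ = np.array([[0.4124, 0.3576, 0.1805],
                        [0.2126, 0.7152, 0.0722],
                        [0.0193, 0.1192, 0.9505]])
WHITE = SRGB_TO_XYZ @ np.ones(3)

def srgb_to_lab(rgb8):
    """8-bit sRGB array (..., 3) to CIELAB (..., 3)."""
    c = np.asarray(rgb8, float) / 255.0
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)  # undo sRGB gamma
    xyz = lin @ SRGB_TO_XYZ.T / WHITE
    delta = 6 / 29
    f = np.where(xyz > delta**3, np.cbrt(xyz), xyz / (3 * delta**2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)

def bits_per_pixel(n_bytes, width, height):
    """Compressed size expressed as a bit rate for matching codecs."""
    return 8 * n_bytes / (width * height)

def mean_color_error(orig_rgb8, decoded_rgb8):
    """Mean CIE76 color difference between original and decoded images."""
    d = srgb_to_lab(orig_rgb8) - srgb_to_lab(decoded_rgb8)
    return float(np.linalg.norm(d, axis=-1).mean())
```

In a comparison like the one described, each codec's quality setting would be chosen so that `bits_per_pixel` values match as closely as possible before the color errors are compared.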