A design challenge in virtual reality (VR) is balancing users’ freedom to explore the virtual environment with the constraints of a guidance interface that focuses their attention without breaking the sense of immersion or encroaching on their freedom. In virtual exhibitions in which users may explore and engage with content freely, the design of guidance cues plays a critical role. This research explored the effectiveness of three different attention guidance cues in a scavenger-hunt-style multiple visual search task: an extended field of view through a rearview mirror (passive guidance), audio alerts (active guidance), and haptic alerts (active guidance) as well as a fourth control condition with no guidance. Participants were tasked with visually searching for seven specific paintings in a virtual rendering of the Louvre Museum. Performance was evaluated through qualitative surveys and two quantitative metrics: the frequency with which users checked the task list of seven paintings and the total time to complete the task. The results indicated that haptic and audio cues were significantly more effective at reducing the frequency of checking the task list when compared to the control condition while the rearview mirror was the least effective. Unexpectedly, none of the cues significantly reduced the task-completion time. The insights from this research provide VR designers with guidelines for constructing more responsive virtual exhibitions using seamless attentional guidance systems that enhance user experience and interaction in VR environments.

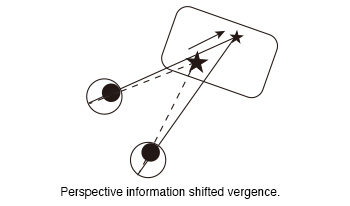

The vergence of subjects was measured while they observed 360-degree images in a virtual reality (VR) goggle. In our previous experiment, we observed a shift in vergence in response to the perspective information presented in 360-degree images when static targets were displayed within them. The aim of this study was to investigate whether a moving target that an observer was gazing at could also guide their vergence. We measured vergence when subjects viewed moving targets in 360-degree images. In the experiment, the subjects were instructed to gaze at a ball displayed in the 360-degree images while wearing the VR goggle. Two different paths were generated for the ball. On the first, the ball approached the subjects from a distance (Near path). On the second, the ball moved while remaining at a distance from the subjects (Distant path). Two conditions were set for the moving distance (Short and Long). The moving distance of the left ball in the Long condition was twice that in the Short condition. These factors were combined to create four conditions (Near Short, Near Long, Distant Short and Distant Long). In addition, two movement times (5 s and 10 s) were used in the Short conditions only; the movement time in the Long conditions was always 10 s. In total, six conditions were created. The results demonstrated that vergence was larger when the ball was close to the subjects than when it was at a distance. That is, the perspective information of the 360-degree images shifted the subjects’ vergence. This suggests that the perspective information of the images provided observers with high-quality depth information that guided their vergence toward the target position. Furthermore, this effect was observed not only for static targets, but also for moving targets.
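The relation between target distance and vergence reported above can be illustrated with the standard geometry for symmetric fixation, where the vergence angle is 2·atan(IPD / 2d). The following is a minimal sketch of that geometry only, not the authors' measurement procedure; the function name `vergence_deg` and the default interpupillary distance of 63 mm are assumptions made for illustration.

```python
import math


def vergence_deg(distance_m, ipd_m=0.063):
    """Vergence angle in degrees for a target fixated straight ahead.

    distance_m: distance from the eyes to the target, in metres.
    ipd_m: interpupillary distance in metres (0.063 is an assumed value).
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    # Each eye rotates inward by atan((ipd / 2) / distance); vergence is the sum.
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


if __name__ == "__main__":
    # Hypothetical distances: a nearer target yields a larger vergence angle.
    for d in (0.5, 1.0, 5.0):
        print(f"{d:4.1f} m -> {vergence_deg(d):.2f} deg")
```

Under this geometry the angle falls off roughly as 1/d, so an approaching target produces a steadily increasing vergence demand.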

In interaction that fuses virtual and real environments, accurately estimating and mapping real-world objects to their corresponding virtual counterparts is crucial for enhancing the overall interaction experience. This paper focuses on the pose estimation of real-world targets within this fusion context. To address the challenge of achieving precise pose estimation from single-view RGB images captured by basic devices, a high-resolution heatmap regression method is proposed. This algorithm strikes a balance between accuracy and complexity. To tackle issues stemming from inadequate utilization of semantic information in feature maps during heatmap regression, a lightweight content-aware upsampling method is introduced. Additionally, to mitigate the resolution and accuracy loss that quantization errors in keypoints predicted on the heatmap cause during pose calculation, a keypoint optimization module is presented that incorporates Gaussian dimensionality reduction and a pose estimation strategy based on high-confidence keypoints. Quantitative experimental results demonstrate that this method outperforms comparative algorithms on the LINEMOD dataset, achieving an accuracy rate of 85.7% based on the average distance index. Qualitative experiments further illustrate precise real-to-virtual space pose estimation and mapping in interactive scene applications.
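The average distance index mentioned above is commonly computed as the mean distance between model points transformed by the ground-truth pose and by the predicted pose. The sketch below assumes that common definition, together with the widely used convention that a pose counts as correct when this distance is below 10% of the object diameter; the abstract does not state the threshold, so `frac=0.1` and all function names are assumptions.

```python
import math


def transform(R, t, p):
    """Apply rotation R (3x3 nested lists) and translation t to point p."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def average_distance(points, R_gt, t_gt, R_pred, t_pred):
    """Mean distance between model points under the two poses."""
    total = 0.0
    for p in points:
        total += math.dist(transform(R_gt, t_gt, p), transform(R_pred, t_pred, p))
    return total / len(points)


def pose_is_correct(avg_dist, diameter, frac=0.1):
    """Assumed convention: correct if the distance is below frac * diameter."""
    return avg_dist < frac * diameter


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Hypothetical model: corners of a 10 cm cube, predicted pose off by 1 cm in x.
    cube = [(x, y, z) for x in (0.0, 0.1) for y in (0.0, 0.1) for z in (0.0, 0.1)]
    d = average_distance(cube, identity, (0.0, 0.0, 0.0), identity, (0.01, 0.0, 0.0))
    print(d, pose_is_correct(d, diameter=math.dist(cube[0], cube[-1])))
```

An accuracy rate such as the one reported would then be the fraction of test images for which `pose_is_correct` holds.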

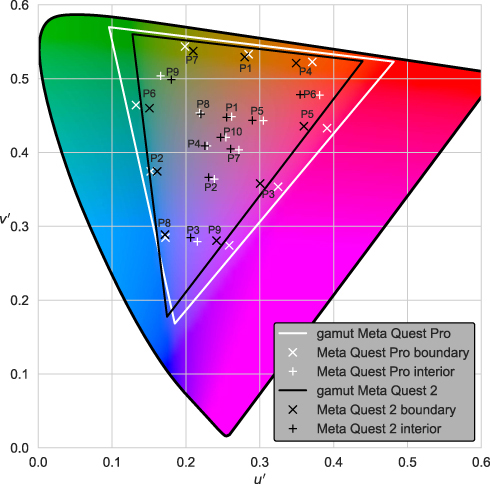

The utility and ubiquity of virtual reality make it an extremely popular tool in various scientific research areas. Owing to its ability to present naturalistic scenes in a controlled manner, virtual reality may be an effective option for conducting color science experiments and studying different aspects of color perception. However, head mounted displays have their limitations, and investigators should choose a display device that meets the colorimetric requirements of their color science experiments. This paper presents a structured method to characterize the colorimetric profile of a head mounted display with the aid of color characterization models. By way of example, two commercially available head mounted displays (Meta Quest 2 and Meta Quest Pro) are characterized using four models (Look-up Table, Polynomial Regression, Artificial Neural Network, and Gain Gamma Offset), and the appropriateness of each of these models is investigated.
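Of the four models named above, the Gain Gamma Offset model is the simplest to write down: each channel's normalized digital value d is linearized as (gain·d + offset)^gamma, and the linear channel values are then mixed through a 3×3 matrix of primaries to give tristimulus values. The sketch below shows that general form only; the parameter values and the matrix are placeholders (the matrix is the standard sRGB-to-XYZ matrix, not a measurement of either headset), and the function names are assumptions.

```python
def gog_linearize(d, gain, offset, gamma):
    """Gain-gamma-offset transfer for one channel; d is a digital value in [0, 1]."""
    base = gain * d + offset
    # Negative bases have no physical meaning here, so they are clamped to zero.
    return base ** gamma if base > 0.0 else 0.0


def rgb_to_xyz(rgb, params, primaries):
    """Map normalized RGB to XYZ using per-channel GOG parameters and a 3x3 matrix."""
    linear = [gog_linearize(d, *p) for d, p in zip(rgb, params)]
    return tuple(sum(primaries[i][j] * linear[j] for j in range(3)) for i in range(3))


# Placeholder values for illustration only, not fitted to any real display.
PARAMS = [(1.0, 0.0, 2.2)] * 3          # (gain, offset, gamma) per channel
SRGB_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]

if __name__ == "__main__":
    print(rgb_to_xyz((1.0, 1.0, 1.0), PARAMS, SRGB_TO_XYZ))
    print(rgb_to_xyz((0.5, 0.5, 0.5), PARAMS, SRGB_TO_XYZ))
```

In a real characterization, the gain, offset, and gamma of each channel and the matrix entries would be fitted to colorimeter or spectroradiometer measurements of the display.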

In this paper, I present the proposal of a virtual reality subjective experiment to be performed at Texas State University, which is part of the VQEG-IMG test plan for the definition of a new recommendation for subjective assessment of eXtended Reality (XR) communications (work item ITU-T P.IXC). More specifically, I discuss the challenges of estimating the user quality of experience (QoE) for immersive applications and detail the VQEG-IMG test plan tasks for XR subjective QoE assessment. I also describe the experimental choices of the audio-visual experiment to be performed at Texas State University, which has the goal of comparing two possible scenarios for teleconference meetings: a virtual reality representation and a realistic representation.

Concerns about head mounted displays have led to numerous studies of their potential impact on the visual system. Yet none have investigated whether the use of Virtual Reality (VR) Head Mounted Displays, with their reduced field of view and visually demanding environment, could reduce the spatial spread of the attentional window. To address this question, we measured the useful field of vision in 16 participants right before they played a VR game for 30 minutes and immediately afterwards. The test calculates the presentation time threshold necessary for efficient perception of a target presented in the centre of the visual field and a target presented in the periphery. It consists of three subtests of increasing difficulty. Data comparison did not show a significant difference between the pre-VR and post-VR sessions (subtest 2: F(1,11) = .7, p = .44; subtest 3: F(1,11) = .9, p = .38). However, participants’ performance for central target perception decreased in the most demanding subtest (F(1,11) = 8.1, p = .02). This result suggests that changes in spatial attention could be possible after prolonged VR exposure.

At present, research on emotion in virtual environments is largely limited to subjective measures, and very few studies are based on objective physiological signals. In this article, the authors conducted a user experiment to study the emotional experience of virtual reality (VR) users by comparing subjective feelings and physiological data in VR and two-dimensional display (2D) environments. First, they analyzed data from self-report questionnaires, including the Self-Assessment Manikin (SAM), the Positive And Negative Affect Schedule (PANAS) and the Simulator Sickness Questionnaire (SSQ). The results indicated that VR causes a higher level of arousal than 2D and more easily evokes positive emotions. Both 2D and VR environments are prone to causing eye fatigue, but VR is more likely to cause symptoms of dizziness and vertigo. Second, they compared electrocardiogram (ECG), skin temperature (SKT) and electrodermal activity (EDA) signals between the two environments. Mathematical analysis showed that all three signals differed significantly. Participants in the VR environment had a higher degree of excitement, and their mood fluctuations were more frequent and more intense. In addition, the authors used different machine learning models for emotion detection and compared the accuracies on the VR and 2D datasets. The accuracies of all algorithms were higher in the VR environment than in 2D, which corroborated that the volunteers in the VR environment had more pronounced electrodermal signals and a stronger sense of immersion. This article addresses gaps in existing work. The authors first used objective physiological signals for experience evaluation and used different types of subjective measures for comparison. They hope their study can provide helpful guidance for the engineering practice of virtual reality.

Modern virtual reality (VR) headsets use lenses that distort the visual field, typically with distortion increasing with eccentricity. While content is pre-warped to counter this radial distortion, residual image distortions remain. Here we examine the extent to which such residual distortion impacts the perception of surface slant. In Experiment 1, we presented slanted surfaces in a head-mounted display and observers estimated the local surface slant at different locations. In Experiments 2 (slant estimation) and 3 (slant discrimination), we presented stimuli on a mirror stereoscope, which allowed us to more precisely control viewing and distortion parameters. Taken together, our results show that radial distortion has a significant impact on perceived surface attitude, even following correction. Of the distortion levels we tested, 5% distortion results in significantly underestimated and less precise slant estimates relative to distortion-free surfaces. In contrast, Experiment 3 reveals that a level of 1% distortion is insufficient to produce significant changes in slant perception. Our results highlight the importance of adequately modeling and correcting lens distortion to improve VR user experience.

We analyzed the impact of common stereoscopic three-dimensional (S3D) depth distortion on S3D optic flow in virtual reality environments. The depth distortion is introduced by mismatches between the image acquisition and display parameters. The results show that such S3D distortions induce large S3D optic flow distortions and may even induce partial or full optic flow reversal within a certain depth range, depending on the viewer’s moving speed and the magnitude of S3D distortion. We hypothesize that the S3D optic flow distortion may give rise to an intra-sensory conflict that could be a source of visually induced motion sickness.

This paper describes a comparison of the user experience of virtual reality (VR) image formats. The authors prepared the following four conditions and evaluated the user experience while viewing VR images with a headset by measuring subjective and objective indices: Condition 1: monoscopic 180-degree image, Condition 2: stereoscopic 180-degree image, Condition 3: monoscopic 360-degree image, Condition 4: stereoscopic 360-degree image. In the results of the subjective indices (reality, presence, and depth sensation), Condition 4 was rated highest, and Conditions 2 and 3 were rated to the same extent. In addition, the results of the objective indices (eye and head tracking) showed a tendency for head movement to be suppressed with 180-degree images.