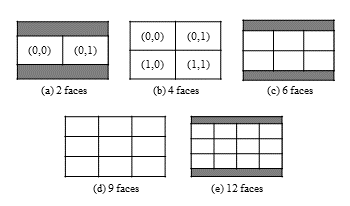

The quality of web conferencing services often degrades with network quality. Parametric video quality-estimation techniques are essential for detecting this degradation because they can estimate the quality of the video displayed to each user from information about video encoding. To study these techniques, we have to consider display layouts such as single and grid views, which are unique to web conferencing video. We therefore investigated the stability of subjective evaluations for both views and showed that evaluations of grid views are less stable because the face images that make up the display are smaller and the quality distributions are wider.

Current state-of-the-art pixel-based video quality models for 4K resolution do not have access to explicit meta-information such as resolution and framerate, and may not include implicit or explicit features that model the related effects on perceived video quality. In this paper, we propose a meta concept to extend state-of-the-art pixel-based models and develop hybrid models that incorporate meta-data such as framerate and resolution. Our general approach uses machine learning to incorporate the meta-data into the overall video quality prediction. To this end, we evaluate various machine learning approaches, such as SVR, random forest, and extreme gradient boosting trees, in terms of their suitability for hybrid model development. We use VMAF to demonstrate the validity of the meta-information concept. Our approach was tested on the publicly available AVT-VQDB-UHD-1 dataset. We show an increase in prediction accuracy for the hybrid models compared with the underlying pixel-based model. While the proof of concept is applied to VMAF, it can also be used with other pixel-based models.
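The hybrid idea above can be sketched as a feature vector that combines a pixel-based score with framerate and resolution and is then fed to a regressor. The following is a minimal, dependency-free illustration only: it swaps in a tiny k-nearest-neighbour regressor in place of the SVR, random forest, or gradient-boosted trees named in the abstract. The function names, the normalisation constants (60 fps, 2160 lines), and all training values are assumptions invented for illustration; none are taken from AVT-VQDB-UHD-1.

```python
from math import dist


def make_features(pixel_score, framerate, height):
    """Build a hybrid feature vector: pixel-based score plus meta-data.

    Assumed scaling: score on a 0-100 range (as VMAF uses), framerate
    relative to 60 fps, height relative to 2160 lines. These constants
    are illustrative choices, not part of the original work.
    """
    return (pixel_score / 100.0, framerate / 60.0, height / 2160.0)


def knn_predict(training_rows, query, k=3):
    """Predict a MOS as the mean rating of the k nearest training rows.

    A stand-in regressor; the abstract evaluates SVR, random forest,
    and extreme gradient boosting trees instead.
    """
    nearest = sorted(training_rows, key=lambda row: dist(row[0], query))[:k]
    return sum(mos for _, mos in nearest) / len(nearest)


# Toy (features, MOS) pairs, invented purely for illustration.
training_rows = [
    (make_features(95.0, 60.0, 2160), 4.6),
    (make_features(90.0, 30.0, 2160), 4.1),
    (make_features(80.0, 60.0, 1080), 3.8),
    (make_features(70.0, 30.0, 1080), 3.2),
    (make_features(50.0, 30.0, 720), 2.4),
    (make_features(30.0, 15.0, 360), 1.5),
]

query = make_features(85.0, 60.0, 1080)
predicted_mos = knn_predict(training_rows, query, k=3)
print(f"predicted MOS: {predicted_mos:.2f}")
```

In a real setting the regressor would be trained on subjective ratings and compared against the pixel-based score alone, for example in terms of correlation and RMSE.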

Research on the Quality of Experience (QoE) of 2D video streaming is well established. However, a new video format is emerging and gaining popularity and availability: VR 360-degree video. The processing and transmission of 360-degree videos bring new challenges, such as large bandwidth requirements and the occurrence of different distortions. The viewing experience is also substantially different from 2D video: it offers more interactive freedom over the viewing angle but can also be more demanding and cause cybersickness. Further research specifically on the QoE of 360-degree videos is thus required. The goal of this study is to complement earlier research (Tran, Ngoc, Pham, Jung, and Thank, 2017) by testing the effects of quality degradation, freezing, and content on the QoE of 360-degree videos. Data will be gathered through subjective tests in which participants watch degraded versions of 360-degree videos through an HMD. After each video, they will answer questions regarding their quality perception, experience, perceptual load, and cybersickness. Results of the first part show rather low QoE ratings overall, which decrease further as quality is degraded and freezing events are added. Cybersickness was found not to be an issue.

This paper describes ongoing work within the Video Quality Experts Group (VQEG) to develop no-reference (NR) audiovisual video quality analysis (VQA) metrics. VQEG provides an open forum that encourages knowledge sharing and collaboration. The goal of the VQEG No-Reference Metric (NORM) group is to develop open-source NR-VQA metrics that meet industry requirements for scope, accuracy, and capability. This paper presents industry specifications from discussions at VQEG face-to-face meetings among industry, academic, and government participants. This paper also announces an open software framework for collaborative development of NR image quality analysis (IQA) and VQA metrics (<https://github.com/NTIA/NRMetricFramework>). This framework includes the support tools necessary to begin research and avoid common mistakes. VQEG's goal is to produce a series of NR-VQA metrics with progressively improving scope and accuracy. This work draws upon and enables IQA metric research, as both use the human visual system to analyze the quality of audiovisual media on modern displays. Readers are invited to participate.

Video capture is becoming more and more widespread. Technical advances in consumer devices have led to improved video quality and to a variety of new use cases driven by social media and artificial intelligence applications. Device manufacturers and users alike need to be able to compare different cameras. These devices may be smartphones, automotive components, surveillance equipment, DSLRs, drones, action cameras, etc. While quality standards and measurement protocols exist for still images, there is still a need for measurement protocols for video quality. These need to include parts that are non-trivially adapted from photo protocols, particularly concerning the temporal aspects. This article presents a comprehensive hardware and software measurement protocol for the objective evaluation of the whole video acquisition and encoding pipeline, as well as its experimental validation.

Adaptive streaming is fast becoming the most widely used method for delivering video to end users over the internet. The ITU-T P.1203 standard is the first standardized quality of experience model for audiovisual HTTP-based adaptive streaming. This recommendation has been trained and validated for H.264 and resolutions up to and including full-HD. The paper provides an extension of the existing standardized short-term video quality model, mode 0, to new codecs, i.e., H.265, VP9, and AV1, and to resolutions larger than full-HD (e.g., UHD-1). The extension is based on two subjective video quality tests. In total, 13 different source contents of 10 seconds each were used in the tests. These sources were encoded at resolutions ranging from 360p to 2160p and at various quality levels using the H.265, VP9, and AV1 codecs. The subjective results from the two tests were then used to derive a mapping/correction function for P.1203.1 to handle new codecs and resolutions. It should be noted that the standardized model was not re-trained with the new subjective data; instead, only a mapping/correction function was derived from the two subjective test results so as to extend the existing standard to the new codecs and resolutions.