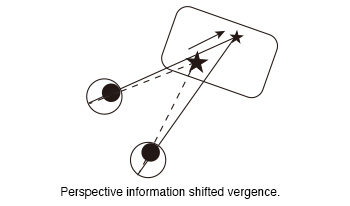

Subjects' vergence was measured while they viewed 360-degree images in a virtual reality (VR) goggle. In our previous experiment, we observed a shift in vergence in response to the perspective information in 360-degree images when static targets were displayed within them. The aim of this study was to investigate whether a moving target that an observer was gazing at could also guide the observer's vergence. We measured vergence while subjects viewed moving targets in 360-degree images. In the experiment, the subjects were instructed to gaze at a ball displayed in the 360-degree images while wearing the VR goggle. Two different paths were generated for the ball. On the first path, the ball approached the subjects from a distance (Near path). On the second path, the ball moved at a distance from the subjects (Distant path). Two conditions were set for the moving distance (Short and Long). The moving distance of the left ball in the Long condition was twice that in the Short condition. These factors were combined to create four conditions (Near Short, Near Long, Distant Short, and Distant Long). In addition, two movement times (5 s and 10 s) were used for the ball in the Short conditions only; the movement time in the Long conditions was always 10 s. In total, six conditions were created. The results showed that vergence was larger when the ball was close to the subjects than when it was at a distance. That is, the perspective information in the 360-degree images shifted the subjects' vergence. This suggests that the perspective information of the images provided observers with high-quality depth information that guided their vergence toward the target position. Furthermore, this effect was observed not only for static targets but also for moving targets.
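
The condition design described above can be enumerated in a few lines. The following is only an illustrative sketch: the names `PATHS`, `DISTANCES`, `DURATIONS_S`, and `build_conditions` are hypothetical and do not come from the study, which does not describe its software.

```python
from itertools import product

# Factor levels taken from the abstract; all identifiers are illustrative.
PATHS = ("Near", "Distant")
DISTANCES = ("Short", "Long")

# Movement times in seconds: Short allows 5 s and 10 s, Long is always 10 s.
DURATIONS_S = {"Short": (5, 10), "Long": (10,)}


def build_conditions():
    """Return every (path, distance, duration_s) combination in the design."""
    conditions = []
    for path, distance in product(PATHS, DISTANCES):
        for duration_s in DURATIONS_S[distance]:
            conditions.append((path, distance, duration_s))
    return conditions


if __name__ == "__main__":
    for path, distance, duration_s in build_conditions():
        print(f"{path} {distance}, {duration_s} s")
```

Crossing path with distance gives the four base conditions, and only the Short conditions are split by movement time, which is how the total reaches six.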

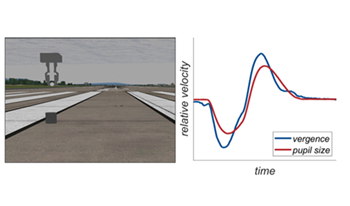

During natural viewing, the oculomotor system interacts with depth information through a tightly coupled linkage between convergence, accommodation, and pupil miosis known as the near response. When natural viewing breaks down, such as when depth distortions and cue conflicts are introduced in a stereoscopic remote vision system (sRVS), the individual elements of the near response may decouple (e.g., vergence-accommodation, or VA, mismatch), limiting the comfort and usability of the sRVS. Alternatively, in certain circumstances the elements of the near response may become more tightly linked, potentially to preserve image quality in the presence of significant distortion. In this experiment, we measured two elements of the near response (vergence posture and pupil size) of participants using an sRVS. We manipulated the degree of depth distortion by changing the viewing distance, which created a perceptual compression of the image space and increased the VA mismatch. We found a strong positive cross-correlation between vergence posture and pupil size in all participants in both viewing conditions. The response was significantly stronger and quicker in the near viewing condition, which may represent a physiological response to maintain image quality and increase the depth of focus through pupil miosis.
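
To make concrete what a lagged cross-correlation between two recorded signals measures, here is a minimal sketch. It assumes two equally sampled time series and uses NumPy. The function name `normalized_cross_correlation`, the lag range, and the synthetic signals are all hypothetical; the abstract does not specify the authors' actual analysis pipeline.

```python
import numpy as np


def normalized_cross_correlation(x, y, max_lag):
    """Correlate z-scored x with y over integer lags in [-max_lag, max_lag].

    A peak at a positive lag means y follows x by that many samples.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x = (x - x.mean()) / x.std()
    y = (y - y.mean()) / y.std()
    n = len(x)
    lags = np.arange(-max_lag, max_lag + 1)
    corr = np.empty(len(lags))
    for i, lag in enumerate(lags):
        if lag >= 0:
            # Pair x[t] with y[t + lag]
            corr[i] = np.mean(x[: n - lag] * y[lag:])
        else:
            # Pair x[t] with y[t + lag] for negative lag
            corr[i] = np.mean(x[-lag:] * y[: n + lag])
    return lags, corr


if __name__ == "__main__":
    # Synthetic stand-ins for the two traces (not data from the study):
    # the second signal is a copy of the first, delayed by 5 samples.
    rng = np.random.default_rng(0)
    vergence = rng.standard_normal(500)
    pupil = np.roll(vergence, 5)
    lags, corr = normalized_cross_correlation(vergence, pupil, max_lag=20)
    print("peak lag (samples):", lags[np.argmax(corr)])
```

In a sketch like this, the height of the correlation peak corresponds to the strength of the coupling and the lag at the peak to how quickly one signal follows the other, the two quantities the abstract compares across viewing conditions.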