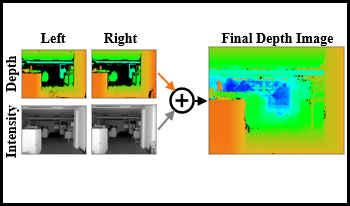

Solid-state lidar cameras produce 3D images, useful in applications such as robotics and self-driving vehicles. However, range is limited by the lidar laser power, and features such as perpendicular surfaces and dark objects pose difficulties. We propose the use of intensity images, inherent in lidar camera data from the total laser and ambient light collected in each pixel, to extract additional depth information and boost ranging performance. Using a pair of off-the-shelf lidar cameras and a conventional stereo depth algorithm to process the intensity images, we demonstrate an increase in the native lidar maximum depth range of 2× in an indoor environment and almost 10× outdoors. Depth information is also extracted from features in the environment, such as dark objects, floors and ceilings, which are otherwise not detected by the lidar sensor. While the specific technique presented is useful in applications involving multiple lidar cameras, the principle of extracting depth data from lidar camera intensity images could also be extended to standalone lidar cameras using monocular depth techniques.
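
As a rough illustration of how a stereo pair of intensity images yields depth, the sketch below applies the standard rectified-stereo triangulation relation Z = f · B / d. It is not taken from the paper: the function name and the focal length, baseline, and disparity values are hypothetical, and the disparity is assumed to come from some conventional stereo matcher run on the two intensity images.

```python
import math


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    All parameters are illustrative assumptions, not values from the paper.
    """
    if disparity_px <= 0:
        # No valid match, or a point effectively at infinity.
        return math.inf
    return focal_length_px * baseline_m / disparity_px


# Hypothetical setup: 500 px focal length, cameras mounted 0.1 m apart.
near = depth_from_disparity(10.0, 500.0, 0.1)  # large disparity, close object
far = depth_from_disparity(1.0, 500.0, 0.1)    # small disparity, distant object
print(near, far)
```

Because depth is inversely proportional to disparity, the usable range of such a setup depends on the baseline and on how small a disparity the matcher can resolve, rather than on the laser power.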

A range image of a scene is produced with a solid-state time-of-flight system that uses active illumination and a time-gated single-photon avalanche diode (SPAD) array. The distance from the imager to a target is measured by delaying the time gate in small steps and counting the photons detected by each pixel at each delay step in successive measurements. To achieve a high frame rate, the number of delay steps needed is minimized by limiting the depth scan to the range of interest. To be able to measure scenes with objects at different ranges, the array has been divided into groups of pixels with independently controlled time gating. This paper demonstrates an algorithm that can be used to control the time gating of the pixel groups in the sensor array to achieve depth maps of the scene with the time-gated SPAD array in real time and at a 70 Hz frame rate.
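
The gate-scanning measurement described above can be sketched for a single pixel as follows. This is a simplified illustration, not the paper's control algorithm: it assumes the target return appears as a peak in the photon counts across delay steps, and the function name, start delay, and step size are hypothetical. The delay is converted to distance with the round-trip relation d = c · t / 2.

```python
C = 299_792_458.0  # speed of light in m/s


def distance_from_gate_counts(counts, start_delay_s, step_s):
    """Estimate target distance from photon counts per gate-delay step.

    counts[i] is the photon count measured with the gate delayed by
    start_delay_s + i * step_s. Scanning only a limited window of delays
    (a small len(counts)) corresponds to restricting the depth scan to a
    range of interest. Names and values are illustrative assumptions.
    """
    if not counts:
        raise ValueError("counts must contain at least one delay step")
    # Assumption: the delay step with the most photons marks the return.
    peak_step = max(range(len(counts)), key=counts.__getitem__)
    delay_s = start_delay_s + peak_step * step_s
    # Light travels to the target and back, hence the factor of 2.
    return C * delay_s / 2.0


# Hypothetical scan: four 1 ns steps starting at a 20 ns gate delay.
d = distance_from_gate_counts([2, 3, 40, 5], 20e-9, 1e-9)
print(f"{d:.2f} m")
```

In this picture, a group of pixels with its own gate control would simply use its own `start_delay_s`, so that each group scans only the delays around the objects it sees.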