In eye-tracking-based 3D displays, system latency caused by eye tracking and 3D rendering produces an error between the actual eye position and the tracked position, and this error grows in proportion to the viewer's speed of movement. The discrepancy causes viewers to see 3D content from a non-optimal position, which increases 3D crosstalk and degrades the quality of 3D images under dynamic viewing conditions. In this paper, we investigate the latency issue, identify each source of system latency, and study the display margin of an eye-tracking-based 3D display. To reduce 3D crosstalk during viewer motion, we propose a motion compensation method that predicts the viewer's eye position. We validate the method experimentally on a previously implemented 3D display prototype. The prediction error decreased to 24.6% of its uncompensated value, a roughly fourfold improvement in the accuracy of the estimated pupil position, and crosstalk was reduced to a level similar to that of a system with one quarter of the latency.
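
The error described above scales with viewer speed: an eye moving at velocity v is displaced by roughly v times the latency before the display updates. As a minimal sketch of latency compensation, assuming a constant-velocity model fitted by least squares to recent tracker samples (the abstract does not specify the authors' predictor), the eye position can be extrapolated forward by the known latency. The function name `predict_eye_position` and the sample rate and latency values are hypothetical.

```python
import numpy as np


def predict_eye_position(timestamps, positions, latency):
    """Extrapolate the eye position `latency` seconds past the last sample.

    Hypothetical constant-velocity predictor (not the paper's method):
    fits p(t) = a + v * t per coordinate by least squares.
    timestamps: shape (n,) in seconds; positions: shape (n, d), e.g. in mm.
    """
    t = np.asarray(timestamps, dtype=float)
    p = np.asarray(positions, dtype=float)
    # Design matrix [1, t] so that A @ [a, v] approximates p.
    A = np.stack([np.ones_like(t), t], axis=1)       # shape (n, 2)
    coef, *_ = np.linalg.lstsq(A, p, rcond=None)      # shape (2, d)
    offset, velocity = coef[0], coef[1]
    # Evaluate the fitted line at the time the frame will actually be shown.
    return offset + velocity * (t[-1] + latency)


# Five samples at 60 Hz of an eye moving at 200 mm/s along x.
t = np.arange(5) / 60.0
pos = np.stack([200.0 * t, np.zeros_like(t)], axis=1)
print(predict_eye_position(t, pos, latency=0.05))
```

With an assumed 50 ms latency, the uncompensated error in this example is 200 mm/s × 0.05 s = 10 mm, which the extrapolation removes for purely linear motion. Real head motion accelerates, so a practical predictor (for example a Kalman filter) would need more than this linear fit.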

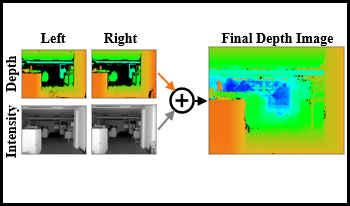

Solid-state lidar cameras produce 3D images that are useful in applications such as robotics and self-driving vehicles. However, their range is limited by the lidar laser power, and features such as perpendicular surfaces and dark objects pose difficulties. We propose using intensity images, which are inherent in lidar camera data and record the total laser and ambient light collected in each pixel, to extract additional depth information and boost ranging performance. Using a pair of off-the-shelf lidar cameras and a conventional stereo depth algorithm to process the intensity images, we demonstrate a 2× increase in the native lidar maximum depth range in an indoor environment and an almost 10× increase outdoors. Depth information is also extracted from features in the environment, such as dark objects, floors and ceilings, which are otherwise not detected by the lidar sensor. While the specific technique presented is useful in applications involving multiple lidar cameras, the principle of extracting depth data from lidar camera intensity images could also be extended to standalone lidar cameras using monocular depth techniques.
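
The abstract does not name the stereo algorithm used. As an illustrative stand-in, the sketch below runs brute-force sum-of-absolute-differences (SAD) block matching on a rectified pair of intensity images and converts disparity d to depth with Z = fB/d, where f is the focal length in pixels and B is the baseline. The function names, window radius, focal length and baseline are hypothetical; a practical system would use an optimized matcher such as semi-global matching.

```python
import numpy as np


def block_match(left, right, max_disp, radius=2):
    """Brute-force SAD block matching on rectified grayscale images.

    A left-view pixel at column x is compared with right-view column x - d.
    Returns an integer disparity map (0 where the window does not fit).
    """
    h, w = left.shape
    L = left.astype(float)
    R = right.astype(float)
    disp = np.zeros((h, w), dtype=int)
    for y in range(radius, h - radius):
        for x in range(radius + max_disp, w - radius):
            patch = L[y - radius:y + radius + 1, x - radius:x + radius + 1]
            costs = [
                np.abs(patch - R[y - radius:y + radius + 1,
                                 x - d - radius:x - d + radius + 1]).sum()
                for d in range(max_disp + 1)
            ]
            disp[y, x] = int(np.argmin(costs))  # lowest SAD wins
    return disp


def disparity_to_depth(disp, focal_px, baseline_m):
    """Z = f * B / d; pixels with zero disparity map to infinity."""
    disp = np.asarray(disp, dtype=float)
    with np.errstate(divide="ignore"):
        return np.where(disp > 0, focal_px * baseline_m / disp, np.inf)


# Synthetic textured pair: the right view is the left view shifted by 3 px.
rng = np.random.default_rng(0)
left = rng.random((20, 30))
right = np.roll(left, -3, axis=1)
disp = block_match(left, right, max_disp=5)
print(disparity_to_depth(disp[10, 10:13], focal_px=600.0, baseline_m=0.1))
```

The inverse relation Z = fB/d also shows why range is the hard part: distant surfaces produce small disparities, so a one-pixel matching error matters far more at long range than up close.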

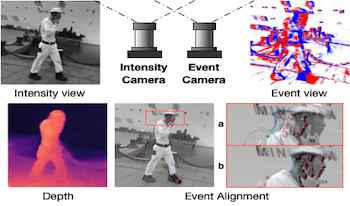

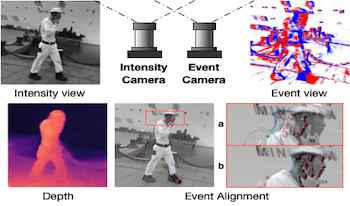

Event cameras are novel bio-inspired vision sensors that output pixel-level intensity changes with microsecond accuracy, high dynamic range and low power consumption. Despite these advantages, event cameras cannot be directly applied to computational imaging tasks because they cannot capture high-quality intensity images and events simultaneously. This paper aims to connect a standalone event camera and a modern intensity camera so that applications can take advantage of both sensors. We establish this connection through a multi-modal stereo matching task. We first convert events to a reconstructed image and extend existing stereo networks to this multi-modal setting. We propose a self-supervised method to train the multi-modal stereo network without ground-truth disparity data. A structure loss computed on image gradients enables self-supervised learning on such multi-modal data. Exploiting the internal stereo constraint between views of different modalities, we introduce general stereo loss functions, including a disparity cross-consistency loss and an internal disparity loss, which improve performance and robustness compared to existing approaches. Our experiments on both synthetic and real datasets demonstrate the effectiveness of the proposed method, especially the proposed general stereo loss functions. Finally, we shed light on employing the aligned events and intensity images in downstream tasks such as video interpolation.
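
The abstract does not define its disparity cross-consistency loss. A common form of cross-view consistency in self-supervised stereo is the left-right check: the disparity predicted for the left view, d_L(x), should agree with the right-view disparity sampled at the matching pixel, d_R(x - d_L(x)). The NumPy sketch below computes a symmetric L1 version of that penalty. It is a generic stand-in, not the authors' formulation, and the function names are hypothetical; in training it would be written in a differentiable framework.

```python
import numpy as np


def warp_horizontal(src, disp, direction):
    """Sample each row of `src` at x + direction * disp[y, x].

    Uses linear interpolation; coordinates are clamped to the image width.
    """
    h, w = src.shape
    xs = np.arange(w, dtype=float)
    out = np.empty_like(src, dtype=float)
    for y in range(h):
        coords = np.clip(xs + direction * disp[y], 0, w - 1)
        out[y] = np.interp(coords, xs, src[y])
    return out


def lr_consistency_loss(disp_left, disp_right):
    """Symmetric L1 left-right consistency (generic, not the paper's loss).

    Left pixel x matches right pixel x - d_L(x);
    right pixel x matches left pixel x + d_R(x).
    """
    right_in_left = warp_horizontal(disp_right, disp_left, -1.0)
    left_in_right = warp_horizontal(disp_left, disp_right, +1.0)
    return (np.abs(disp_left - right_in_left).mean()
            + np.abs(disp_right - left_in_right).mean())


d_left = np.full((4, 10), 2.0)
print(lr_consistency_loss(d_left, d_left.copy()))        # consistent pair
print(lr_consistency_loss(d_left, np.full((4, 10), 3.0)))  # off by 1 px
```

The appeal of this kind of term for multi-modal stereo is that it compares disparities rather than pixel intensities, so it does not require the event-reconstructed image and the intensity image to have matching appearance.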

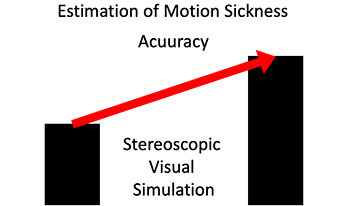

Driving automation reduces driver agency, raising concerns about motion sickness in automated vehicles. Automated driving is closely related to virtual reality technology, in which simulator sickness has been confirmed, and both forms of sickness are attributed to a similar mechanism, sensory conflict. In this study, we investigated the use of deep learning to predict motion sickness. We conducted experiments using an actual vehicle and a stereoscopic image simulation. For each experiment, we predicted the occurrence of motion sickness and compared the data from the stereoscopic simulation with those from the actual-vehicle experiment. Based on the prediction results, extending the training data with stereoscopic-simulation data improved the accuracy of predicting motion sickness in an actual vehicle. These results suggest that data from stereoscopic visual simulations can be used for deep learning.

Stereo matching algorithms estimate the dense depth of a scene by finding corresponding points in stereo images of that scene. Several factors, such as occlusion, noise and illumination inconsistencies, affect the disparity estimates and make this process challenging. Algorithms developed to overcome these challenges can be broadly categorized as learning-based and non-learning-based disparity estimation algorithms. Learning-based approaches are more accurate but computationally expensive. In contrast, non-learning-based algorithms are computationally efficient and widely used. In this paper, we propose a new stereo matching algorithm using guided image filtering (GIF)-based cost aggregation. The main contribution of our approach is a cost calculation framework that is a hybrid of cross-correlation between stereo image pairs and scene segmentation (HCS). We compared the performance of our HCS technique with state-of-the-art techniques on version 3 of the Middlebury benchmark dataset. Our results confirm the effective performance of the HCS technique.
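
The HCS cost itself (cross-correlation combined with segmentation) is not specified in the abstract, so the sketch below substitutes a truncated absolute-difference cost and shows only the surrounding GIF pipeline: build a cost volume over disparities, smooth each disparity slice with the grayscale guided filter of He et al. using the left image as the guide, then pick the lowest-cost disparity per pixel (winner-take-all). The function names and the parameters `r`, `eps` and `trunc` are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_filter(img, r):
    """Mean over a (2r+1) x (2r+1) window, edge-padded to keep the shape."""
    padded = np.pad(img, r, mode="edge")
    return sliding_window_view(padded, (2 * r + 1, 2 * r + 1)).mean(axis=(-2, -1))


def guided_filter(guide, src, r, eps):
    """Grayscale guided filter: q = mean(a) * I + mean(b)."""
    mean_I = box_filter(guide, r)
    mean_p = box_filter(src, r)
    cov_Ip = box_filter(guide * src, r) - mean_I * mean_p
    var_I = box_filter(guide * guide, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)   # local linear coefficient
    b = mean_p - a * mean_I
    return box_filter(a, r) * guide + box_filter(b, r)


def gif_stereo(left, right, max_disp, r=4, eps=1e-4, trunc=0.1):
    """Cost volume -> guided-filter aggregation -> winner-take-all.

    Truncated absolute difference stands in for the (unspecified) HCS cost.
    """
    left = left.astype(float)
    right = right.astype(float)
    h, w = left.shape
    # Columns x < d have no match in the right view; give them the max cost.
    volume = np.full((max_disp + 1, h, w), trunc)
    for d in range(max_disp + 1):
        diff = np.abs(left[:, d:] - right[:, : w - d])
        volume[d, :, d:] = np.minimum(diff, trunc)
        volume[d] = guided_filter(left, volume[d], r, eps)
    return volume.argmin(axis=0)


rng = np.random.default_rng(1)
left = rng.random((24, 40))
right = np.roll(left, -3, axis=1)   # true disparity of 3 px
disp = gif_stereo(left, right, max_disp=6)
print(np.bincount(disp[:, 12:].ravel()))
```

Because the guided filter is edge-aware, aggregated costs follow intensity edges in the guide image rather than blurring across them, which is the usual motivation for GIF-based aggregation over a plain box window.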