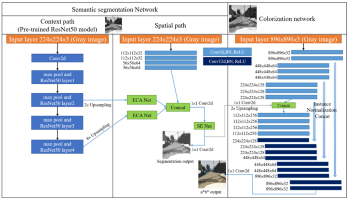

A fully automated colorization model that integrates image segmentation features to enhance both the accuracy and diversity of colorization is proposed. The model employs a multipath architecture in which each path addresses a specific objective in processing the grayscale input image. The context path uses a pretrained ResNet50 model to identify object classes, while the spatial path determines the locations of these objects. ResNet50 is a 50-layer deep convolutional neural network (CNN) that uses skip connections to address the challenges of training deep models; it is widely applied in image classification and feature extraction. The outputs of both paths are fused and fed into the colorization network to represent image structures precisely and to prevent color spillover across object boundaries. The colorization network is designed to handle high-resolution inputs, enabling accurate colorization of small objects and enhancing overall color diversity. The proposed model performs robustly even when trained on small datasets. Comparative evaluations against CNN-based and diffusion-based colorization approaches show that the proposed model significantly improves colorization quality.

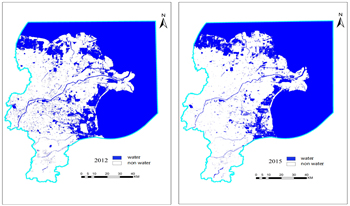

Research on the feature extraction of braided rivers and on river-scale water system evolution patterns over long time series in China remains relatively scarce. Continuous monitoring of surface water in the Yellow River Delta region, together with analysis of its evolution, therefore has great application value for supplementing and improving related knowledge and for achieving sustainable water resource management. Satellite remote sensing imagery is an important source of surface water change data. Because free, high-quality, high-resolution images covering long time series are difficult to obtain, this paper uses the Landsat series of image data, which offers a longer time span and better consistency, as its data source. In recent years, deep learning models have gradually been applied to the task of extracting surface water bodies from remote sensing images. However, deep learning methods often have difficulty capturing the fine contours of water bodies and extract small water bodies poorly. To address these problems, this study proposes an Efficient Local Strip Convolutional Attention model for water system extraction and evolution analysis in the Yellow River Delta region. The experimental results show that, compared with the water body index methods MNDWI and AWEIsh, the machine learning method SVM, and the semantic segmentation models U-Net, SR-SegNet v2, and FWENet, the proposed model not only achieves the best overall accuracy but also produces smoother water body boundaries and more complete extraction of small and medium-sized rivers.
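For context on the index-based baselines named above, MNDWI is a per-pixel band ratio, (Green - SWIR) / (Green + SWIR), whose positive values typically indicate water. The following is a minimal sketch of that baseline only, not of the proposed attention model. The function names, the toy reflectance values, and the threshold of 0 are illustrative assumptions; in practice the threshold is usually tuned per scene.

```python
def mndwi(green, swir):
    """Modified Normalized Difference Water Index for a single pixel."""
    denom = green + swir
    if denom == 0:
        return 0.0  # assumption: treat empty pixels as neutral (non-water)
    return (green - swir) / denom


def water_mask(green_band, swir_band, threshold=0.0):
    """Label a pixel as water when its MNDWI exceeds the threshold.

    The default threshold of 0 is an assumption made for illustration.
    """
    return [
        [mndwi(g, s) > threshold for g, s in zip(green_row, swir_row)]
        for green_row, swir_row in zip(green_band, swir_band)
    ]


# Hypothetical 2x2 surface reflectance values (not taken from the paper).
green = [[0.10, 0.08], [0.06, 0.12]]
swir = [[0.02, 0.20], [0.25, 0.03]]
mask = water_mask(green, swir)
```

Because an index method classifies each pixel independently of its neighbours, it is a useful reference point for the boundary smoothness and small-river completeness that the abstract reports for the learned model.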

Recent advances in convolutional neural networks and vision transformers have brought about a revolution in computer vision. Studies have shown that the performance of deep learning-based models is sensitive to image quality. The human visual system is trained to infer semantic information from poor-quality images, but deep learning algorithms may find this task challenging. In this paper, we study the effect of image quality and color parameters on deep learning models trained for semantic segmentation. One of the major challenges in benchmarking robust deep learning-based computer vision models is the lack of challenging data covering different quality and color parameters. We therefore generated data from a subset of the standard semantic segmentation benchmark dataset ADE20K, with the goal of studying the effect of different quality and color parameters on the semantic segmentation task. To the best of our knowledge, this is one of the first attempts to benchmark semantic segmentation algorithms under different color and quality parameters, and this study will motivate further research in this direction.
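The abstract does not state which parameters were varied or how the data were generated, so the following is only a hypothetical sketch of how color-perturbed variants of an image might be produced for such a benchmark. The parameter names (`brightness`, `saturation`, `hue_shift`), their values, and the toy image are all assumptions; only Python's standard `colorsys` module is used.

```python
import colorsys


def adjust_pixel(rgb, brightness=1.0, saturation=1.0, hue_shift=0.0):
    """Scale brightness and saturation and shift hue of one 8-bit RGB pixel."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + hue_shift) % 1.0                  # hue wraps around the color wheel
    s = min(1.0, max(0.0, s * saturation))     # clamp to the valid range
    v = min(1.0, max(0.0, v * brightness))
    r, g, b = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(c * 255) for c in (r, g, b))


def adjust_image(image, **params):
    """Apply the same adjustment to every pixel of a nested-list image."""
    return [[adjust_pixel(px, **params) for px in row] for row in image]


# Hypothetical 1x2 image; the segmentation labels stay unchanged because
# only pixel colors are altered, not the image geometry.
image = [[(200, 50, 50), (50, 200, 50)]]
variants = {
    "original": adjust_image(image),
    "dark": adjust_image(image, brightness=0.5),
    "gray": adjust_image(image, saturation=0.0),
}
```

Each variant can then be passed through the same trained segmentation model, with the resulting scores compared against those on the unmodified image.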

Our central goal was to create automatic methods for semantic segmentation of human figures in images of fine art paintings. This is a difficult problem because the visual properties and statistics of artwork differ markedly from the natural photographs widely used in research in automatic segmentation. We used a deep neural network to transfer artistic style from paintings across several centuries to modern natural photographs in order to create a large data set of surrogate art images. We then used this data set to train a separate deep network for semantic image segmentation of genuine art images. Such data augmentation led to great improvement in the segmentation of difficult genuine artworks, revealed both qualitatively and quantitatively. Our unique technique of creating surrogate artworks should find wide use in many tasks in the growing field of computational analysis of fine art.

Safe and comfortable train travel is only possible on tracks that are in the correct geometric position. For this reason, track tamping machines are used worldwide to carry out this important track maintenance task. Turnout tamping refers to a complex procedure for improving and stabilizing the track situation in turnouts, which is usually carried out by experienced operators. This application paper describes the current state of development of a 3D laser line scanner-based sensor system for a new turnout-tamping assistance system, which is able to support and relieve the operator in complex tamping areas. A central task in this context is digital image processing, which performs semantic segmentation (based on deep learning algorithms) on the 3D scanner data in order to detect essential and critical rail areas fully automatically.