Modern production and distribution workflows have allowed high dynamic range (HDR) imagery to become widespread. This has had a positive impact on the creative industry and improved image quality on consumer devices. As with the dynamics of loudness in audio, it is predicted that the increased luminance range allowed by HDR ecosystems could introduce unintended, high-magnitude changes. These luminance changes could occur at program transitions, advertisement insertions, and channel change operations. In this article, we present findings from a psychophysical experiment conducted to evaluate three components of HDR luminance changes: the magnitude of the change, the direction of the change (darker or brighter), and the adaptation time. Results confirm that all three components exert significant influence. We find that increasing either the magnitude of the luminance change or the adaptation time results in more discomfort at the unintended transition. We find that transitioning from brighter to darker stimuli has a non-linear relationship with adaptation time, falling off steeply with very short durations.

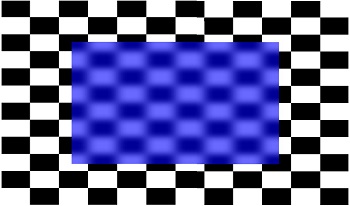

Translucency is an appearance attribute that primarily results from subsurface scattering of light. The visual perception of translucency has gained attention in the past two decades. However, the studies mostly address thick and complex 3D objects that completely occlude the background. On the other hand, the perception of transparency of flat and thin see-through filters has been studied more extensively. Despite this, perception of translucency in see-through filters that do not completely occlude the background remains understudied. In this work, we manipulated the sharpness and contrast of black-and-white checkerboard patterns to simulate the impression of see-through filters. Afterward, we conducted paired-comparison psychophysical experiments to measure how the amount of background blur and contrast relates to perceived translucency. We found that while both blur and contrast affect translucency, the relationship is neither monotonic nor straightforward.
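
The abstract does not say how the paired-comparison responses were analyzed. As an illustration only, the sketch below shows one common way to turn such responses into an interval scale, Thurstone Case V scaling. The function name `thurstone_case_v`, the `wins` matrix layout, and the example counts are hypothetical and are not taken from the study.

```python
from statistics import NormalDist


def thurstone_case_v(wins):
    """Estimate scale values from paired-comparison counts (Thurstone Case V).

    wins[i][j] is the number of times stimulus i was judged "more translucent"
    than stimulus j. Returns one scale value per stimulus (mean is zero).
    """
    n = len(wins)
    inv_cdf = NormalDist().inv_cdf  # standard normal quantile function
    scale = []
    for i in range(n):
        z_sum = 0.0
        for j in range(n):
            if i == j:
                continue
            total = wins[i][j] + wins[j][i]
            # Add half a count to each side so the proportion never reaches
            # exactly 0 or 1, where the quantile would be infinite.
            p = (wins[i][j] + 0.5) / (total + 1.0)
            z_sum += inv_cdf(p)
        # Case V: the scale value is the mean z-score over all n stimuli
        # (the self-comparison contributes z = 0).
        scale.append(z_sum / n)
    return scale


# Hypothetical counts for three blur/contrast conditions, 10 trials per pair.
example_wins = [
    [0, 8, 9],
    [2, 0, 7],
    [1, 3, 0],
]
scale_values = thurstone_case_v(example_wins)
```

In this made-up example the first condition receives the highest scale value and the third the lowest. A non-monotonic relationship, as reported above, would show up as scale values that do not rise or fall steadily with the amount of blur or contrast.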

The visual mechanisms behind our ability to distinguish translucent and opaque materials are not fully understood. Disentanglement of the contributions of surface reflectance and subsurface light transport to the still image structure is an ill-posed problem. While the overwhelming majority of the works addressing translucency perception use static stimuli, behavioral studies show that human observers tend to move objects to assess their translucency. Therefore, we hypothesize that translucent objects appear more translucent and less opaque when observed in motion than when shown as still images. In this manuscript, we report two psychophysical experiments that we conducted using static and dynamic visual stimuli to investigate how motion affects perceived translucency.

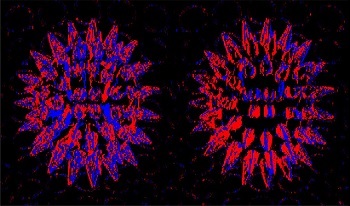

Gloss perception is a complex psychovisual phenomenon whose mechanisms are not yet fully explained. Instrumentally measured surface reflectance is usually a poor predictor of human perception of gloss. State-of-the-art studies demonstrate that, in addition to surface reflectance, an object's shape and the illumination geometry also affect the magnitude of gloss perceived by the human visual system (HVS). Recent studies attribute this to image cues: specific regularities in image statistics that are generated by a combination of these physical properties and that, in turn, are proposed to be used by the HVS for assessing gloss. Another study has recently demonstrated that subsurface scattering of light is an additional factor that can play a role in perceived gloss, but the study provides limited explanation of this phenomenon. In this work, we aimed to shed more light on this observation and explain why translucency impacts perceived gloss, and why this impact varies among shapes. We conducted four psychophysical experiments to explore whether image cues typical for opaque objects also explain the variation of perceived gloss in translucent objects and to quantify how these cues are modulated by the subsurface scattering properties. We found that perceived contrast, coverage area, and sharpness of the highlights can be combined to reliably predict perceived gloss. While sharpness is the most significant cue for assessing glossiness of spherical objects, coverage is more important for a complex Lucy shape. Both of these observations suggest an explanation for why subsurface scattering albedo impacts perceived gloss.

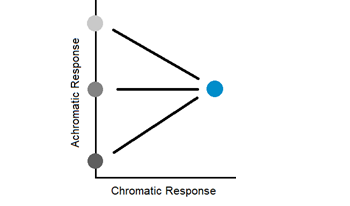

Luminance underestimates the brightness of chromatic visual stimuli. This phenomenon, known as the Helmholtz-Kohlrausch effect, is due to the different experimental methods—heterochromatic flicker photometry (luminance) and direct brightness matching (brightness)—from which these measures are derived. This paper probes the relationship between luminance and brightness through a psychophysical experiment that uses slowly oscillating visual stimuli and compares the results of such an experiment to the results of flicker photometry and direct brightness matching. The results show that the dimension of our internal color space corresponding with our achromatic response to stimuli is not a scale of brightness or lightness.

Vision is a component of a perceptual system whose function is to support purposeful behavior. In this project we studied the perceptual system that supports the visual perception of surface properties through manipulation. Observers were tasked with finding dents in simulated flat glossy surfaces. The surfaces were presented on a tangible display system implemented on an Apple iPad that rendered the surfaces in real time and allowed observers to directly interact with them by tilting and rotating the device. On each trial we recorded the angular deviations indicated by the device's accelerometer and the images seen by the observer. The data reveal purposeful patterns of manipulation that serve the task by producing images that highlight the dent features. These investigations suggest the presence of an active visuo-motor perceptual system involved in the perception of surface properties, and provide a novel method for its study using tangible display systems.

White balance is one of the key processes in a camera pipeline. Accuracy can be challenging when a scene is illuminated by multiple colored light sources. We designed and built a studio consisting of multiple controllable LED light sources that produced a range of correlated color temperatures (CCTs) with high color fidelity and were used to illuminate test scenes. A two-alternative forced choice (2AFC) experiment was performed to evaluate the white balance appearance preference for images containing a model in the foreground and target objects in the background of an indoor scene. The foreground and background were lit by different combinations of cool to warm sources. The observers were asked to pick the image that was most aesthetically appealing to them. The results show that when the background is warm, skin tones dominate observers' decisions, and when the background is cool, the preference shifts to scenes with the same foreground and background CCT. The familiarity or unfamiliarity of objects in the background scene did not show a significant effect.

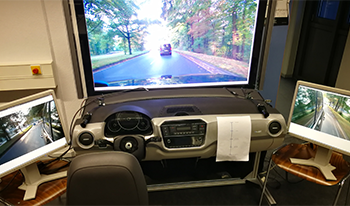

Aliasing effects due to time-discrete capturing of amplitude-modulated light with a digital image sensor are perceived as flicker by humans. These artifacts are especially annoying in digital mirror replacement systems, where they can also pose a risk. Therefore, ISO 16505 requires flicker-free reproduction for 90% of people in these systems. Various psychophysical studies investigate the influence of large-area flickering of displays, environmental light, or flickering in television applications on perception and concentration. However, no detailed knowledge exists so far of the subjective annoyance or irritation caused by flicker from camera-monitor systems used as mirror replacements in vehicles, even though the number of these systems is constantly increasing. This psychophysical study used a novel data set from real-world driving scenes and synthetic simulation with synthetic flicker. More than 25 participants were asked to quantify the subjective annoyance level of different flicker frequencies, amplitudes, mean values, sizes, and positions. The results show that for digital mirror replacement systems, human subjective annoyance due to flicker is greatest in the 15 Hz range with increasing amplitude and magnitude. Additionally, the sensitivity to flicker artifacts increases with the duration of observation.
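
The aliasing mechanism described above can be illustrated with a short calculation. The sketch below folds a light modulation frequency into the band a camera can represent at a given frame rate, using the standard sampling relationship. It ignores exposure time, which attenuates the visible amplitude in a real sensor, and the function name and example frequencies are assumptions chosen for illustration, not values from the study.

```python
def alias_frequency(light_hz, frame_rate_hz):
    """Apparent flicker frequency when a periodic light is sampled by a camera.

    light_hz:      modulation frequency of the light source (e.g. a PWM LED)
    frame_rate_hz: camera frame rate
    Returns the aliased frequency in Hz, between 0 and frame_rate_hz / 2.
    """
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    remainder = light_hz % frame_rate_hz
    # Fold the remainder back into the range [0, frame_rate / 2].
    return min(remainder, frame_rate_hz - remainder)


# Hypothetical example: a 105 Hz modulated light captured at 60 frames/s.
apparent = alias_frequency(105, 60)  # 105 % 60 = 45, folded to 60 - 45 = 15
```

Under these assumed numbers the light would appear to flicker at 15 Hz on the monitor, which is the range the study reports as most annoying. A light modulated at an exact multiple of the frame rate, such as 120 Hz at 60 frames/s, folds to 0 Hz and shows no flicker in this idealized model.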

Modern virtual reality (VR) headsets use lenses that distort the visual field, typically with distortion increasing with eccentricity. While content is pre-warped to counter this radial distortion, residual image distortions remain. Here we examine the extent to which such residual distortion impacts the perception of surface slant. In Experiment 1, we presented slanted surfaces in a head-mounted display and observers estimated the local surface slant at different locations. In Experiments 2 (slant estimation) and 3 (slant discrimination), we presented stimuli on a mirror stereoscope, which allowed us to more precisely control viewing and distortion parameters. Taken together, our results show that radial distortion has a significant impact on perceived surface attitude, even following correction. Of the distortion levels we tested, 5% distortion results in significantly underestimated and less precise slant estimates relative to distortion-free surfaces. In contrast, Experiment 3 reveals that a level of 1% distortion is insufficient to produce significant changes in slant perception. Our results highlight the importance of adequately modeling and correcting lens distortion to improve VR user experience.
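
The abstract does not specify the distortion model or how the percentage levels are defined. As a rough sketch, the code below assumes a one-coefficient polynomial radial model, r' = r(1 + k1 r^2), and assumes that "5% distortion" means a point at a reference radius is displaced radially by 5% of that radius. Both the model and the helper names are illustrative assumptions.

```python
def k1_for_distortion(percent, r_ref=1.0):
    """Coefficient k1 giving `percent` % radial displacement at radius r_ref.

    Assumes the model r' = r * (1 + k1 * r**2), so the relative displacement
    at radius r is k1 * r**2.
    """
    return (percent / 100.0) / (r_ref ** 2)


def distort(x, y, k1):
    """Apply the assumed radial model to a point in normalized image coordinates."""
    r_squared = x * x + y * y
    scale = 1.0 + k1 * r_squared
    return x * scale, y * scale


# Hypothetical comparison of the two levels mentioned in the abstract.
k1_five = k1_for_distortion(5.0)  # 0.05 under the assumed definition
k1_one = k1_for_distortion(1.0)   # 0.01 under the assumed definition
edge_point = distort(1.0, 0.0, k1_five)
mid_point = distort(0.5, 0.0, k1_five)
```

Under this model the displacement grows with the cube of the radius, so a point halfway to the reference radius moves far less than one at the edge. This matches the description of distortion increasing with eccentricity, though the actual lens profiles used in the experiments may differ.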