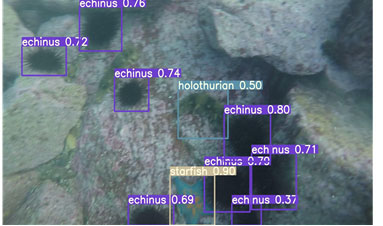

This paper proposes an underwater object detection algorithm based on lightweight structure optimization. It addresses the low detection accuracy and difficult deployment that low light, blurriness, and low contrast cause in dynamic inspection by underwater robots. The algorithm builds on YOLOv7 by incorporating the attention mechanism of the convolutional module into the backbone network, which enhances feature extraction in low-light and blurred environments. The feature fusion enhancement module is also optimized to control the shortest and longest gradient paths used for fusion, improving feature fusion while reducing network complexity and size. Finally, the output module of the network is optimized to improve convergence speed and detection accuracy for blurred underwater objects. Experiments on real low-light underwater images show that the optimized network improves object detection accuracy (mAP) by 11.7%, the detection rate by 2.9%, and the recall rate by 15.7%. It also reduces the model size by 20.2 MB, a compression ratio of 27%, making it more suitable for deployment in underwater robot applications.
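
The size figures above can be cross-checked with a little arithmetic. The sketch below assumes that the 27% compression ratio means the 20.2 MB reduction divided by the original model size; the abstract does not state this definition, so the implied sizes are an inference rather than reported values. The function name `sizes_from_reduction` is hypothetical.

```python
def sizes_from_reduction(reduction_mb, compression_ratio):
    """Infer original and optimized model sizes in MB.

    Assumption (not stated in the abstract):
    compression_ratio = reduction_mb / original_mb.
    """
    original_mb = reduction_mb / compression_ratio
    optimized_mb = original_mb - reduction_mb
    return original_mb, optimized_mb


# Figures taken from the abstract: 20.2 MB reduction, 27% compression ratio.
original_mb, optimized_mb = sizes_from_reduction(20.2, 0.27)
print(f"implied original size:  {original_mb:.1f} MB")
print(f"implied optimized size: {optimized_mb:.1f} MB")
```

Under that assumption the original model would be roughly 75 MB and the optimized one roughly 55 MB.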

Detecting changes in an uncontrolled environment using cameras mounted on a ground vehicle is critical for the detection of roadside Improvised Explosive Devices (IEDs). Hidden IEDs are often accompanied by visible markers, whose appearances are a priori unknown. Little work has been published on detecting unknown objects using deep learning. This article shows the feasibility of applying convolutional neural networks (CNNs) to predict the location of markers in real time by comparing the live view with an earlier reference recording. The authors investigate novel encoder–decoder Siamese CNN architectures and introduce a modified double-margin contrastive loss function to achieve pixel-level change detection. Their dataset consists of seven pairs of challenging real-world recordings, and they investigate augmentation with artificial object data. The proposed network architecture can compare two images of 1920 × 1440 pixels in 27 ms on an RTX Titan GPU and significantly outperforms state-of-the-art networks and algorithms on their dataset, improving the F1 score by 0.28.
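
To make the loss concrete, here is a minimal sketch of a generic pixel-level double-margin contrastive loss in plain Python. It is not the authors' modified version, whose details the abstract does not give. It assumes the two Siamese branches produce feature maps shaped `[C][H][W]`, that unchanged pixels are penalized only when their feature distance exceeds a small margin, and that changed pixels are penalized only when their distance falls below a larger margin. The function names and the margin values `m_same` and `m_diff` are hypothetical.

```python
import math


def pixel_distances(feat_a, feat_b):
    """Per-pixel Euclidean distance between two feature maps shaped [C][H][W]."""
    channels = len(feat_a)
    height = len(feat_a[0])
    width = len(feat_a[0][0])
    return [
        [
            math.sqrt(
                sum((feat_a[c][y][x] - feat_b[c][y][x]) ** 2 for c in range(channels))
            )
            for x in range(width)
        ]
        for y in range(height)
    ]


def double_margin_contrastive_loss(dist, changed, m_same=0.5, m_diff=2.0):
    """Mean double-margin contrastive loss over all pixels.

    dist:    [H][W] feature distances between the two images
    changed: [H][W] ground-truth labels, truthy where the scene changed
    m_same, m_diff: illustrative margins (assumed values, not from the paper)
    """
    total = 0.0
    count = 0
    for dist_row, label_row in zip(dist, changed):
        for d, is_changed in zip(dist_row, label_row):
            if is_changed:
                # Changed pixels should be at least m_diff apart.
                total += max(m_diff - d, 0.0) ** 2
            else:
                # Unchanged pixels may differ by up to m_same at no cost.
                total += max(d - m_same, 0.0) ** 2
            count += 1
    return total / count


# Tiny example: one channel, one row, two pixels.
feat_ref = [[[0.0, 3.0]]]
feat_live = [[[0.0, 0.0]]]
dist = pixel_distances(feat_ref, feat_live)
loss_correct = double_margin_contrastive_loss(dist, [[0, 1]])
loss_wrong = double_margin_contrastive_loss(dist, [[1, 0]])
```

The second margin gives unchanged pixels some slack, which matters when the two recordings differ in lighting or alignment. In a training setup the same expression would be written with a tensor library so that gradients flow back into both branches.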