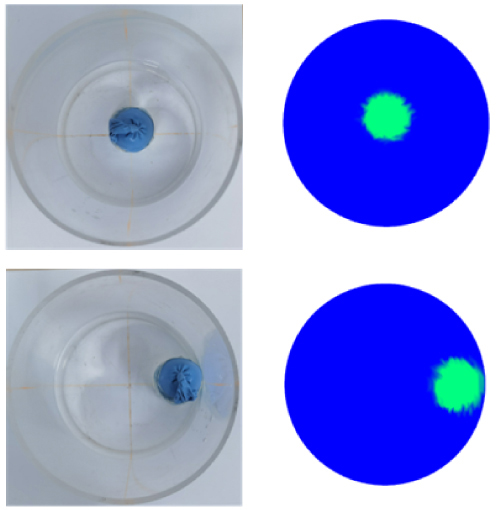

Magnetic induction tomography (MIT) is an emerging imaging technology that holds significant promise for cerebral hemorrhage monitoring. The imaging method most commonly used in MIT is time-difference imaging. However, this approach relies on magnetic field signals recorded before the hemorrhage, which are often difficult to obtain. This study supplements single-frequency information with bioelectrical impedance information at multiple frequencies, and the signals collected at different frequencies are identified to obtain the magnetic field signal generated by a single layer of heterogeneous tissue. A Stacked Autoencoder (SAE) neural network is used to reconstruct images of the multi-layer tissues of the head. Both numerical simulations and phantom experiments are carried out. The results indicate that the relative error of the multi-frequency SAE reconstruction is only 7.82%, outperforming traditional algorithms. Moreover, at a noise level of 40 dB, the anti-interference capability of the MIT algorithm based on frequency identification and SAE is superior to that of traditional algorithms. This research explores a novel approach to the dynamic monitoring of cerebral hemorrhage and demonstrates the potential advantages of MIT in non-invasive monitoring.
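
The abstract reports a relative reconstruction error without defining it. A minimal sketch of one common definition, the L2 norm of the difference divided by the L2 norm of the reference, is given below. The definition, the function name `relative_error`, and the four-pixel example values are assumptions for illustration and are not taken from the paper.

```python
import math


def relative_error(reconstructed, reference):
    """Relative L2 error between two equally sized, flattened images."""
    if len(reconstructed) != len(reference):
        raise ValueError("images must have the same number of pixels")
    # Norm of the pixel-wise difference.
    num = math.sqrt(sum((r - t) ** 2 for r, t in zip(reconstructed, reference)))
    # Norm of the reference image.
    den = math.sqrt(sum(t ** 2 for t in reference))
    if den == 0.0:
        raise ValueError("reference image must not be all zeros")
    return num / den


# Hypothetical 4-pixel conductivity maps, for illustration only.
reference = [0.0, 1.0, 1.0, 0.0]
reconstructed = [0.0, 0.9, 1.1, 0.0]
print(f"relative error: {relative_error(reconstructed, reference):.2%}")
```

Under this definition a perfect reconstruction scores 0, and the value grows with the energy of the pixel-wise deviation relative to the energy of the reference image.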

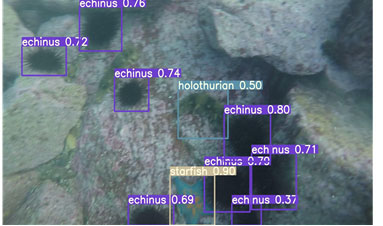

This paper proposes an underwater object detection algorithm based on lightweight structure optimization to address the low detection accuracy and deployment difficulty that low light, blurriness, and low contrast cause in dynamic inspection by underwater robots. The algorithm builds upon YOLOv7 by incorporating the attention mechanism of the convolutional module into the backbone network to enhance feature extraction in low-light and blurred environments. Furthermore, the feature fusion enhancement module is optimized to control the shortest and longest gradient paths for fusion, improving feature fusion while reducing network complexity and size. The output module of the network is also optimized to improve convergence speed and detection accuracy for blurred underwater objects. Experimental verification using real low-light underwater images demonstrates that the optimized network improves the object detection accuracy (mAP) by 11.7%, the detection rate by 2.9%, and the recall rate by 15.7%. Moreover, it reduces the model size by 20.2 MB, a compression ratio of 27%, making it more suitable for deployment in underwater robot applications.
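
The two size figures can be cross-checked with a short calculation. The sketch below assumes that "compression ratio" means the size reduction divided by the original model size; the abstract does not state this, and the function name `sizes_from_reduction` is hypothetical.

```python
def sizes_from_reduction(reduction_mb, compression_ratio):
    """Infer original and optimized model sizes in MB.

    Assumes compression_ratio = reduction / original size.
    """
    original = reduction_mb / compression_ratio
    optimized = original - reduction_mb
    return original, optimized


# Figures quoted in the abstract: 20.2 MB saved at a 27% ratio.
original_mb, optimized_mb = sizes_from_reduction(20.2, 0.27)
print(f"original ~ {original_mb:.1f} MB, optimized ~ {optimized_mb:.1f} MB")
```

Under that assumption the baseline model would be roughly 75 MB and the optimized one roughly 55 MB.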

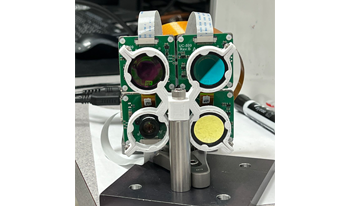

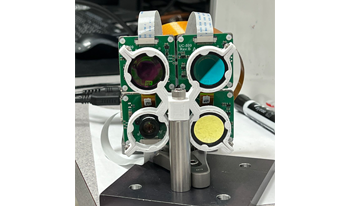

We demonstrate a physics-aware transformer for feature-based data fusion from cameras with diverse resolutions, color spaces, focal planes, focal lengths, and exposures. We also demonstrate a scalable solution for generating synthetic training data for the transformer using open-source computer graphics software. We demonstrate image synthesis on arrays with diverse spectral responses, instantaneous fields of view, and frame rates.

The automation of driving reduces driver agency, and there are concerns about motion sickness in automated vehicles. Agency in automated driving is closely related to virtual reality technology, for which a link to simulator sickness has been confirmed. Such motion sickness is thought to arise from a mechanism similar to sensory conflict. In this study, we investigated the use of deep learning for predicting motion sickness. We conducted experiments using an actual vehicle and a stereoscopic image simulation. For each experiment, we predicted the occurrence of motion sickness and compared the data from the stereoscopic simulation with those from the actual-vehicle experiment. Based on the prediction results, we were able to use the stereoscopic simulation data to extend the dataset and improve the accuracy of motion sickness prediction in an actual vehicle. These results suggest that data from stereoscopic visual simulation can be used in deep learning.

We demonstrate that a deep neural network can achieve near-perfect colour correction for the RGB signals from the sensors in a camera under a wide range of daylight illumination spectra. The network employs a fourth input signal representing the correlated colour temperature (CCT) of the illumination. The network was trained entirely on synthetic spectra and applied to a set of RGB images derived from a hyperspectral image dataset under a range of daylight illumination with CCT from 2500 K to 12500 K. It produced an invariant output image as XYZ referenced to D65, with a mean colour error of approximately 1.0 ΔE*ab.
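
The error metric quoted above, ΔE*ab, is the Euclidean distance between two colours in CIELAB space (the CIE 1976 colour difference). The sketch below converts XYZ values to CIELAB relative to a D65 white point and computes that distance. The white point values assume the 2-degree standard observer with Y normalised to 100; the function names are illustrative, and the abstract does not say how the authors implemented the metric.

```python
import math

# D65 reference white (assumed: 2-degree observer, Y normalised to 100).
D65_WHITE = (95.047, 100.0, 108.883)


def _f(t):
    """CIELAB companding function with its linear segment near zero."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert CIE XYZ to CIELAB (L*, a*, b*) relative to a white point."""
    fx, fy, fz = (_f(c / w) for c, w in zip(xyz, white))
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))


def delta_e_ab(xyz1, xyz2, white=D65_WHITE):
    """CIE 1976 colour difference between two XYZ colours."""
    return math.dist(xyz_to_lab(xyz1, white), xyz_to_lab(xyz2, white))


# Hypothetical corrected and target colours, for illustration only.
print(delta_e_ab((41.0, 43.0, 46.0), (41.2, 43.1, 45.7)))
```

A difference of about 1.0 ΔE*ab is conventionally regarded as close to the threshold of a just-noticeable difference, which gives a sense of scale for the reported mean error.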

Many archival photos are unique, existing only in a single copy. Some of them have deteriorated because of improper archiving (e.g. exposure to direct sunlight, humidity, or insects) or have suffered physical damage that shows up as cracks, scratches, extraneous marks, spots, dust, and so on. This paper proposes a machine-learning-based system for detecting and removing image defects. The damage detection method consists of two main steps: the first applies morphological filtering as pre-processing, and the second uses a machine learning method to classify the pixels that produced a strong response in the pre-processing phase. The second part of the proposed method uses an adversarial convolutional neural network to reconstruct the damage detected at the previous stage. The effectiveness of the proposed method in comparison with traditional defect detection and removal methods was confirmed experimentally.
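
The abstract does not say which morphological filter is used for pre-processing. As one plausible illustration, the sketch below applies a white top-hat transform (the image minus its morphological opening) with a 3x3 square structuring element, which responds strongly to small bright defects such as dust or thin scratches. The choice of operator, the structuring element, and the function names are assumptions and do not describe the authors' method.

```python
def _filter3x3(img, op):
    """Apply op (min or max) over each pixel's 3x3 neighbourhood.

    Neighbourhoods are clipped at the image border.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = op(
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            )
    return out


def white_top_hat(img):
    """Image minus its opening (erosion followed by dilation)."""
    opened = _filter3x3(_filter3x3(img, min), max)
    return [[p - o for p, o in zip(row, row_o)]
            for row, row_o in zip(img, opened)]


# Hypothetical 5x5 patch: flat background with one bright speck.
patch = [[10] * 5 for _ in range(5)]
patch[2][2] = 50
response = white_top_hat(patch)
print(response[2][2])
```

Pixels with a large response would then be passed to the classifier as defect candidates; dark defects could be handled analogously with a black top-hat (closing minus image).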

Synthetic aperture radar (SAR) images are corrupted by a specific noise-like phenomenon called speckle, which prevents efficient processing of remote sensing data. Many denoising methods have already been proposed, including the well-known local-statistics Lee filter. Its performance with respect to different criteria depends on several factors, including image complexity: for images with complex structure (containing textured regions), filtering sometimes brings no benefit. We show that the performance of the Lee filter can be predicted before filtering begins, and that this prediction can be made faster than the filtering itself. For this purpose, we propose to apply a trained neural network that analyzes image statistics and spectral features in a limited number of scanning windows. We show that many metrics, including visual quality metrics, can be predicted for SAR images acquired by the recently launched Sentinel-1 sensor.
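
For readers unfamiliar with the Lee filter, the sketch below shows one commonly cited form of it. Each pixel is replaced by the local mean plus a gain times the pixel's deviation from that mean, where the gain is 1 - Cu²/Ci², Cu is the speckle coefficient of variation, and Ci is the local coefficient of variation of the image. The window radius, the default `sigma_v=0.5`, and the border handling are assumptions chosen for illustration; the abstract does not specify the variant or parameters the authors used.

```python
def lee_filter(img, sigma_v=0.5, radius=1):
    """Local-statistics Lee filter for multiplicative speckle.

    img     : 2D list of non-negative intensities
    sigma_v : assumed speckle coefficient of variation (Cu)
    radius  : half-width of the square window (clipped at borders)
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            mean = sum(window) / len(window)
            var = sum((p - mean) ** 2 for p in window) / len(window)
            if var == 0.0 or mean == 0.0:
                gain = 0.0  # perfectly flat window: output the mean
            else:
                ci2 = var / mean ** 2  # squared local coefficient of variation
                gain = min(1.0, max(0.0, 1.0 - sigma_v ** 2 / ci2))
            out[y][x] = mean + gain * (img[y][x] - mean)
    return out


# Hypothetical 4x4 patch with one bright scatterer, for illustration only.
patch = [[5.0] * 4 for _ in range(4)]
patch[1][1] = 9.0
filtered = lee_filter(patch)
```

In homogeneous regions the gain falls to zero and the filter averages, while in highly textured regions the gain approaches one and the pixel is left almost unchanged. This is consistent with the observation above that filtering can bring little benefit for images with complex structure.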

In this paper we introduce two new no-reference metrics and compare their performance to state-of-the-art metrics on six publicly available datasets covering a wide variety of distortions and characteristics. Our two metrics, based on neural networks, combine the following features: histogram of oriented gradients, edge detection, fast Fourier transform, CPBD, blur and contrast measurement, temporal information, freeze detection, BRISQUE, and Video BLIINDS. They perform better than Video BLIINDS and BRISQUE on the six datasets used in this study, including one made up of natural videos that have not been artificially distorted. Our metrics generalize well, achieving high performance on all six datasets.