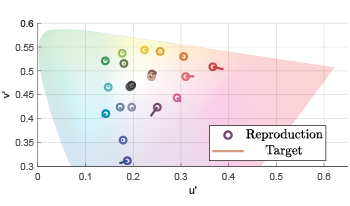

Virtual production stages with LED walls use illumination, display, and camera equipment that was not designed with this use case in mind. Because a camera's spectral sensitivity differs from that of a human observer, a device-specific calibration is required. Furthermore, the illumination spectrum emitted by the display has large gaps in the cyan and yellow wavelength ranges and differs from the light sources for which cameras are designed. As a result, the camera reproduces object colors unnaturally, which makes cinematographers hesitant to use LED walls as their primary light source. In this paper, a display calibration and camera color correction workflow for LED wall virtual production stages is proposed. A linear color correction matrix and the spectrum of a multi-channel LED fixture are jointly optimized so that objects illuminated simultaneously by the LED display and the multi-channel fixture are reproduced as they would appear under high-CRI (Color Rendering Index) light sources. An alternative color correction method based on root polynomials is found to further improve color reproduction. It is shown that the camera’s response to the display can be characterized by a linear 3×3 matrix and that the display can be calibrated using the inverse of the color correction, allowing a virtual environment to be reproduced with accurate color.
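The abstract names three building blocks: a linear 3×3 color correction matrix, a root-polynomial alternative, and display calibration through the inverse of the correction. The following is a minimal, stdlib-only sketch of those pieces under stated assumptions. It fits correction matrices by ordinary least squares from camera RGB to target tristimulus values. The degree-2 root-polynomial expansion (R, G, B, √RG, √GB, √RB) is the standard form from the root-polynomial color correction literature, not necessarily the paper's exact variant. The "true" matrix and chart are synthetic, and the joint optimization of the LED fixture spectrum is not shown. All function names (`fit_correction`, `root_poly2_features`, `invert3x3`, ...) are hypothetical. Reading "calibrate the display with the inverse of the correction" as pre-multiplying content by that inverse is likewise an assumption.

```python
import math
import random


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def linear_features(rgb):
    """Features for a plain 3x3 matrix: just R, G, B."""
    return list(rgb)


def root_poly2_features(rgb):
    """Degree-2 root-polynomial terms: R, G, B, sqrt(RG), sqrt(GB), sqrt(RB).
    Every term scales linearly with exposure, unlike ordinary polynomial terms."""
    r, g, b = rgb
    return [r, g, b, math.sqrt(r * g), math.sqrt(g * b), math.sqrt(r * b)]


def fit_correction(features, targets):
    """Ordinary least squares: find W (3 x p) minimising sum_i ||W f_i - t_i||^2
    by solving the normal equations (F^T F) w_k = F^T t_k for each output channel k."""
    p = len(features[0])
    gram = [[sum(f[i] * f[j] for f in features) for j in range(p)] for i in range(p)]
    W = []
    for k in range(3):
        rhs = [sum(f[i] * t[k] for f, t in zip(features, targets)) for i in range(p)]
        W.append(solve_linear(gram, rhs))
    return W


def apply_correction(W, f):
    """Multiply a 3 x p correction matrix by a feature vector of length p."""
    return [sum(w * x for w, x in zip(row, f)) for row in W]


def invert3x3(M):
    """Invert a 3x3 matrix column by column (solve M x = e_j for each unit vector e_j)."""
    cols = [solve_linear(M, [1.0 if i == j else 0.0 for i in range(3)]) for j in range(3)]
    return [[cols[j][i] for j in range(3)] for i in range(3)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical camera-RGB -> XYZ matrix, used only to synthesise a test chart.
    M_true = [[0.60, 0.25, 0.10], [0.30, 0.65, 0.05], [0.02, 0.10, 0.90]]
    cam = [[rng.uniform(0.05, 1.0) for _ in range(3)] for _ in range(24)]
    xyz = [apply_correction(M_true, c) for c in cam]

    W_lin = fit_correction([linear_features(c) for c in cam], xyz)
    W_rp = fit_correction([root_poly2_features(c) for c in cam], xyz)
    rp_err = max(abs(a - b) for c, t in zip(cam, xyz)
                 for a, b in zip(apply_correction(W_rp, root_poly2_features(c)), t))

    # Assumed display pre-compensation: content multiplied by the inverse correction
    # lands back on the intended values once the fitted 3x3 correction is applied.
    W_inv = invert3x3(W_lin)
    target = [0.4, 0.5, 0.3]
    roundtrip = apply_correction(W_lin, apply_correction(W_inv, target))
    print("linear fit:", [[round(v, 4) for v in row] for row in W_lin])
    print("root-poly max error:", rp_err)
    print("round trip:", [round(v, 6) for v in roundtrip])
```

Because the synthetic data here are exactly linear, the 3×3 fit recovers `M_true`, and the root-polynomial fit gains nothing. With real chart captures under an LED wall, the extra √ terms are what would let the correction absorb some of the non-linear camera/illuminant mismatch while keeping exposure invariance.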

This paper presents a study on Quality of Experience (QoE) evaluation of 3D objects in Mixed Reality (MR) scenarios. In particular, a subjective test was performed with Microsoft HoloLens, considering different degradations affecting the geometry and texture of the content. Apart from analyzing the perceptual effects of these artifacts, and given the need for recommendations on the subjective assessment of immersive media, this study also aimed to: 1) check the appropriateness of a single-stimulus methodology (Absolute Category Rating with Hidden Reference, ACR-HR) for these scenarios, where observers have fewer references than with traditional media; 2) analyze the possible impact of environmental lighting conditions on the quality evaluation of 3D objects in MR; and 3) benchmark state-of-the-art objective metrics in this context. The subjective results provide insights for recommendations on subjective testing in MR/AR, showing that ACR-HR can be used in similar QoE tests and revealing the interplay among the lighting conditions, the content characteristics, and the type of degradation. The objective results show acceptable performance of perceptual metrics for geometry quantization artifacts and point out the need for further research on metrics covering both geometry and texture compression degradations.
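Two computations underlie this kind of study. The first turns ACR-HR ratings into differential scores. The second benchmarks objective metrics against those scores. The sketch below is a minimal illustration, assuming a 5-point ACR scale and the differential viewer score used for ACR-HR in ITU-T Rec. P.910, DV = V(PVS) − V(REF) + 5, averaged over subjects into a DMOS. It then reports Pearson (PLCC) and Spearman (SROCC) correlations between DMOS and a metric. Benchmarks often fit a nonlinear (e.g. logistic) mapping before computing PLCC; that step is omitted here. The ratings, metric values, and function names (`differential_mos`, `pearson`, `spearman`) are hypothetical and are not the paper's data or pipeline.

```python
import math
from statistics import mean


def differential_mos(ratings, ref_of, scale_max=5):
    """ACR-HR differential scores: DV = V(PVS) - V(REF) + scale_max per subject,
    then averaged over subjects to give a DMOS per processed stimulus (PVS)."""
    dmos = {}
    for pvs, ref in ref_of.items():
        dv = [subject[pvs] - subject[ref] + scale_max for subject in ratings.values()]
        dmos[pvs] = mean(dv)
    return dmos


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def spearman(x, y):
    """Spearman rank-order correlation coefficient (SROCC) = PLCC of the ranks."""
    return pearson(ranks(x), ranks(y))


if __name__ == "__main__":
    # Hypothetical ratings on a 1-5 ACR scale; "ref" is the hidden reference.
    ratings = {
        "s1": {"ref": 5, "q1": 4, "q2": 3, "q3": 1},
        "s2": {"ref": 4, "q1": 4, "q2": 2, "q3": 1},
        "s3": {"ref": 5, "q1": 5, "q2": 3, "q3": 2},
    }
    ref_of = {"q1": "ref", "q2": "ref", "q3": "ref"}
    dmos = differential_mos(ratings, ref_of)
    # Hypothetical objective-metric outputs (higher = more distortion).
    metric = {"q1": 0.10, "q2": 0.40, "q3": 0.90}
    keys = sorted(dmos)
    x = [metric[k] for k in keys]
    y = [dmos[k] for k in keys]
    print("DMOS:", dmos)
    print("PLCC:", round(pearson(x, y), 3), "SROCC:", round(spearman(x, y), 3))
```

A distortion-style metric correlates negatively with DMOS, so its magnitude is what matters when comparing metrics. In a real benchmark the correlations would be computed separately per degradation type (e.g. geometry quantization vs. texture compression) to expose the gaps the abstract reports.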