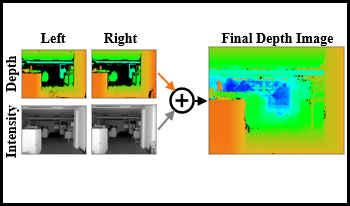

Solid-state lidar cameras produce 3D images that are useful in applications such as robotics and self-driving vehicles. However, range is limited by the lidar laser power, and features such as perpendicular surfaces and dark objects pose difficulties. We propose using the intensity images inherent in lidar camera data, formed from the total laser and ambient light collected in each pixel, to extract additional depth information and boost ranging performance. Using a pair of off-the-shelf lidar cameras and a conventional stereo depth algorithm to process the intensity images, we demonstrate a 2× increase in the native lidar maximum depth range in an indoor environment and an almost 10× increase outdoors. Depth information is also extracted from features in the environment, such as dark objects, floors, and ceilings, which are otherwise not detected by the lidar sensor. While the specific technique presented is useful in applications involving multiple lidar cameras, the principle of extracting depth data from lidar camera intensity images could also be extended to standalone lidar cameras using monocular depth techniques.
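
As a rough illustration of the stereo step, the sketch below applies the standard pinhole triangulation relation Z = f·B/d, which converts a disparity map from a rectified image pair into depth. It is not the specific algorithm used in this work; the function name `disparity_to_depth` and the focal length and baseline values in the usage line are hypothetical, and the intensity images are assumed to be rectified already.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert stereo disparity (pixels) to depth (metres) via Z = f * B / d.

    Assumes a rectified stereo pair; focal_px and baseline_m are
    illustrative parameters, not values taken from the text.
    Pixels with non-positive disparity (no match) are returned as NaN.
    """
    d = np.asarray(disparity_px, dtype=float)
    depth = np.full(d.shape, np.nan)
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth

# Hypothetical numbers: 500 px focal length, 0.1 m baseline between the cameras.
depth_m = disparity_to_depth([10.0, 0.0, 5.0], focal_px=500.0, baseline_m=0.1)
```

Because depth is inversely proportional to disparity, sub-pixel disparity errors dominate at long range, which is one reason a wider baseline between the two cameras helps when extending range.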

Privacy is of growing importance in several domains, especially for street-view images. The conventional approach is to automatically detect and blur sensitive information in these images. However, the processing cost of blurring increases with the ever-growing resolution of images. We propose a system that remains cost-effective even when the resolution increases by a factor of 2.5. The new system uses depth data obtained from LiDAR to significantly reduce the search space for detection, thereby reducing the processing cost. In addition, we test several detectors on the reduced detection space and, based on state-of-the-art deep learning detectors, provide an alternative to the existing HoG-SVM-Deep system that is faster and achieves higher performance.
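
One way depth can shrink a detector's search space is sketched below, under assumptions that are not stated in the text: a per-pixel depth map aligned with the image, a hypothetical maximum range beyond which objects are too small to need blurring, and a pinhole model for the expected object size. The names `candidate_mask` and `expected_height_px` are illustrative only and do not describe the system's actual implementation.

```python
import numpy as np

def candidate_mask(depth_m, max_range_m):
    """Boolean mask of pixels worth passing to a detector.

    Assumption: pixels with missing depth (NaN or <= 0) or beyond
    max_range_m are skipped. max_range_m is a hypothetical threshold.
    """
    depth = np.asarray(depth_m, dtype=float)
    return np.isfinite(depth) & (depth > 0) & (depth <= max_range_m)

def expected_height_px(depth_m, focal_px, object_height_m):
    """Pinhole estimate of an object's image height: h = f * H / Z.

    Knowing the expected size at each depth lets a detector test one
    window scale per location instead of a full scale pyramid.
    """
    return focal_px * object_height_m / depth_m

# Toy 2x2 depth map in metres; NaN marks a pixel with no LiDAR return.
depth = np.array([[2.0, 50.0], [np.nan, 10.0]])
mask = candidate_mask(depth, max_range_m=20.0)
kept_fraction = mask.mean()  # share of pixels still searched
```

In this toy case half of the pixels are discarded before detection, and the remaining ones each need only a single window scale.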