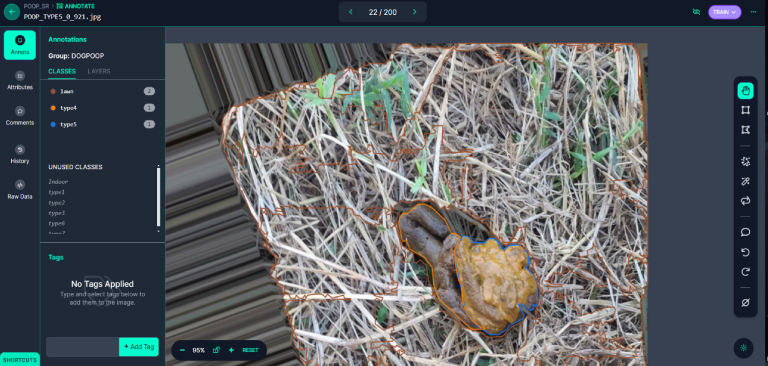

As pets now outnumber newborns in many households, demand for pet medical care has surged, placing a significant burden on pet owners. To address this, our experiment uses image recognition technology to make a preliminary assessment of dogs' health, providing a rapid and economical health assessment method. Through collaboration, we collected 2613 stool photos, augmented them to a total of 6079 images, and analyzed them using LabVIEW and the YOLOv8 segmentation model. The model achieved a precision of 86.805%, a recall of 74.672%, and an mAP50 of 83.354%, indicating a high recognition rate in determining the condition of dog stools. Given advancing technology and the proliferation of mobile devices, the aim of this experiment is to develop an application that lets pet owners assess their pets’ health anytime and manage it more conveniently. The experiment also aims to expand the database through cloud computing, further optimize the model, and establish a global interactive pet health community. These developments could advance innovation in pet medical care, provide practical health management tools for pet families, and offer substantial help to more pet owners in the future.
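The reported precision, recall and mAP50 all rest on matching predictions to ground truth at an intersection-over-union (IoU) threshold of 0.5. The sketch below illustrates that computation under simplifying assumptions: it uses axis-aligned bounding boxes rather than the segmentation masks YOLOv8 produces, handles a single class, and computes average precision with all-point interpolation. The function names are hypothetical, and evaluators in practice (including the one behind the figures above) may use different matching or interpolation schemes.

```python
from typing import List, Tuple

# A box is (x1, y1, x2, y2) in pixels; a prediction is (box, confidence).
Box = Tuple[float, float, float, float]
Pred = Tuple[Box, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds: List[Pred], gts: List[Box], thr: float = 0.5) -> List[int]:
    """Greedy matching, highest confidence first. Returns 1 (true positive)
    or 0 (false positive) per prediction, in descending-confidence order."""
    used = [False] * len(gts)
    flags = []
    for box, _ in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_v = -1, thr
        for j, gt in enumerate(gts):
            v = iou(box, gt)
            if not used[j] and v >= best_v:
                best_j, best_v = j, v
        if best_j >= 0:
            used[best_j] = True  # each ground truth can be matched only once
        flags.append(1 if best_j >= 0 else 0)
    return flags


def precision_recall(preds: List[Pred], gts: List[Box], thr: float = 0.5):
    """Precision = TP / predictions, recall = TP / ground truths."""
    flags = match(preds, gts, thr)
    tp = sum(flags)
    precision = tp / len(flags) if flags else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


def average_precision(preds: List[Pred], gts: List[Box], thr: float = 0.5) -> float:
    """All-point interpolated AP for one class; mAP50 is its mean over classes."""
    flags = match(preds, gts, thr)
    if not gts:
        return 0.0
    precisions, recalls, tp = [], [], 0
    for k, f in enumerate(flags, start=1):
        tp += f
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    ap, prev_r = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_r) * max(precisions[i:])  # precision envelope
        prev_r = r
    return ap


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.8), ((21, 21, 30, 30), 0.7)]
p, r = precision_recall(preds, gts)
ap = average_precision(preds, gts)
print(f"precision={p:.3f} recall={r:.3f} AP50={ap:.3f}")
# precision=0.667 recall=1.000 AP50=0.833
```

Here the second prediction overlaps nothing and counts as a false positive, so precision is 2/3 while both ground-truth boxes are found (recall 1.0). For a model that distinguishes several stool conditions, mAP50 would be the mean of `average_precision` over the classes.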

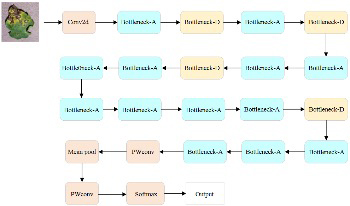

Crop diseases have long been a major threat to agricultural production, significantly reducing both the yield and quality of agricultural products. Traditional disease recognition methods suffer from high costs and low efficiency, making them inadequate for modern agricultural requirements. With the continuous development of artificial intelligence, crop disease image recognition based on deep learning has become a research hotspot. Convolutional neural networks can automatically extract features and learn end to end, resulting in better recognition performance. However, they also face challenges such as high computational cost and difficulty of deployment on mobile devices. In this study, we aim to improve model recognition accuracy while reducing computational cost and model size for deployment on mobile platforms. Targeting the recognition of tomato leaf diseases, we propose an image recognition method based on a lightweight MCA-MobileNet and a Wasserstein generative adversarial network (WGAN). By incorporating an improved multiscale feature fusion module and a coordinate attention mechanism into MobileNetV2, we developed the lightweight MCA-MobileNet model, which focuses more on disease spot information in tomato leaves while significantly reducing the parameter count. We employ WGAN for data augmentation to address insufficient and imbalanced original sample data. Experimental results demonstrate that training on the augmented dataset effectively improves the model’s recognition accuracy and robustness. Compared to traditional networks, MCA-MobileNet shows significant improvements in metrics such as accuracy, precision, recall, and F1-score. With only 2.75M trainable parameters, it achieves strong performance in recognizing tomato leaf diseases and is well suited to deployment on mobile or embedded devices.
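Coordinate attention, one of the components added to MobileNetV2 here, replaces a single global pooling with two one-dimensional poolings along height and width, so the resulting attention weights keep positional information, which is intended to help the network emphasize where disease spots lie. The NumPy sketch below follows the generic coordinate attention design, not the authors' modified MCA-MobileNet block. It processes one feature map, uses random matrices in place of learned 1×1 convolution weights, uses ReLU as the intermediate nonlinearity (the original design's choice may differ), and omits biases and batch normalization. The reduction ratio `r = 8` is an arbitrary assumption.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def coordinate_attention(x: np.ndarray, w_reduce: np.ndarray,
                         w_h: np.ndarray, w_w: np.ndarray) -> np.ndarray:
    """Coordinate attention on one feature map x of shape (C, H, W).

    w_reduce: (C_mid, C) shared 1x1 conv; w_h, w_w: (C, C_mid) 1x1 convs.
    Biases and batch normalization are omitted for brevity.
    """
    C, H, W = x.shape
    z_h = x.mean(axis=2)                     # (C, H): pool along width
    z_w = x.mean(axis=1)                     # (C, W): pool along height
    z = np.concatenate([z_h, z_w], axis=1)   # (C, H + W)
    f = np.maximum(w_reduce @ z, 0.0)        # shared 1x1 conv + ReLU -> (C_mid, H + W)
    f_h, f_w = f[:, :H], f[:, H:]
    g_h = sigmoid(w_h @ f_h)                 # (C, H) attention along height
    g_w = sigmoid(w_w @ f_w)                 # (C, W) attention along width
    # Reweight every position (c, i, j) by its row and column attention.
    return x * g_h[:, :, None] * g_w[:, None, :]


rng = np.random.default_rng(0)
C, H, W, r = 32, 14, 14, 8                   # r: assumed reduction ratio
x = rng.standard_normal((C, H, W))
y = coordinate_attention(
    x,
    rng.standard_normal((C // r, C)),        # stand-ins for learned weights
    rng.standard_normal((C, C // r)),
    rng.standard_normal((C, C // r)),
)
print(y.shape)  # (32, 14, 14)
```

Because every attention weight lies strictly between 0 and 1, the block only rescales activations, and its extra cost is three small weight matrices with C·C/r entries each, which is consistent with a lightweight design.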

Applying saliency models based on the visual selective attention mechanism to image recognition preprocessing is a promising approach. However, because the mechanism of visual perception is still not well understood, existing visual saliency models remain imperfect. To this end, this paper proposes a novel image recognition approach using a multiscale saliency model and GoogLeNet. First, a multiscale convolutional neural network is used to construct multiscale saliency maps, which serve as filters. Second, the original image is combined with the saliency maps to generate a filtered image that highlights the salient regions and suppresses the background. Third, image recognition is performed with the classical GoogLeNet model. Extensive experiments were conducted on the standard MSRA10K image database, comparing four commonly used evaluation metrics. The experimental results show that the proposed method outperforms several state-of-the-art image recognition methods on the test images and more closely approximates the results of human visual observation.
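The filtering step (combining the original image with multiscale saliency maps so that salient regions are kept and background is suppressed) can be sketched as an element-wise weighting. The fusion rule below is an assumption for illustration: it averages nearest-neighbour-upsampled maps, min-max normalizes the result to [0, 1], and multiplies each colour channel by it. The paper's exact combination may differ, the saliency maps here are hand-made rather than produced by a multiscale CNN, and the later classification stage (GoogLeNet in the paper) is not shown.

```python
import numpy as np


def upsample_nearest(m: np.ndarray, h: int, w: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D saliency map to (h, w)."""
    rows = np.arange(h) * m.shape[0] // h
    cols = np.arange(w) * m.shape[1] // w
    return m[rows[:, None], cols[None, :]]


def saliency_filter(image: np.ndarray, maps: list) -> np.ndarray:
    """Weight an (H, W, 3) image by fused multiscale saliency maps (assumed rule)."""
    h, w = image.shape[:2]
    fused = np.mean([upsample_nearest(m, h, w) for m in maps], axis=0)
    lo, hi = fused.min(), fused.max()
    # Normalize to [0, 1]; a constant map leaves the image unchanged.
    fused = (fused - lo) / (hi - lo) if hi > lo else np.ones_like(fused)
    return image * fused[:, :, None]  # suppress low-saliency (background) pixels


img = np.full((4, 4, 3), 200.0)
coarse = np.array([[1.0, 0.0], [0.0, 0.0]])  # 2x2 map: top-left salient
fine = np.zeros((4, 4))
fine[:2, :2] = 1.0                           # 4x4 map: same region
out = saliency_filter(img, [coarse, fine])
print(out[:, :, 0])
```

The top-left quadrant keeps its original intensity while the rest of the image is driven to zero; with soft (non-binary) saliency maps the background would be attenuated rather than removed.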