The 3D extension of the High Efficiency Video Coding standard (3D-HEVC) has significantly improved the coding efficiency of 3D video, but at the cost of a substantial rise in computational complexity. In particular, depth map encoding accounts for 84% of the total encoding time in 3D-HEVC, primarily because the encoder must traverse the coding unit (CU) depth levels to determine the most suitable CU size. Exploiting the distinct texture distribution patterns of depth maps and the strong correlation between the selected CU size and the texture complexity of the current block, this paper designs an adaptive depth early termination convolutional neural network, named ADET-CNN, for depth map coding. The network takes an original 64 × 64 coding tree unit (CTU) as input and outputs segmentation probabilities for the various CU sizes within the CTU. This eliminates the exhaustive calculation and comparison otherwise needed to determine the optimal CU size, thereby enabling faster intra-coding of the depth map. Experimental results indicate that the proposed method reduces depth map encoding time by 58% while maintaining the quality of the synthesized views.
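
The idea of replacing the exhaustive CU-size search with predicted segmentation probabilities can be illustrated with a minimal sketch. The abstract does not specify the output layout of ADET-CNN, so the `split_prob` structure (one grid of split probabilities per quadtree depth), the `partition_ctu` helper, the 0.5 threshold, and the 8 × 8 minimum CU size are all assumptions made for illustration only.

```python
import numpy as np

CTU_SIZE = 64      # input block size stated in the abstract
MIN_CU_SIZE = 8    # assumed smallest CU size (hypothetical for this sketch)


def partition_ctu(split_prob, threshold=0.5):
    """Return the leaf CUs of one CTU as (x, y, size) tuples.

    split_prob[d] is a (2**d, 2**d) array holding, for every CU at
    quadtree depth d, a hypothetical predicted probability that the CU
    should be split further. A CU stops splitting (early termination)
    as soon as its probability does not exceed the threshold.
    """
    leaves = []

    def visit(x, y, size, depth):
        if size > MIN_CU_SIZE:
            row, col = y // size, x // size
            if split_prob[depth][row, col] > threshold:
                half = size // 2
                for dy in (0, half):
                    for dx in (0, half):
                        visit(x + dx, y + dy, half, depth + 1)
                return
        # Early termination: keep this CU size, skip deeper levels.
        leaves.append((x, y, size))

    visit(0, 0, CTU_SIZE, 0)
    return leaves


# Made-up probabilities: split the CTU, then split only its top-left 32x32 CU.
split_prob = {
    0: np.array([[0.9]]),
    1: np.array([[0.8, 0.1],
                 [0.2, 0.3]]),
    2: np.zeros((4, 4)),
}
leaves = partition_ctu(split_prob)
```

In this toy input the traversal visits only the CUs the probabilities point to, instead of evaluating every size at every position, which is the source of the time saving the abstract describes.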

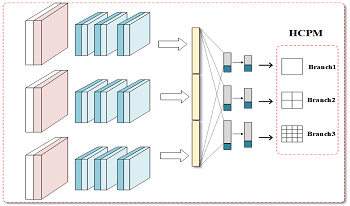

To address the low completeness of scene reconstruction achieved by existing methods in challenging areas such as weakly textured, textureless, and non-diffuse reflective regions, this paper proposes a multiview stereo high-completeness network that combines a light multiscale feature adaptive aggregation module (LightMFA2), SoftPool, and a sensitive global depth consistency checking method. LightMFA2 is designed to adaptively learn critical information from the generated multiscale feature maps, which addresses the difficulty of feature extraction in challenging areas. SoftPool is added to the regularization process to downsample the 2D cost matching map, which reduces information redundancy, prevents the loss of useful information, and accelerates network computation. The sensitive global depth consistency checking method filters depth outliers: it discards pixels with confidence below 0.35 and computes the pixel reprojection error and the depth reprojection error. Experimental results on the Technical University of Denmark (DTU) dataset show that the proposed multiview stereo high-completeness 3D reconstruction network significantly improves completeness and overall quality, with a completeness error of 0.2836 mm and an overall error of 0.3665 mm.
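
The downsampling role of SoftPool can be illustrated with a minimal NumPy sketch. It follows the usual definition of SoftPool, in which each window is reduced to the softmax-weighted sum of its values; the 2 × 2 window, the `softpool2d` name, and the toy `cost` array are assumptions for illustration and are not taken from the paper's network.

```python
import numpy as np


def softpool2d(x, k=2):
    """Downsample a 2D map with non-overlapping k x k SoftPool windows.

    Each output value is sum(softmax(w) * w) over its window w, so large
    activations dominate (as in max pooling) while every value still
    contributes (as in average pooling).
    """
    h, w = x.shape
    h, w = h - h % k, w - w % k  # crop so the map tiles evenly
    windows = (
        x[:h, :w]
        .reshape(h // k, k, w // k, k)
        .transpose(0, 2, 1, 3)
        .reshape(h // k, w // k, k * k)
    )
    # Subtract the window maximum before exponentiating for stability.
    weights = np.exp(windows - windows.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return (weights * windows).sum(axis=-1)


# Toy 4 x 4 "cost map" (made-up values), pooled to 2 x 2.
cost = np.arange(16, dtype=float).reshape(4, 4)
pooled = softpool2d(cost)
```

Each pooled value lies between the window mean and the window maximum, which is one way to see why this kind of pooling halves the resolution while discarding less information than hard max pooling.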