Color accuracy in conventional and digital printing processes relies on press characterization to establish the relationship between input device values and output colorimetric or spectral reflectance values. Conventional models, such as Murray–Davies, Clapper–Yule, Yule–Nielsen, and the Yule–Nielsen modified spectral Neugebauer model, are known for providing accurate chromatic and spectral predictions. However, they do not account for the effects of black ink and struggle to predict light hues accurately. To predict the spectral reflectance (the color fingerprint) of halftone printed images more accurately, this study introduces a novel machine-learning-based deep neural network combined with an improved particle swarm optimization algorithm. The result is a spectral reflectance color prediction model for CMYK printing that eliminates the need to adjust for dot gain during printing. Evaluating this model on a lithographic offset press, we demonstrate its superior performance, evidenced by significantly lower root mean square error and color difference (ΔE*₀₀) values compared to existing methods. This approach minimizes color deviations during printing and reduces material and energy consumption, ultimately enhancing the quality of printed materials.
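
For readers unfamiliar with the conventional models named above, the following minimal sketch shows the single-ink Yule–Nielsen prediction, which reduces to Murray–Davies when the factor n equals 1, together with the root mean square error used to compare spectra. It is an illustration of the classical baselines only, not the neural network model proposed in the study. The function names and the three-band reflectance values are made up for this example.

```python
import math


def yule_nielsen(coverage, r_solid, r_paper, n=1.0):
    """Predict the spectral reflectance of a single-ink halftone.

    coverage: fractional dot area in [0, 1]
    r_solid, r_paper: reflectance per wavelength band (same length)
    n: Yule-Nielsen factor; n = 1 gives the Murray-Davies model
    """
    if len(r_solid) != len(r_paper):
        raise ValueError("spectra must have the same number of bands")
    return [
        (coverage * rs ** (1.0 / n) + (1.0 - coverage) * rp ** (1.0 / n)) ** n
        for rs, rp in zip(r_solid, r_paper)
    ]


def rmse(predicted, measured):
    """Root mean square error between two spectra of equal length."""
    squared = sum((p - m) ** 2 for p, m in zip(predicted, measured))
    return math.sqrt(squared / len(predicted))


# Hypothetical three-band reflectances, for illustration only.
paper = [0.90, 0.88, 0.85]
solid_cyan = [0.05, 0.30, 0.60]

murray_davies = yule_nielsen(0.5, solid_cyan, paper)           # n = 1
yule_nielsen_n2 = yule_nielsen(0.5, solid_cyan, paper, n=2.0)  # n = 2
```

With n greater than 1 the predicted reflectance at a given nominal coverage is lower than the Murray–Davies value, which is how the Yule–Nielsen factor models optical dot gain.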

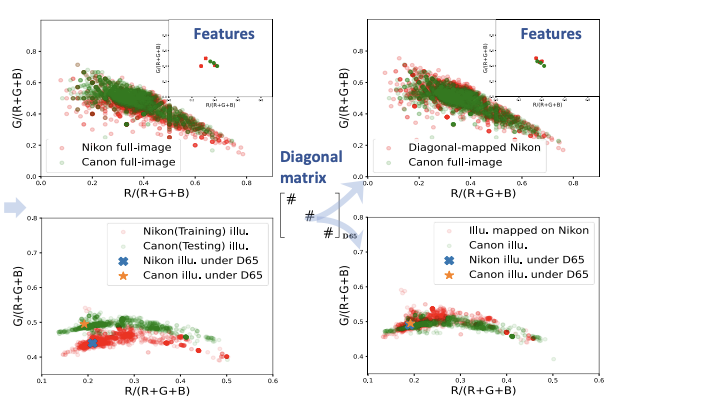

Deep Neural Networks (DNNs) have been widely used for illumination estimation, but training them is time-consuming and requires sensor-specific data collection. Our proposed method uses a dual-mapping strategy and requires only a simple white point from a test sensor under a D65 condition. This allows us to derive a mapping matrix, which enables the reconstruction of image data and illuminants. In the second mapping phase, we transform the reconstructed image data into sparse features, which are then optimized with a lightweight multi-layer perceptron (MLP) model using the reconstructed illuminants as ground truths. This approach effectively reduces sensor discrepancies and delivers performance on par with leading cross-sensor methods. It requires only a small amount of memory (∼0.003 MB) and takes ∼1 hour to train on an RTX3070Ti GPU. More importantly, the method runs very fast, taking ∼0.3 ms on a GPU and ∼1 ms on a CPU, and is not sensitive to the input image resolution. It therefore offers a practical solution to the data recollection challenge faced by the industry.
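
The abstract does not state how the mapping matrix is built from the white point. As one possible reading, the sketch below assumes a diagonal (von Kries-style) matrix of per-channel gains that maps the test sensor's D65 white point onto that of a reference sensor. The function names and white-point values are hypothetical and are not taken from the paper.

```python
def diagonal_mapping(white_test, white_ref):
    """Per-channel gains mapping the test sensor's white onto the reference white.

    Assumption: a diagonal matrix is sufficient; the paper may use a
    different (for example, full 3x3) mapping.
    """
    return [ref / test for ref, test in zip(white_ref, white_test)]


def apply_mapping(gains, rgb):
    """Apply the diagonal mapping to one RGB triplet (pixel or illuminant)."""
    return [g * c for g, c in zip(gains, rgb)]


# Hypothetical D65 white points of two sensors, for illustration only.
white_test = [0.8, 1.0, 0.6]
white_ref = [0.9, 1.0, 0.7]

gains = diagonal_mapping(white_test, white_ref)
mapped_white = apply_mapping(gains, white_test)
```

The same gains could be applied to both image pixels and illuminant vectors, which matches the abstract's statement that the matrix enables reconstruction of image data and illuminants.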

We applied computational style transfer, specifically of coloration and brush stroke style, to achromatic images of a ghost painting beneath Vincent van Gogh's *Still life with meadow flowers and roses*. Our method extends our previous work in that it uses representative artworks by the ghost painting's author to train a Generative Adversarial Network (GAN) to integrate styles learned from stylistically distinct groups of works. An effective amalgam of these learned styles is then transferred to the target achromatic work.

Illuminant estimation is critically important in computational color constancy, which has attracted great attention and motivated the development of various statistical- and learning-based methods. Past studies, however, seldom investigated the performance of these methods on pure color images (i.e., images dominated by a single pure color), which are actually very common in daily life. In this paper, we develop a lightweight feature-based Deep Neural Network (DNN) model, Pure Color Constancy (PCC). The model uses four color features (i.e., the chromaticities of the maximal, mean, brightest, and darkest pixels) as inputs and contains fewer than 0.5k parameters. It takes only 0.25 ms to process an image and has good cross-sensor performance. The angular errors on three standard datasets are generally comparable to those of state-of-the-art methods. More importantly, the model yields significantly smaller angular errors on the pure color images in the PolyU Pure Color dataset, which we recently collected.
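
The four color features and the angular error metric can be sketched as follows. This is a hedged illustration, not the PCC implementation. It assumes that "maximal" means the per-channel maximum, that brightness is measured by the channel sum, and that chromaticity is the pair (R, G) divided by R + G + B. All function names are hypothetical.

```python
import math


def chromaticity(rgb):
    """Return (r, g) = (R, G) / (R + G + B); neutral if the pixel is black."""
    total = sum(rgb)
    if total == 0:
        return (1.0 / 3.0, 1.0 / 3.0)
    return (rgb[0] / total, rgb[1] / total)


def four_color_features(pixels):
    """Build an 8-value feature vector from a list of (R, G, B) pixels."""
    maximal = tuple(max(p[c] for p in pixels) for c in range(3))
    mean = tuple(sum(p[c] for p in pixels) / len(pixels) for c in range(3))
    brightest = max(pixels, key=sum)  # assumed: largest R + G + B
    darkest = min(pixels, key=sum)    # assumed: smallest R + G + B
    features = []
    for rgb in (maximal, mean, brightest, darkest):
        features.extend(chromaticity(rgb))
    return features


def angular_error_deg(estimate, ground_truth):
    """Angle in degrees between estimated and ground-truth illuminant vectors."""
    dot = sum(e * g for e, g in zip(estimate, ground_truth))
    norm = math.sqrt(sum(e * e for e in estimate)) * math.sqrt(
        sum(g * g for g in ground_truth)
    )
    cosine = max(-1.0, min(1.0, dot / norm))  # guard against rounding
    return math.degrees(math.acos(cosine))
```

Because the angular error depends only on the direction of the two vectors, scaling an illuminant estimate by any positive constant leaves the error unchanged.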

Evolving algorithms for 2D facial landmark detection enable applications such as face recognition and facial expression analysis. However, existing methods still produce unstable facial landmarks when applied to videos. Because previous research shows that this instability is caused by inconsistent labeling quality among public datasets, we seek a better understanding of the influence of annotation noise in these datasets. In this paper, we make the following contributions: 1) we propose two metrics that quantitatively measure the stability of detected facial landmarks, 2) we model the annotation noise in an existing public dataset, and 3) we investigate the influence of different types of noise on the training of face alignment neural networks and propose corresponding solutions. Our results demonstrate improvements in both the accuracy and the stability of detected facial landmarks.

Image aesthetic assessment has always been regarded as a challenging task because of the variability of subjective preference. Moreover, the assessment of a photo is also related to its style, semantic content, etc. Conventionally, the estimation of the aesthetic score and the estimation of the style of an image are treated as separate problems. In this paper, we explore the inter-relatedness between aesthetics and image style, and design a neural network that can jointly categorize images by style and predict an aesthetic score distribution. To this end, we propose a multi-task network (MTNet) with an aesthetic column serving as a score predictor and a style column serving as a style classifier. The angular-softmax loss is applied in training the primary style classifiers to maximize the margin among classes in single-label training data; a semi-supervised method is applied to iteratively improve the network's generalization ability. We combine a regression loss and a classification loss in training the aesthetic score predictor. Experiments on the AVA dataset show the superiority of our network in both image attribute classification and aesthetic ranking tasks.
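
The abstract does not specify which regression and classification losses are combined, or how they are weighted. The sketch below shows one common way such a combination could look: a squared error on the mean score plus a weighted cross-entropy on the score distribution. The function names, the choice of losses, and the `weight` parameter are assumptions made for illustration and are not MTNet's actual training objective.

```python
import math


def mean_score(distribution):
    """Expected score for a probability distribution over scores 1..K."""
    return sum((i + 1) * p for i, p in enumerate(distribution))


def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy between target and predicted score distributions."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))


def combined_loss(pred_dist, target_dist, weight=1.0):
    """Assumed form: regression term + weight * classification term."""
    regression = (mean_score(pred_dist) - mean_score(target_dist)) ** 2
    classification = cross_entropy(target_dist, pred_dist)
    return regression + weight * classification


# Hypothetical three-bin score distributions, for illustration only.
target = [0.1, 0.2, 0.7]
good_prediction = [0.1, 0.2, 0.7]
poor_prediction = [0.7, 0.2, 0.1]
```

In this form the regression term penalizes errors in the overall score, while the classification term penalizes mismatches in the shape of the predicted distribution.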