An ideal archival storage system combines longevity, accessibility, low cost, high capacity, and human readability to ensure the persistence and future readability of stored data. At Archiving 2024 [B. M. Lunt, D. Kemp, M. R. Linford, and W. Chiang, “How long is long-term? An update,” Archiving (2024)], the authors’ research group presented a paper that summarized several efforts in this area, including magnetic tapes, optical disks, hard disk drives, solid-state drives, Project Silica (a Microsoft project), DNA, and projects C-PROM, Nano Libris, and Mil Chispa (the last three being the authors’ research). Each storage option offers distinct advantages with respect to these desirable characteristics. This paper provides information on other efforts in this area, including the work by Cerabyte, Norsam Technologies, and Group 47 DOTS, and an update on the authors’ projects C-PROM, Nano Libris, and Mil Chispa.

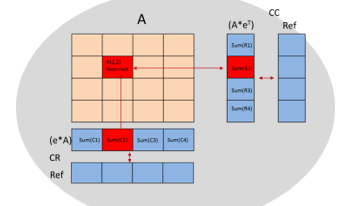

With artificial intelligence (AI) becoming the mainstream approach to solving a myriad of problems across industrial, automotive, medical, military, wearable, and cloud applications, the need for high-performance, low-power embedded devices is stronger than ever. Innovations in designing efficient hardware accelerators for AI tasks also involve making them fault-tolerant so that they work reliably under stressful environmental conditions. These embedded devices could be deployed under varying thermal and electromagnetic interference conditions, which require both the processing blocks and the on-device memories to recover from faults and provide a reliable quality of service. Particularly in the automotive context, ASIL-B compliant AI systems typically implement error-correcting codes (ECC) that provide single-error correction and double-error detection (SECDED). ASIL-D based AI systems implement dual lockstep compute blocks and build processing redundancy to reinforce prediction certainty, in addition to protecting their memories. Fault-tolerant systems go one level further by tripling the processing blocks, so that a fault detected in one processing element is corrected and reinforced by the other two elements. This adds significant silicon area and makes the solution an expensive proposition. In this paper we propose novel techniques that can be applied to a typical deep-learning based embedded solution with many processing stages, such as memory load, matrix-multiply, accumulate, activation functions, and others, to build a robust fault-tolerant system without linearly tripling the compute area and hence the cost of the solution.
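For orientation, the conventional tripled-block baseline described above can be sketched in software as a 2-of-3 majority vote. This is only an illustrative sketch of that baseline, not the techniques proposed in this paper; the function names (`mac`, `majority_vote`, `tmr_mac`) and the `fault_mask` parameter are hypothetical, and a single integer multiply-accumulate stands in for one processing block.

```python
from typing import Sequence


def mac(weights: Sequence[int], inputs: Sequence[int]) -> int:
    """Integer multiply-accumulate, standing in for one processing block."""
    return sum(w * x for w, x in zip(weights, inputs))


def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 majority: each output bit follows at least two replicas."""
    return (a & b) | (a & c) | (b & c)


def tmr_mac(weights: Sequence[int], inputs: Sequence[int], fault_mask: int = 0) -> int:
    """Run three replicas of the block and vote on the results.

    fault_mask is a hypothetical test hook: it is XORed into replica 0
    to emulate bit flips in a single processing element.
    """
    r0 = mac(weights, inputs) ^ fault_mask  # replica with injected fault
    r1 = mac(weights, inputs)               # healthy replica
    r2 = mac(weights, inputs)               # healthy replica
    return majority_vote(r0, r1, r2)


golden = mac([1, 2, 3], [4, 5, 6])                        # fault-free reference
voted = tmr_mac([1, 2, 3], [4, 5, 6], fault_mask=0b100)   # one replica corrupted
print(golden, voted)
```

Because the two healthy replicas agree on every bit, the voted result matches the fault-free reference even though one replica is corrupted. The cost is three full copies of the block, which is the area overhead the abstract aims to avoid.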