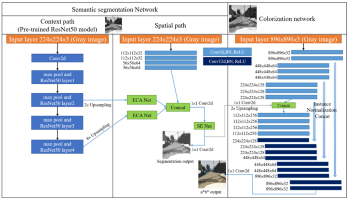

A fully automated colorization model that integrates image segmentation features to enhance both the accuracy and diversity of colorization is proposed. In the model, a multipath architecture is employed, with each path designed to address a specific objective in processing grayscale input images. The context path utilizes a pretrained ResNet50 model to identify object classes, while the spatial path determines the locations of these objects. ResNet50 is a 50-layer deep convolutional neural network (CNN) that uses skip connections to address the challenges of training deep models. It is widely applied in image classification and feature extraction. The outputs from both paths are subsequently fused and fed into the colorization network to ensure precise representation of image structures and to prevent color spillover across object boundaries. The colorization network is designed to handle high-resolution inputs, enabling accurate colorization of small objects and enhancing overall color diversity. The proposed model demonstrates robust performance even when trained on small datasets. Comparative evaluations with CNN-based and diffusion-based colorization approaches show that the proposed model significantly improves colorization quality.

The 3D extension of the High Efficiency Video Coding (3D-HEVC) standard has improved the coding efficiency for 3D videos significantly. However, this improvement has been achieved at the cost of a significant rise in computational complexity. Specifically, the encoding process for the depth map in the 3D-HEVC standard occupies 84% of the total encoding time. This extended time is primarily due to the need to traverse coding unit (CU) depth levels in depth map encoding to determine the most suitable CU size. Acknowledging the evident texture distribution patterns within a depth map and the strong correlation between encoding size selection and the texture complexity of the current encoding block, an adaptive depth early termination convolutional neural network, named ADET-CNN, is designed for the depth map in this paper. It takes an original 64 × 64 coding tree unit (CTU) as the input and provides segmentation probabilities for the various CU sizes within the CTU. This eliminates the exhaustive calculations and comparisons otherwise needed to determine the optimal CU size, thereby enabling faster intra-coding for the depth map. Experimental results indicate that the proposed method achieves a time saving of 58% in depth map encoding while maintaining the quality of synthesized views.
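
To illustrate how per-CU split probabilities can replace an exhaustive search, the following is a minimal sketch, not the ADET-CNN implementation. The function `partition_ctu`, the callable `split_prob`, the 0.5 threshold, and the 8 × 8 minimum CU size are all assumptions introduced here; the abstract does not specify how the network's probabilities are turned into decisions.

```python
def partition_ctu(split_prob, x=0, y=0, size=64, min_size=8, threshold=0.5):
    """Return a list of (x, y, size) CUs chosen by thresholding split probabilities.

    split_prob is a hypothetical callable (x, y, size) -> probability that the
    CU at that position should be split into four quadrants. In the setting
    described above, such probabilities would come from the network.
    """
    # Early termination: stop descending as soon as a split looks unlikely.
    if size <= min_size or split_prob(x, y, size) < threshold:
        return [(x, y, size)]
    half = size // 2
    cus = []
    for dy in (0, half):
        for dx in (0, half):
            cus.extend(
                partition_ctu(split_prob, x + dx, y + dy, half, min_size, threshold)
            )
    return cus


# Toy predictor (an assumption): only blocks anchored at the CTU origin are complex.
def toy_prob(x, y, size):
    return 0.9 if (x, y) == (0, 0) else 0.1


cus = partition_ctu(toy_prob)
```

With the toy predictor, only the top-left corner is refined down to the minimum size, and every other region terminates after a single probability lookup instead of a full rate-distortion comparison.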

Image quality metrics have become invaluable tools for image processing and display system development. These metrics are typically developed for and tested on images and videos of natural content. Text, on the other hand, has unique features and supports a distinct visual function: reading. It is therefore not clear whether these image quality metrics are efficient or optimal as measures of text quality. Here, we developed a domain-specific image quality metric for text and compared its performance against quality metrics developed for natural images. To develop our metric, we first trained a deep neural network to perform text classification on a data set of distorted letter images. We then computed the responses of internal layers of the network to uncorrupted and corrupted images of text, respectively. We used the cosine dissimilarity between the responses as a measure of text quality. Preliminary results indicate that both our model and more established quality metrics (e.g., SSIM) are able to predict general trends in participants’ text quality ratings. In some cases, our model is able to outperform SSIM. We further developed our model to predict response data in a two-alternative forced choice experiment, on which only our model achieved very high accuracy.
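
The comparison of layer responses can be sketched as follows. This is a minimal illustration that assumes cosine dissimilarity means one minus the cosine similarity of the flattened response vectors; the abstract does not give the exact definition, and the function name and the sample vectors are hypothetical.

```python
import math


def cosine_dissimilarity(a, b):
    """Return 1 - cos(angle) between two equal-length response vectors."""
    if len(a) != len(b):
        raise ValueError("response vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine is undefined for an all-zero vector")
    return 1.0 - dot / (norm_a * norm_b)


# Hypothetical flattened activations for a clean and a distorted letter image.
clean = [0.2, 0.9, 0.0, 0.4]
distorted = [0.1, 0.7, 0.3, 0.4]
score = cosine_dissimilarity(clean, distorted)
```

Under this definition, identical responses score 0 and larger values indicate that the distortion changed the internal representation more strongly.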

The performance of a convolutional neural network (CNN) on an image texture detection task as a function of linear image processing and the number of training images is investigated. Performance is quantified by the area under the receiver operating characteristic (ROC) curve (AUC). The Ideal Observer (IO) maximizes AUC but depends on high-dimensional image likelihoods. In many cases, the CNN performance can approximate the IO performance. This work demonstrates counterexamples where a full-rank linear transform degrades the CNN performance below the IO in the limit of large quantities of training data and network layers. A subsequent linear transform changes the images’ correlation structure, improves the AUC, and again demonstrates the CNN dependence on linear processing. Compression strictly decreases or maintains the IO detection performance, while compression can increase the CNN performance, especially for small quantities of training data. Results indicate an optimal compression ratio for the CNN based on task difficulty, compression method, and number of training images. © 2020 Society for Imaging Science and Technology.
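
For readers unfamiliar with the AUC figure of merit, the sketch below computes it through its rank-based (Mann-Whitney) interpretation: the probability that a signal-present image receives a higher score than a signal-absent image, with ties counted as one half. The function name and the sample scores are illustrative assumptions, not data from the study.

```python
def auc(signal_scores, noise_scores):
    """Area under the ROC curve via pairwise comparison of observer scores."""
    if not signal_scores or not noise_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for s in signal_scores:
        for n in noise_scores:
            if s > n:
                wins += 1.0
            elif s == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(signal_scores) * len(noise_scores))


# Hypothetical observer outputs for texture-present and texture-absent images.
value = auc([0.8, 0.4], [0.6, 0.2])
```

An AUC of 0.5 corresponds to chance performance and 1.0 to perfect separation of the two classes.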

In public spaces such as zoos and sports facilities, the presence of a fence often annoys tourists and professional photographers. There is a demand for a post-processing tool that produces a non-occluded view from an image or video. This “de-fencing” task is divided into two stages: detecting the fence regions and filling in the missing parts. For a decade or more, various methods have been proposed for video-based de-fencing. However, only a few single-image-based methods have been proposed. In this paper, we mainly focus on single-image fence removal. Conventional approaches suffer from inaccurate and non-robust fence detection and inpainting because less content information is available in a single image. To solve these problems, we combine novel methods based on a deep convolutional neural network (CNN) with classical domain knowledge in image processing. The training process requires both fence images and the corresponding fence-free ground truth images. Therefore, we synthesize natural fence images from real images. Moreover, spatial filtering (e.g., a Laplacian filter and a Gaussian filter) improves the performance of the CNN for detection and inpainting. Our proposed method can automatically detect a fence and generate a clean image without any user input. Experimental results demonstrate that our method is effective for a broad range of fence images.
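
The two spatial filters mentioned above can be sketched with a plain 3 × 3 neighborhood operation. This is a minimal illustration only: the kernel values are the common textbook 4-neighbor Laplacian and binomial Gaussian approximation, the border handling (edge replication) is an assumption, and the abstract does not say how the filters are combined with the CNN.

```python
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]

GAUSSIAN = [[1 / 16, 2 / 16, 1 / 16],
            [2 / 16, 4 / 16, 2 / 16],
            [1 / 16, 2 / 16, 1 / 16]]


def filter3x3(image, kernel):
    """Apply a 3x3 kernel to a 2D list of numbers, replicating border pixels."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    # Clamp coordinates so that border pixels are repeated.
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += kernel[ky][kx] * image[yy][xx]
            out[y][x] = acc
    return out


# A single bright pixel: the Laplacian highlights it, the Gaussian spreads it.
impulse = [[0, 0, 0],
           [0, 1, 0],
           [0, 0, 0]]
edges = filter3x3(impulse, LAPLACIAN)
smooth = filter3x3(impulse, GAUSSIAN)
```

The Laplacian responds strongly to thin, high-contrast structures such as fence wires, while the Gaussian suppresses fine detail and noise.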

Facial landmark localization plays a critical role in many face analysis tasks. In this paper, we present a novel local-global aggregate network (LGA-Net) for robust facial landmark localization of faces in the wild. The network consists of two convolutional neural network levels which aggregate local and global information for better prediction accuracy and robustness. Experimental results show our method overcomes typical problems of cascaded networks and outperforms state-of-the-art methods on the 300-W [1] benchmark.

The latest trend in image sensor technology, which allows submicron pixel sizes for high-end mobile devices, comes with very high image resolutions and an irregularly sampled Quad Bayer color filter array (CFA). Sustaining image quality becomes a challenge for the image signal processor (ISP), particularly for demosaicing. Inspired by the success of deep learning approaches to standard Bayer demosaicing, we investigate how the artifact-prone Quad Bayer array can benefit from them. We found that deeper networks are capable of improving image quality and reducing artifacts; however, deeper networks can hardly be deployed on mobile devices given the very high image resolutions involved: 24MP, 36MP, 48MP. In this article, we propose an efficient end-to-end solution to bridge this gap: a duplex pyramid network (DPN). A deep hierarchical structure, residual learning, and linear feature map depth growth allow a very large receptive field, yielding better detail restoration and artifact reduction while staying computationally efficient. Experiments show that the proposed network outperforms the state of the art for standard and Quad Bayer demosaicing. For the challenging Quad Bayer CFA, the proposed method reduces visual artifacts better than state-of-the-art deep networks, including artifacts existing in conventional commercial solutions. While superior in image quality, it is 2–25 times faster than state-of-the-art deep neural networks and therefore feasible for deployment on mobile devices, paving the way for a new era of on-device deep ISPs.
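
The difference between the two sampling layouts can be made concrete with a small sketch. In a Quad Bayer CFA, each color of the standard Bayer pattern covers a 2 × 2 block of pixels, so same-color samples are spaced unevenly. The function names and the RGGB ordering below are assumptions chosen for illustration; actual sensors may start the pattern on a different color.

```python
def cfa_color(row, col, quad=False):
    """Return 'R', 'G', or 'B' for the color filter at a pixel position."""
    block = 2 if quad else 1  # Quad Bayer repeats each color over a 2x2 block
    r = (row // block) % 2
    c = (col // block) % 2
    pattern = [["R", "G"],
               ["G", "B"]]  # RGGB ordering is an assumption
    return pattern[r][c]


def cfa_tile(size, quad=False):
    """Return the CFA layout of a size x size pixel region as a list of strings."""
    return [
        "".join(cfa_color(r, c, quad) for c in range(size))
        for r in range(size)
    ]


bayer = cfa_tile(4)
quad_bayer = cfa_tile(4, quad=True)
```

Printing `quad_bayer` row by row shows that horizontally adjacent red samples are separated alternately by one and three pixels, which is one reason demosaicing is more artifact-prone than with the uniformly spaced standard Bayer layout.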

With the development of interactive robots and machines, the understanding and reproduction of facial emotions by computers have become important research areas. To achieve this goal, several deep learning-based facial image analysis and synthesis techniques have recently been proposed. However, it is difficult to construct a facial image dataset with accurate emotion tags (annotations, metadata), because such emotion tags depend significantly on human perception and cognition. In this study, we constructed a facial image dataset with accurate emotion tags through subjective experiments. First, based on image retrieval using emotion terms, we collected more than 1,600,000 facial images from SNS. Next, using face detection, we obtained approximately 380,000 facial region images as “big data.” Then, through subjective experiments, we manually checked the facial expressions and the corresponding emotion tags of the facial regions. Finally, we obtained approximately 5,500 facial images with accurate emotion tags as “good data.” To validate our facial image dataset for deep learning-based facial image analysis and synthesis, we applied it to CNN-based facial emotion recognition and GAN-based facial emotion reconstruction. Through these experiments, we confirmed the feasibility of our facial image dataset for deep learning-based emotion recognition and reconstruction.

JPEG compression is one of the image degradations that often occur in image storage and retouching. Estimating the properties of JPEG compression degradation is important for JPEG deblocking algorithms and image forensic analysis. JPEG degradation exists not only in the JPEG file format but also in other image formats, because JPEG distortion remains after conversion to another image format. Moreover, the JPEG degradation is not always uniform within an image when the image is collaged from different JPEG-compressed photos. In this paper, pixelwise detection of JPEG compression degradation and estimation of the JPEG quality factor using a convolutional neural network are proposed. The proposed network outputs an estimated JPEG quality factor map and a compression flag map from an input image. Experimental results show that the proposed network successfully infers the quality factors and discriminates between non-JPEG-compressed images and JPEG-compressed images. We also demonstrate that the proposed network can spot a collaged region in a fake image composed of images that have different JPEG compression properties. Additionally, the network reveals that the image datasets Set5 and Set14, often used to evaluate super-resolution algorithms, contain JPEG-compressed low-quality images, which are inappropriate for such evaluation.
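
As background on what a quality factor controls, the sketch below reproduces the quality-to-quantization-table scaling used by the IJG libjpeg reference encoder. The abstract does not state which definition of quality factor the paper uses, so treating it as the libjpeg quality setting is an assumption. Only the first row of the standard luminance table is shown for brevity, and the function name is hypothetical.

```python
# First row of the standard JPEG luminance quantization table (ITU-T T.81, Annex K).
BASE_LUMA_ROW = [16, 11, 10, 16, 24, 40, 51, 61]


def scale_quant(base, quality):
    """Scale base quantization steps for a quality setting in 1..100 (libjpeg style)."""
    quality = min(max(quality, 1), 100)
    # Below 50 the steps grow quickly; above 50 they shrink linearly toward 1.
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    # Round to nearest, then clamp to the 8-bit baseline range 1..255.
    return [min(max((v * scale + 50) // 100, 1), 255) for v in base]


q50 = scale_quant(BASE_LUMA_ROW, 50)
q25 = scale_quant(BASE_LUMA_ROW, 25)
q100 = scale_quant(BASE_LUMA_ROW, 100)
```

Lower quality settings give larger quantization steps and therefore stronger blocking artifacts. Because each region of a collaged image was quantized with its own step sizes, a pixelwise estimate of the quality factor can differ from region to region.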