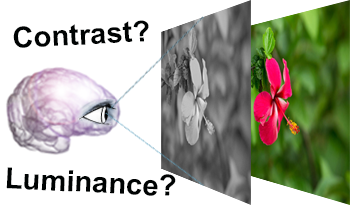

Grayscale images are essential in image processing and computer vision. They emphasize luminance and contrast, which highlights important visual features, and they are readily compatible with other algorithms. Their simplified representation also makes them efficient to store and transmit. Although preserving contrast is important for visual quality, preserving the information relevant to a specific application or task may matter more for achieving optimal performance. To evaluate and compare decolorization algorithms, we designed a psychological experiment in which participants were asked to imagine color images in a hypothetical "colorless world" and to select the grayscale image that best matched their mental visualization. We compared two types of algorithms: (i) perception-based simple color space conversions, and (ii) spatial contrast-based algorithms, including iteration-based methods. On average, CIELAB performed best, which provides further evidence for the effectiveness of perception-based decolorization algorithms. The spatial contrast-based algorithms performed relatively poorly, possibly because of DC offset and artificially generated contrast, although their outputs were associated with shorter selection times. Notably, no single algorithm consistently outperformed the others across all test images. Based on our experimental results and analysis, we discuss in detail the significance of contrast and luminance in color-to-grayscale mapping.
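
As a rough illustration of the perception-based baseline, the Python sketch below maps an sRGB pixel to CIELAB lightness L* and rescales it to an 8-bit gray level. It assumes 8-bit sRGB input and a D65 white point. The function names are illustrative, and the exact CIELAB conversion used in the experiment may differ, for example in gamma handling or quantization.

```python
def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def cielab_lightness(r: int, g: int, b: int) -> float:
    """Return CIELAB L* (0..100) for an 8-bit sRGB pixel, assuming a D65 white."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    # Relative luminance Y for sRGB primaries, normalized so white gives Y = 1.
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    # CIE lightness function, with its linear segment near black.
    eps = 216 / 24389
    kappa = 24389 / 27
    if y > eps:
        return 116 * y ** (1 / 3) - 16
    return kappa * y


def decolorize(pixels):
    """Map rows of (r, g, b) tuples to 8-bit gray levels via L*."""
    return [[round(cielab_lightness(*p) * 255 / 100) for p in row] for row in pixels]
```

L* depends only on luminance. Two colors with equal luminance but different hues therefore map to the same gray level. That loss of chromatic contrast is what the spatial contrast-based methods try to recover.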

This conference on computer image analysis in the study of art presents leading research on applying image analysis, computer vision, and pattern recognition to problems of interest to art historians, curators, and conservators. A number of recent questions and controversies have highlighted the value of rigorous image analysis in the study of art, particularly painting. Consider these examples:

- fractal image analysis to authenticate drip paintings possibly by Jackson Pollock;
- sophisticated perspective, shading, and form analysis to address claims that early Renaissance masters such as Jan van Eyck or Baroque masters such as Georges de la Tour traced optically projected images;
- automatic multi-scale analysis of brushstrokes to attribute the portraits within a painting by Perugino;
- multi-spectral, X-ray, and infrared scanning and image analysis of the Mona Lisa to reveal Leonardo's painting techniques.

The value of image analysis for these and other questions strongly suggests that current and future computer methods will play an ever larger role in the scholarship of the visual arts.

This conference brings together real-world practitioners and researchers in intelligent robots and computer vision to share recent applications and developments. Topics of interest include the integration of imaging sensors, supporting hardware, computers, and algorithms for intelligent robots, manufacturing inspection, characterization, and/or control. The decreasing cost of computational power and vision sensors has driven the rapid proliferation of machine vision technology across many industries, including aluminum, automotive, forest products, textiles, glass, steel, metal casting, aircraft, chemicals, food, fishing, agriculture, archaeological products, medical products, and artistic products. Other industries, such as semiconductor and electronics manufacturing, have used machine vision technology for several decades. Machine vision in support of handling robots is another main topic. In intelligent robotics, another approach is sensor fusion: combining data from multi-modal sensors (audio, location, image, and video, as well as other 3D capture devices) for signal processing, machine learning, and computer vision. Objects and their positions in space must be detected accurately, quickly, and robustly. The objects' surfaces, backgrounds, and illumination are uncontrolled, and in most cases the objects of interest are mixed in with many others. For both new and existing industrial users of machine vision, numerous innovative methods can improve productivity, quality, and compliance with product standards. Several broad problem areas have received significant attention in recent years. For example, some industries collect enormous amounts of image data from product monitoring systems, and new, efficient methods are required to extract insight and to perform process diagnostics from this historical record.
Regarding the physical scale of the measurements, microscopy techniques are nearing resolution limits in fields such as semiconductors, biology, and other nano-scale technologies. Techniques such as resolution enhancement, model-based methods, and statistical imaging may provide the means to extend these systems beyond current capabilities. Furthermore, obtaining real-time and robust measurements in-line or at-line in harsh industrial environments is a challenge for machine vision researchers, especially when the manufacturer cannot make significant changes to their facility or process.

Estimating pose from fiducial markers is a widely researched topic of practical importance in computer vision, robotics, and photogrammetry. In this paper, we aim to quantify the accuracy of pose estimation in real-world scenarios. Specifically, we investigate six factors that affect pose estimation accuracy:

- number of points;
- depth offset;
- planar offset;
- manufacturing error;
- detection error;
- constellation size.

We quantify their influence for four non-iterative pose estimation algorithms, based respectively on the direct linear transform (DLT), direct least squares (DLS), robust perspective-n-point (RPnP), and infinitesimal plane-based pose estimation (IPPE). Our empirical results can guide the selection of a well-performing pose estimation method. They can also help mitigate the factors that cause errors and degrade the rotational and translational accuracy of pose estimation.
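
To make the first of these algorithms concrete, here is a minimal NumPy sketch of DLT pose estimation. It assumes at least six non-coplanar marker points. It also assumes the image points have already been normalized by the inverse camera intrinsics K^-1. Planar constellations, which methods such as IPPE are designed for, would need a homography-based variant instead. `dlt_pose` is a hypothetical name, and this is not the implementation evaluated in the paper.

```python
import numpy as np


def dlt_pose(obj_pts: np.ndarray, img_pts: np.ndarray):
    """Estimate rotation R and translation t with the direct linear transform.

    obj_pts: (n, 3) marker points in the marker frame, n >= 6, not all coplanar.
    img_pts: (n, 2) matching image points in normalized coordinates (K^-1 applied).
    """
    n = obj_pts.shape[0]
    if n < 6:
        raise ValueError("DLT needs at least 6 correspondences")
    A = np.zeros((2 * n, 12))
    for i in range(n):
        Xh = np.append(obj_pts[i], 1.0)
        u, v = img_pts[i]
        # u = (p1 . Xh) / (p3 . Xh) and v = (p2 . Xh) / (p3 . Xh), rearranged
        # into two linear equations in the 12 entries of P = s [R | t].
        A[2 * i, 0:4] = Xh
        A[2 * i, 8:12] = -u * Xh
        A[2 * i + 1, 4:8] = Xh
        A[2 * i + 1, 8:12] = -v * Xh
    # Least-squares solution with ||P|| = 1: the right singular vector
    # belonging to the smallest singular value.
    P = np.linalg.svd(A)[2][-1].reshape(3, 4)
    # P is known only up to a scale s (possibly negative). Project the 3x3 block
    # onto the nearest orthogonal matrix and recover |s| from its singular values.
    U, S, Vt = np.linalg.svd(P[:, :3])
    R = U @ Vt
    s = S.mean()
    if np.linalg.det(R) < 0:
        # A negative scale flips the orthogonal factor to det = -1; undo it.
        R, s = -R, -s
    t = P[:, 3] / s
    return R, t
```

The second SVD replaces the unconstrained 3x3 block with the nearest rotation matrix. With noisy detections, the raw DLT block is generally not orthonormal, so this step matters in practice.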

Self-driving cars are gradually entering road traffic and are a central component of new forms of mobility. Major companies such as Tesla, Google, and Uber are working to continuously improve self-driving vehicles and their reliability. It is therefore important for trained professionals to understand the principles and requirements of autonomous driving. This paper describes a new concept for a Bachelor/Master-level university course that introduces automotive technology students to new mobility and self-driving cars. The practice-oriented course uses low-cost hardware (US $80) that nevertheless provides all the sensors and capabilities needed for a comprehensive practical introduction to self-driving automotive technology. The course has a modular structure of lectures and exercises:

- The first exercise covers building and modifying the car kit.
- The second exercise covers setting up the Raspberry Pi.
- Once the car kit and Raspberry Pi are ready, the third exercise steers the car remotely.
- Lectures and exercises on autonomous lane navigation follow, covering color spaces and masking, Canny edge detection, the Hough transform, steering, and stabilization.
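
The Hough transform step in the lane-navigation exercises can be sketched in a few lines of pure Python. This simplified accumulator is for illustration only. It assumes edge pixels have already been extracted, for example by color masking and Canny edge detection. `hough_lines` and its parameters are hypothetical. Libraries such as OpenCV provide optimized implementations (`cv2.HoughLines`, `cv2.HoughLinesP`).

```python
import math
from collections import Counter


def hough_lines(edge_points, n_theta=180, rho_step=1.0, top_k=2):
    """Vote in (theta, rho) space and return the top_k (theta_deg, rho) lines.

    edge_points: iterable of (x, y) pixel coordinates, e.g. the nonzero pixels
    of an edge map after color masking and edge detection.
    """
    votes = Counter()
    for x, y in edge_points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta
            # Normal form of a line: rho = x*cos(theta) + y*sin(theta).
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(t, round(rho / rho_step))] += 1
    # Bins with the most votes correspond to the strongest line candidates.
    return [(180.0 * t / n_theta, r * rho_step) for (t, r), _ in votes.most_common(top_k)]
```

Each edge pixel votes for every line through it. Pixels on a common lane marking accumulate votes in the same (theta, rho) bin, so the strongest bins identify lane-line candidates for the steering stage.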

In this work, we explore estimating vehicle fuel consumption from imagery captured by overhead fisheye-lens cameras deployed as traffic sensors. We use these estimates to simulate vision-based control of a traffic intersection, with the goal of improving fuel economy with minimal impact on mobility. We introduce the ORNL Overhead Vehicle Data set (OOVD), which consists of paired, labeled vehicle images from a ground-based camera and an overhead fisheye-lens traffic camera. The data set includes segmentation masks for vehicle detection, based on Gaussian mixture models. We demonstrate the data set's utility through three applications:

- estimating fuel consumption from segmentation bounding boxes;
- discriminating among vehicles with large bounding boxes;
- fine-grained classification of a limited number of vehicle makes and models using a set of pre-trained convolutional neural network models.

We compare these results with estimates based on a large open-source data set of web-scraped imagery. Finally, we demonstrate the approach with reinforcement learning in a traffic simulator, using the open-source Simulation of Urban Mobility (SUMO) package. Our results demonstrate the feasibility of controlling traffic lights for better fuel efficiency based solely on visual vehicle estimates from commercial fisheye-lens cameras.
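
One step of the first application, turning a segmentation mask into per-vehicle bounding boxes, can be sketched as a connected-component pass. The Python below assumes a binary foreground mask given as nested lists, such as one derived from a Gaussian mixture background model. `mask_to_boxes` is a hypothetical helper, and fisheye distortion is ignored. The abstract does not specify the mapping from box size to fuel consumption, so the sketch stops at the box features.

```python
from collections import deque


def mask_to_boxes(mask, min_area=1):
    """Return (x_min, y_min, x_max, y_max, pixel_count) per 4-connected blob.

    mask: list of rows of 0/1 values (hypothetical foreground mask).
    min_area: blobs with fewer foreground pixels are dropped as noise.
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill over one connected component.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            xs, ys = [], []
            while queue:
                x, y = queue.popleft()
                xs.append(x)
                ys.append(y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(xs) >= min_area:
                boxes.append((min(xs), min(ys), max(xs), max(ys), len(xs)))
    return boxes
```

Raising `min_area` filters out small spurious blobs before any downstream estimate is made.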

In this paper, we present a Cluster Aggregation Network (CAN) for face set recognition. The network takes as input a set of face images, either a face video or a cluster containing a varying number of face images. It produces a compact, fixed-dimensional feature representation of the set for recognition. The network consists of two modules: a face feature embedding module and a face feature aggregation module. The first module is a deep convolutional neural network (CNN) that maps each face image to a fixed-dimensional vector. The second module is also a CNN. It is trained to automatically assess the quality of each input face image and assign a corresponding weight to that image's feature vector. The single aggregated feature vector representing the input set is formed inside the convex hull of the individual face image features. Because the quality assessment is invariant to the order and the number of images in the set, the aggregation is invariant to these factors as well. Our CAN is trained with a standard classification loss and no additional supervision. We found that the network automatically favors high-quality face images while suppressing low-quality ones, such as blurred, occluded, and non-frontal faces. We trained our networks on the CASIA and YouTube Face datasets. Experiments on the IJB-C video face recognition benchmark show that our method outperforms current state-of-the-art feature aggregation methods as well as our challenging baseline aggregation method.
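
The aggregation step can be illustrated with a short sketch. The abstract does not specify how quality scores become weights. The Python below assumes a softmax, which yields positive weights summing to one, so the output is a convex combination of the input features. `aggregate` is a hypothetical name. The quality scores would come from the second CNN, which is not modeled here.

```python
import math


def aggregate(features, quality_scores):
    """Combine per-image feature vectors into one set-level vector.

    features: list of equal-length lists (one embedding per face image).
    quality_scores: one unnormalized quality score per image (assumed given).
    """
    if not features or len(features) != len(quality_scores):
        raise ValueError("need one quality score per feature vector")
    # Softmax (shifted by the max for numerical stability) turns scores into
    # positive weights that sum to 1, so the result lies in the convex hull.
    m = max(quality_scores)
    exps = [math.exp(s - m) for s in quality_scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(features[0])
    return [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
```

Permuting the images permutes features and scores together, which leaves the weighted sum unchanged up to floating-point rounding. The output also has the same dimension for any set size. Together these properties mirror the order and size invariance described above.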