Magnetic induction tomography (MIT) is an emerging imaging technology that holds significant promise for cerebral hemorrhage monitoring. The imaging method most commonly used in MIT is time-difference imaging. However, this approach relies on magnetic field signals recorded before the hemorrhage, which are often difficult to obtain. This study supplements single-frequency information with bioelectrical impedance information at multiple frequencies, and the signals collected at different frequencies are identified to obtain the magnetic field signal generated by a single layer of heterogeneous tissue. A Stacked Autoencoder (SAE) neural network is then used to reconstruct images of the multi-layer tissues of the head. Both numerical simulations and phantom experiments are carried out. The results indicate that the relative error of the multi-frequency SAE reconstruction is only 7.82%, outperforming traditional algorithms. Moreover, at a noise level of 40 dB, the MIT algorithm based on frequency identification and SAE is more robust to interference than traditional algorithms. This research explores a novel approach to the dynamic monitoring of cerebral hemorrhage and demonstrates the potential advantages of MIT in non-invasive monitoring.
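The two evaluation quantities mentioned above, relative reconstruction error and a 40 dB noise level, can be illustrated with a minimal sketch. The abstract does not define either quantity, so this assumes the relative error is the L2 norm of the difference divided by the L2 norm of the reference, and that "40 dB" is a signal-to-noise ratio for additive Gaussian noise. The function names and the toy pixel values are hypothetical and are not taken from the study.

```python
import math
import random


def relative_error(reconstructed, reference):
    """Relative L2 error ||x_hat - x|| / ||x|| between two flat pixel lists.

    Assumed definition; the abstract does not state which norm is used.
    """
    num = math.sqrt(sum((r - t) ** 2 for r, t in zip(reconstructed, reference)))
    den = math.sqrt(sum(t ** 2 for t in reference))
    return num / den


def add_noise(signal, snr_db, rng):
    """Add zero-mean Gaussian noise so that the expected SNR equals snr_db.

    Assumes "noise level" means signal-to-noise ratio in decibels.
    """
    signal_power = sum(s ** 2 for s in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in signal]


# Hypothetical 1-D "image": a region of raised conductivity in a flat background.
reference = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
reconstructed = [0.0, 0.1, 0.9, 1.0, 0.1, 0.0]

err = relative_error(reconstructed, reference)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
noisy = add_noise(reference, 40.0, rng)
```

In this toy setting, `err` is a fraction that would be multiplied by 100 to report a percentage such as the 7.82% quoted above, and `noisy` is the kind of perturbed input one would feed to a reconstruction algorithm to compare robustness.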

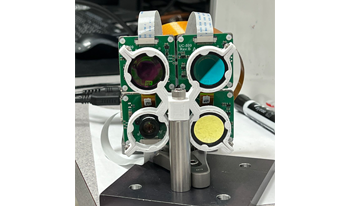

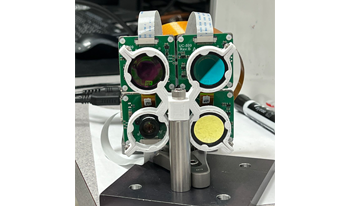

We demonstrate a physics-aware transformer for feature-based data fusion from cameras with diverse resolutions, color spaces, focal planes, focal lengths, and exposures. We also demonstrate a scalable solution for synthetic training data generation for the transformer using open-source computer graphics software. We demonstrate image synthesis on arrays with diverse spectral responses, instantaneous fields of view, and frame rates.

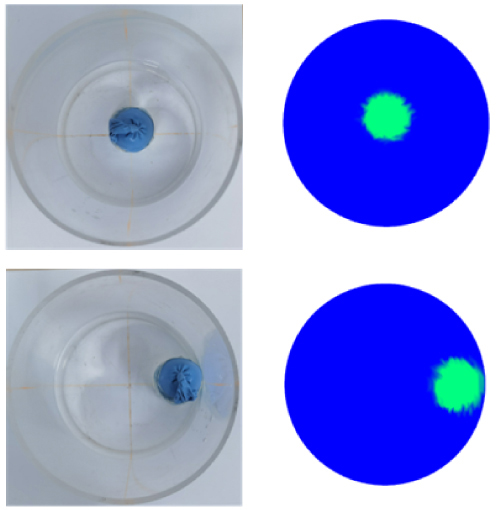

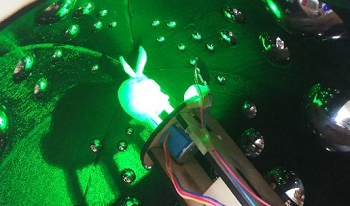

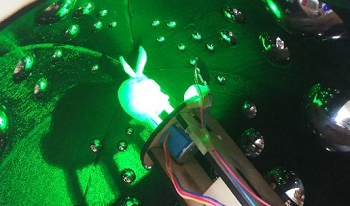

Light field cameras have been used for three-dimensional geometrical measurement and for refocusing captured photos. In this paper, we propose a method for acquiring light fields using a spherical mirror array. By employing a mirror array and two cameras, a virtual camera array that captures an object from all around it can be generated. Since a large number of virtual cameras can be constructed from two real cameras, this method yields an affordable, high-density camera array. Furthermore, the spherical mirrors enable the capture of larger objects than previous methods. We conducted simulations to capture the light field and synthesized arbitrary-viewpoint images of the object as observed from 360 degrees around it. The system’s ability to refocus assuming a large aperture is also confirmed. We have also built a prototype that approximates the proposed design and conducted a capture experiment to verify the system’s feasibility.

Translucency optically results from subsurface light transport and plays a considerable role in how objects and materials appear. Absorption and scattering coefficients parametrize the distance a photon travels inside the medium before it gets absorbed or scattered, respectively. Stimuli produced by a material for a distinct viewing condition are perceptually non-uniform w.r.t. these coefficients. In this work, we use multi-grid optimization to embed a non-perceptual absorption-scattering space into a perceptually more uniform space for translucency and lightness. In this process, we rely on A (alpha) as a perceptual translucency metric. Small Euclidean distances in the new space are roughly proportional to lightness and apparent translucency differences measured with A. This makes picking A more practical and predictable, and is a first step toward a perceptual translucency space.
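The relationship between the coefficients and photon travel distance can be written out explicitly. This is a standard result for a homogeneous medium rather than a formula taken from the paper, and the symbols $\sigma_a$ (absorption coefficient), $\sigma_s$ (scattering coefficient), and $d$ (path length) are notation assumed here. With exponential attenuation, the probability that a photon travels a distance $d$ without being absorbed is $e^{-\sigma_a d}$, which gives the mean distances before absorption and before scattering:

$$
\bar{d}_a = \int_0^\infty d \, \sigma_a e^{-\sigma_a d} \, \mathrm{d}d = \frac{1}{\sigma_a},
\qquad
\bar{d}_s = \int_0^\infty d \, \sigma_s e^{-\sigma_s d} \, \mathrm{d}d = \frac{1}{\sigma_s}.
$$

Doubling a coefficient therefore halves the corresponding mean free path. Equal steps in the coefficients change that path by unequal amounts, which gives some intuition for why a space spanned directly by these coefficients need not be perceptually uniform.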

The performance of a convolutional neural network (CNN) on an image texture detection task is investigated as a function of linear image processing and the number of training images. Performance is quantified by the area under the receiver operating characteristic (ROC) curve (AUC). The Ideal Observer (IO) maximizes AUC but depends on high-dimensional image likelihoods. In many cases, the CNN performance can approximate the IO performance. This work demonstrates counterexamples where a full-rank linear transform degrades the CNN performance below the IO in the limit of large quantities of training data and network layers. A subsequent linear transform changes the images’ correlation structure, improves the AUC, and again demonstrates the CNN dependence on linear processing. Compression strictly decreases or maintains the IO detection performance, while compression can increase the CNN performance, especially for small quantities of training data. Results indicate an optimal compression ratio for the CNN based on task difficulty, compression method, and number of training images. © 2020 Society for Imaging Science and Technology.
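As a minimal sketch of the figure of merit used above, the AUC of a detector can be estimated directly from its scores. The estimate is the fraction of (signal-present, signal-absent) image pairs that the detector ranks correctly, with ties counted as half; this is the Mann-Whitney form of the AUC. The function name `auc` and the example scores are hypothetical and do not come from the paper's data or its CNN.

```python
def auc(signal_scores, noise_scores):
    """Empirical AUC: probability that a signal-present score exceeds a
    signal-absent score, counting ties as one half (Mann-Whitney estimate)."""
    wins = 0.0
    for s in signal_scores:
        for n in noise_scores:
            if s > n:
                wins += 1.0
            elif s == n:
                wins += 0.5
    return wins / (len(signal_scores) * len(noise_scores))


# Hypothetical detector outputs for three texture-present and three
# texture-absent images.
present = [0.9, 0.8, 0.4]
absent = [0.7, 0.3, 0.2]

area = auc(present, absent)  # 8 of the 9 pairs are ranked correctly
```

An AUC of 0.5 corresponds to chance performance and 1.0 to perfect separation, so comparing a CNN against the Ideal Observer amounts to comparing two such numbers computed on the same task.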

We attempted to predict an individual’s preferences for movie posters using a machine-learning algorithm based on the posters’ graphic elements. We transformed perceptually essential graphic elements into features for machine-learning computation. Fifteen university students participated in a survey designed to assess their preferences for movie poster designs (Nposter = 619). Based on the movie posters’ feature information and the participants’ judgments, we built an individual model for each participant using an XGBoost classifier. Prediction accuracies of these individual models ranged from 44.70% to 71.70%, while the repeated human judgments ranged from 61.90% to 87.50%. We discussed the technical challenges in advancing the prediction algorithm and summarized our reflections on using machine-learning-driven algorithms in creative work.