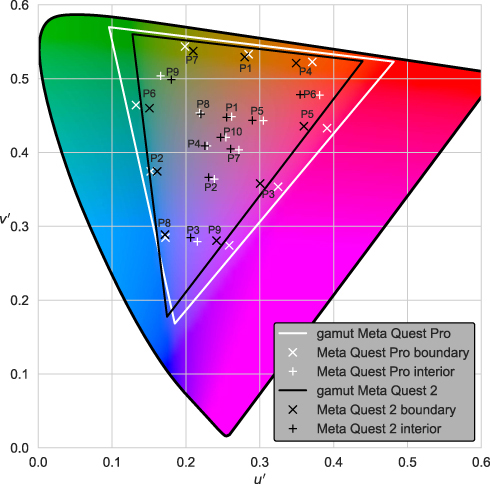

The utility and ubiquity of virtual reality make it an extremely popular tool in various areas of scientific research. Owing to its ability to present naturalistic scenes in a controlled manner, virtual reality may be an effective option for conducting color science experiments and studying different aspects of color perception. However, head-mounted displays have their limitations, and investigators should choose a display device that meets the colorimetric requirements of their color science experiments. This paper presents a structured method to characterize the colorimetric profile of a head-mounted display with the aid of color characterization models. By way of example, two commercially available head-mounted displays (Meta Quest 2 and Meta Quest Pro) are characterized using four models (Look-up Table, Polynomial Regression, Artificial Neural Network, and Gain Gamma Offset), and the appropriateness of each of these models is investigated.
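As an illustration of the simplest of the four models named above, the following is a minimal sketch of a Gain Gamma Offset forward model as it is commonly formulated in the display characterization literature: each channel is linearized as `(gain * d + offset) ** gamma`, and the linear channel values are mixed through a 3×3 matrix of primary tristimulus values. The function names, the per-channel parameters, and the primaries matrix (sRGB-like values) are illustrative assumptions, not measurements or code from the paper.

```python
def ggo_linearize(d, gain, offset, gamma):
    """Map a normalized digital value d in [0, 1] to a linear channel scalar."""
    base = gain * d + offset
    # Negative bases are clipped to zero, as is usual for this model.
    return base ** gamma if base > 0.0 else 0.0


def ggo_predict_xyz(rgb, params, primaries):
    """Predict CIE XYZ for normalized digital RGB.

    params    -- three (gain, offset, gamma) tuples, one per channel
    primaries -- 3x3 matrix whose columns are the XYZ of the R, G, B primaries
    """
    lin = [ggo_linearize(d, *p) for d, p in zip(rgb, params)]
    return tuple(
        sum(primaries[row][col] * lin[col] for col in range(3))
        for row in range(3)
    )


# Hypothetical parameters: in practice these would be fitted to measurements
# of the head-mounted display, one set per channel.
PARAMS = [(1.0, 0.0, 2.2)] * 3

# Placeholder primaries matrix (sRGB-like), normalized so that white has Y = 1.
PRIMARIES = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]

white_xyz = ggo_predict_xyz((1.0, 1.0, 1.0), PARAMS, PRIMARIES)
grey_xyz = ggo_predict_xyz((0.5, 0.5, 0.5), PARAMS, PRIMARIES)
```

A model of this kind is typically assessed by comparing its predicted XYZ values against measured values for a set of test colors; the other three models replace this parametric form with interpolation or fitted regressions.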

The London Imaging Meeting is a yearly topics-based conference organised by the Society for Imaging Science and Technology (IS&T), in collaboration with the Institute of Physics (IOP) and the Royal Photographic Society. This year's topic was "Imaging for Deep Learning". At the heart of our conference were five focal talks given by world-renowned experts in the field (who then also organised the related sessions). Focal speakers were Dr. Seyed Ali Amirshahi, NTNU, Norway (Image Quality); Prof. Jonas Unger, Linköping University, Sweden (Datasets for Deep Learning); Prof. Simone Bianco, Università degli Studi di Milano-Bicocca, Italy (Color Constancy); Dr. Valentina Donzella, University of Warwick, UK (Imaging Performance); and Dr. Ray Ptucha, Apple Inc., US (Characterization and Optimization).

We also had two superb keynote speakers. Thanks to Dr. Robin Jenkin, Nvidia, for his talk on "Camera Metrics for Autonomous Vision" and to Dr. Joyce Farrell, Stanford University, for her talk on "Soft Prototyping Camera Designs for Autonomous Driving". As an innovation this year, and to support LIM's remit to reach out to students in the field, we included an invited tutorial research lecture. Given by Prof. Stephen Westland, University of Leeds, the presentation titled "Using Imaging Data for Efficient Colour Design" looked at deep learning techniques in the field of design and demonstrated that simple applications of deep learning can deliver excellent results.

There were many strong contenders for the LIM Best Paper Award. Noteworthy honourable mentions include "Portrait Quality Assessment using Multi-scale CNN", N. Chahine and S. Belkarfa, DXOMARK; "HDR4CV: High dynamic range dataset with adversarial illumination for testing computer vision methods", P. Hanjil et al., University of Cambridge; "Natural Scene Derived Camera Edge Spatial Frequency Response for Autonomous Vision Systems", O. van Zwanenberg et al., University of Westminster; and "Towards a Generic Neural Network Architecture for Approximating Tone Mapping Algorithms", J. McVey and G. Finlayson, University of East Anglia. But, by a unanimous vote, this year's Best Paper was awarded to "Impact of the Windshield's Optical Aberrations on Visual Range Camera-based Classification Tasks Performed by CNNs", C. Krebs, P. Müller, and A. Braun, Hochschule Düsseldorf (University of Applied Sciences Düsseldorf), Germany.

We thank everyone who helped make LIM a success, including the IS&T office; the LIM presenters, reviewers, focal speakers, and keynote speakers; and the audience, whose participation made the event engaging and vibrant. This year, the conference was run by the IOP, and we are extremely grateful for their help in hosting the event. Special thanks also go to the Engineering and Physical Sciences Research Council (EPSRC), which provided funding through grant EP/S028730/1. Finally, we are pleased to announce that next year's LIM conference will be in the area of "Displays"; the conference chair is Dr. Rafal Mantiuk, University of Cambridge.

Prof. Graham Finlayson, LIM series chair, and Prof. Sophie Triantaphillidou, LIM2021 conference chair

ACES is the Academy Color Encoding System established by the Academy of Motion Picture Arts and Sciences (A.M.P.A.S.). Since its introduction (version 1.0 in December 2014), it has been widely used in the film industry. The interaction of four modules makes the system flexible and leaves room for custom developments and modifications. Nevertheless, improvements are possible for various practical applications. This paper analyzes some of the problems frequently encountered in practice in order to identify possible solutions. These include improvements in importing still images, white point conversion problems, and test lighting. The results are intended to be applicable in practice and, above all, to take into account workflows based on commercially available software. The goal of this paper is to record the spectral distribution of a GretagMacbeth ColorChecker using a spectrometer and to photograph it with different cameras, such as the RED Scarlet M-X, Blackmagic URSA Mini Pro, and Canon EOS 5D Mark III, under the same lighting conditions. The recorded imagery is then converted to the ACES2065-1 color space. The positions of the patches of the ColorChecker in CIE Yxy color space are then compared to the positions of the patches captured by the spectral device. Using several built-in converters, the aim is to match the positions derived from the spectral data as closely as possible.
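The comparison step described above can be sketched as follows: a linear ACES2065-1 (AP0) patch value is converted to CIE XYZ with the published AP0-to-XYZ matrix, reduced to xy chromaticity, and compared with a reference chromaticity by Euclidean distance in the xy plane. This is a minimal sketch under stated assumptions, not the workflow used in the paper: the function names and the example patch values are hypothetical, and no chromatic adaptation between the ACES white point and the measurement illuminant is applied.

```python
import math

# AP0 (ACES2065-1) linear RGB to CIE XYZ, relative to the ACES white point.
AP0_TO_XYZ = (
    (0.9525523959, 0.0000000000, 0.0000936786),
    (0.3439664498, 0.7281660966, -0.0721325464),
    (0.0000000000, 0.0000000000, 1.0088251844),
)


def aces2065_to_xyz(rgb):
    """Convert a linear ACES2065-1 RGB triplet to CIE XYZ."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in AP0_TO_XYZ)


def xyz_to_xy(xyz):
    """Project CIE XYZ onto the xy chromaticity plane."""
    total = sum(xyz)
    if total == 0.0:
        raise ValueError("chromaticity is undefined for X + Y + Z = 0")
    return xyz[0] / total, xyz[1] / total


def chromaticity_distance(xy_a, xy_b):
    """Euclidean distance between two points in the xy plane."""
    return math.hypot(xy_a[0] - xy_b[0], xy_a[1] - xy_b[1])


# Hypothetical values for illustration only: a camera-derived patch in
# ACES2065-1 and a spectrally measured reference chromaticity.
camera_patch_aces = (0.18, 0.18, 0.18)
reference_xy = (0.3217, 0.3377)

camera_xy = xyz_to_xy(aces2065_to_xyz(camera_patch_aces))
error = chromaticity_distance(camera_xy, reference_xy)
```

Distance in the xy plane is not perceptually uniform, so it serves here only as a simple measure of how far a converted patch lies from its spectral reference.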