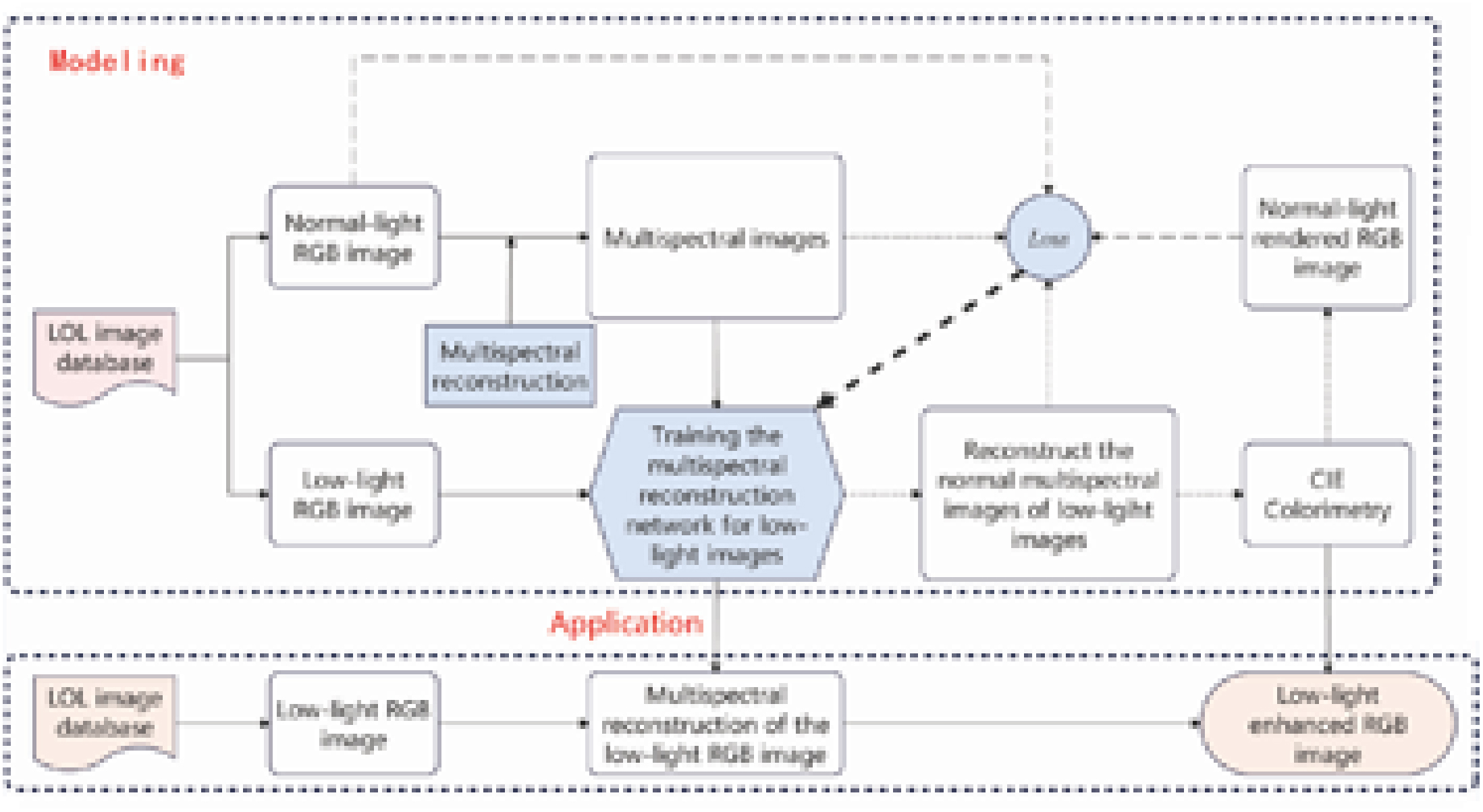

Low-light images often fail to accurately capture color and texture, limiting their practical applications in imaging technology. Low-light image enhancement can effectively restore the color and texture information contained in such images. However, current methods compute the normal-light image directly from the low-light image, ignoring the basic principles of imaging, which limits the enhancement they achieve. The Retinex model splits an image into illumination and reflectance components and uses the decomposed components to achieve end-to-end enhancement of low-light images. Inspired by Retinex theory, this study proposes a low-light image enhancement method based on multispectral reconstruction. The method first uses a multispectral reconstruction algorithm to reconstruct a metameric multispectral image from a normal-light RGB image. It then uses a deep learning network to learn the end-to-end mapping from a low-light RGB image to a normal-light multispectral image. In this way, any low-light image can be reconstructed into a normal-light multispectral image. Finally, the corresponding normal-light RGB image is calculated according to colorimetric theory. To test the proposed method, LOw-Light (LOL), a popular dataset for low-light image enhancement, is used to compare the proposed method with existing methods. During the test, a multispectral reconstruction method based on reversing the image signal processing of RGB imaging is used to reconstruct the corresponding metameric multispectral image of each normal-light RGB image in LOL. The deep learning architecture proposed by Zhang et al., extended with a convolutional block attention module, is used to establish the mapping between the low-light RGB images and the corresponding reconstructed multispectral images.
The proposed method is compared with existing methods such as a self-supervised method, RetinexNet, RRM, KinD, RUAS, and URetinex-Net. On the LOL dataset, with an illuminant chosen for rendering, the results show that the proposed low-light image enhancement method outperforms the existing methods.
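The final rendering step, from a reconstructed multispectral pixel to an RGB value under a chosen illuminant, can be illustrated with a minimal sketch. It assumes the standard colorimetric summation of reflectance, illuminant power, and color-matching functions, followed by the standard XYZ-to-sRGB conversion. The function names `spectrum_to_xyz` and `xyz_to_srgb` are hypothetical, the spectral sampling is left to the caller, and the abstract does not state which illuminant, observer, or output color space the authors used.

```python
# Sketch only: renders one multispectral pixel to sRGB under a chosen illuminant.
# Assumption: all spectral lists share the same wavelength sampling.

def spectrum_to_xyz(reflectance, illuminant, cmf_x, cmf_y, cmf_z):
    """Sum reflectance * illuminant * color-matching function over wavelength.

    The result is normalized so that a perfect reflector has Y = 1.
    """
    k = 1.0 / sum(e * y for e, y in zip(illuminant, cmf_y))
    x = k * sum(r * e * c for r, e, c in zip(reflectance, illuminant, cmf_x))
    y = k * sum(r * e * c for r, e, c in zip(reflectance, illuminant, cmf_y))
    z = k * sum(r * e * c for r, e, c in zip(reflectance, illuminant, cmf_z))
    return (x, y, z)


# Standard matrix from D65-referenced XYZ to linear sRGB.
# Assumption: if the rendering illuminant is not D65, a chromatic adaptation
# step would be needed before applying this matrix (not shown here).
XYZ_TO_LINEAR_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def _encode_srgb(c):
    """Apply the sRGB transfer function to one linear channel in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def xyz_to_srgb(xyz):
    """Convert XYZ (Y in [0, 1]) to gamma-encoded sRGB, clipping to [0, 1]."""
    linear = [sum(m * v for m, v in zip(row, xyz)) for row in XYZ_TO_LINEAR_SRGB]
    return tuple(_encode_srgb(min(max(c, 0.0), 1.0)) for c in linear)
```

In practice the same summation would be applied to every pixel of the reconstructed multispectral image, typically as a single matrix product over the spectral axis.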

The accurate digitization of film using high-resolution digital cameras, especially historic positive and negative film, presents a difficult challenge for cultural-heritage imaging. Approaches used for reflective materials (e.g., profiling using color targets) are difficult to apply to transparent materials due to a paucity of film-specific targets, the difficulty of measuring small patches, and the inadequacy of these targets for historical films and negatives. Research was carried out to design, construct, and verify a new transmission target. Simulation was used to select 80 filters from a set of 476 absorption filters, optimizing for criteria that included colorimetric performance for the 80 filters and four validation spectral datasets, color gamut, and spectral diversity. A prototype target was constructed, measured, and imaged. All criteria were met. Future research will refine the target and validate its performance using independent targets and color-challenging photographs.
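Colorimetric performance of a target is commonly summarized as a color difference between measured and camera-estimated patch colors. The sketch below assumes the CIE 1976 color difference (Euclidean distance in CIELAB) and patch colors already expressed as L\*a\*b\* triples. The abstract does not say which color-difference formula was used, and the function names `delta_e_76` and `mean_delta_e` are hypothetical.

```python
import math


def delta_e_76(lab_ref, lab_test):
    """CIE 1976 color difference: Euclidean distance between two CIELAB colors."""
    return math.dist(lab_ref, lab_test)


def mean_delta_e(reference_patches, estimated_patches):
    """Average color difference over corresponding target patches."""
    if len(reference_patches) != len(estimated_patches):
        raise ValueError("patch lists must have the same length")
    diffs = [delta_e_76(r, e) for r, e in zip(reference_patches, estimated_patches)]
    return sum(diffs) / len(diffs)
```

A lower mean difference on both the target patches and independent validation spectra would indicate that a profile built from the target generalizes beyond the patches it was fitted to.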

Color-capture systems use color-correction processing operations to deliver expected results in the saved image files. For cultural heritage imaging projects, establishing and monitoring such operations is important for meeting imaging requirements and guidelines. To reduce unwanted variations, it is common to evaluate imaging performance and adjust hardware and software settings. In most cases this includes the use of ICC color-profiling software and supporting measurements. While expert advice on the subject can be deftly persuasive, discussions of color goodness for capture are clouded by many imaging variables. This makes claims for a single color-profiling approach or engine moot in the context of a greater workflow environment. We suggest looking outward and considering alternative profiling practices and evaluation methods that could improve the accuracy and consistency of color image capture.