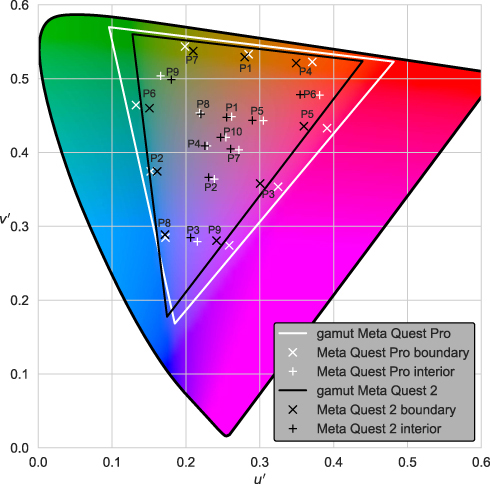

The utility and ubiquity of virtual reality have made it a widely used tool across many scientific research areas. Because it can present naturalistic scenes in a controlled manner, virtual reality may be an effective platform for conducting color science experiments and studying different aspects of color perception. However, head-mounted displays (HMDs) have limitations, and investigators should choose a display device that meets the colorimetric requirements of their experiments. This paper presents a structured method for characterizing the colorimetric profile of an HMD with the aid of color characterization models. As an example, two commercially available HMDs (Meta Quest 2 and Meta Quest Pro) are characterized using four models (Look-up Table, Polynomial Regression, Artificial Neural Network, and Gain-Gamma-Offset), and the suitability of each model is investigated.
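Of the four models, Gain-Gamma-Offset is the most compact, and its forward direction can be sketched in a few lines. The sketch below assumes one common formulation: each channel's normalized input `d` is mapped to `(gain * d + offset) ** gamma` (clipped at zero), the channels are assumed independent and additive, and the result is combined through a 3×3 primary matrix plus a black-level offset. The function name `ggo_forward` and its parameters are hypothetical and are not taken from this paper; the paper's exact parameterization and fitting procedure may differ.

```python
import numpy as np


def ggo_forward(rgb, gain, offset, gamma, M, xyz_black):
    """Predict CIE XYZ from normalized RGB inputs with a gain-gamma-offset model.

    rgb:       (N, 3) or (3,) array of normalized digital inputs in [0, 1]
    gain, offset, gamma: per-channel parameters, each of length 3
    M:         3x3 matrix whose columns are the XYZ of the full-intensity
               R, G, B primaries with the black level subtracted
    xyz_black: XYZ of the display black level (length 3)
    """
    rgb = np.atleast_2d(np.asarray(rgb, dtype=float))
    # Per-channel nonlinearity; negative bases are clipped to zero before the power.
    base = np.clip(np.asarray(gain) * rgb + np.asarray(offset), 0.0, None)
    linear = base ** np.asarray(gamma)
    # Assumed additive mixing of the primaries, plus the black-level offset.
    return linear @ np.asarray(M).T + np.asarray(xyz_black)
```

In a real characterization, `gain`, `offset`, and `gamma` would be fitted per channel from measured single-channel ramps and `M` from measured primaries; that fitting step is omitted here.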

In this study, third-order polynomial regression (PR) and deep neural networks (DNNs) were used to perform color characterization from CMYK to CIELAB, based on a dataset of 2016 color samples produced with a Stratasys J750 3D color printer. Five output variables (CIE XYZ, the logarithm of CIE XYZ, CIELAB, spectral reflectance, and the principal components of the spectra) were compared in terms of printer color characterization performance. Ten-fold cross-validation was used to evaluate the accuracy of the models developed with each approach, and CIELAB color differences were calculated under the D65 illuminant. The effect of training data size on predictive accuracy was also investigated. The results showed that the DNN method produced much smaller color differences than the PR method, but its accuracy was highly dependent on the amount of training data. In addition, using the logarithm of CIE XYZ as the output gave higher accuracy than using CIE XYZ directly.
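The PR side of this workflow (third-order polynomial features of CMYK, an XYZ or log-XYZ target, conversion to CIELAB under D65, and 10-fold cross-validation) can be sketched as follows. This is a minimal sketch under stated assumptions: ordinary least squares for fitting, the CIE 1976 color-difference formula (Euclidean distance in CIELAB) because the abstract does not name one, a D65 white point with Y normalized to 100, and hypothetical function names. The DNN, spectral, and principal-component variants are not covered, and the study's own implementation may differ.

```python
import itertools
import numpy as np

# Assumed D65 reference white (CIE 1931 2-degree observer), Y normalized to 100.
D65_WHITE = np.array([95.047, 100.0, 108.883])


def poly3_features(cmyk):
    """All monomials of the CMYK inputs up to total degree 3 (35 terms for 4 inputs)."""
    cmyk = np.atleast_2d(np.asarray(cmyk, dtype=float))
    n, d = cmyk.shape
    cols = [np.ones(n)]  # constant term
    for degree in (1, 2, 3):
        for combo in itertools.combinations_with_replacement(range(d), degree):
            cols.append(np.prod(cmyk[:, list(combo)], axis=1))
    return np.column_stack(cols)


def fit_poly3(cmyk, target):
    """Least-squares coefficients mapping polynomial features to the target columns."""
    coef, *_ = np.linalg.lstsq(poly3_features(cmyk), target, rcond=None)
    return coef


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert CIE XYZ to CIELAB relative to the given white point."""
    t = np.asarray(xyz, dtype=float) / white
    delta = 6 / 29
    f = np.where(t > delta**3, np.cbrt(t), t / (3 * delta**2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def delta_e76(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in CIELAB."""
    return np.linalg.norm(lab1 - lab2, axis=-1)


def kfold_mean_delta_e(cmyk, xyz, k=10, log_output=False, seed=0):
    """Mean color difference of the PR model over k-fold cross-validation.

    If log_output is True, the model is fitted to log(XYZ) and predictions are
    exponentiated back to XYZ; this requires strictly positive XYZ values.
    """
    cmyk = np.asarray(cmyk, dtype=float)
    xyz = np.asarray(xyz, dtype=float)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(cmyk)), k)
    errors = []
    for i, test in enumerate(folds):
        train = np.concatenate([fold for j, fold in enumerate(folds) if j != i])
        target = np.log(xyz[train]) if log_output else xyz[train]
        pred = poly3_features(cmyk[test]) @ fit_poly3(cmyk[train], target)
        if log_output:
            pred = np.exp(pred)  # back from log(XYZ) to XYZ
        errors.append(delta_e76(xyz_to_lab(pred), xyz_to_lab(xyz[test])))
    return float(np.concatenate(errors).mean())
```

With measured data, `cmyk` would hold the printed CMYK values scaled to [0, 1] and `xyz` their measured XYZ; calling the function with `log_output=True` and `log_output=False` mirrors the XYZ versus log-XYZ comparison described above.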