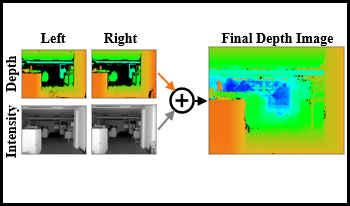

Solid-state lidar cameras produce 3D images, useful in applications such as robotics and self-driving vehicles. However, range is limited by the lidar laser power, and features such as perpendicular surfaces and dark objects pose difficulties. We propose the use of intensity images, inherent in lidar camera data from the total laser and ambient light collected in each pixel, to extract additional depth information and boost ranging performance. Using a pair of off-the-shelf lidar cameras and a conventional stereo depth algorithm to process the intensity images, we demonstrate a 2× increase in the native lidar maximum depth range in an indoor environment and an almost 10× increase outdoors. Depth information is also extracted from features in the environment such as dark objects, floors, and ceilings, which are otherwise not detected by the lidar sensor. While the specific technique presented is useful in applications involving multiple lidar cameras, the principle of extracting depth data from lidar camera intensity images could also be extended to standalone lidar cameras using monocular depth techniques.
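As a rough illustration of the stereo principle mentioned above, the sketch below converts a disparity between two rectified intensity images into depth using the standard pinhole relation Z = f · B / d, then uses that value only where the lidar returned no depth. This is not the authors' pipeline: the function names, the per-pixel fallback rule, and all numeric values (focal length, baseline, disparities) are hypothetical and chosen purely for illustration.

```python
from typing import List, Optional


def disparity_to_depth(disparity_px: float,
                       focal_length_px: float,
                       baseline_m: float) -> Optional[float]:
    """Standard stereo triangulation for rectified cameras: Z = f * B / d.

    Returns None when the disparity is not positive (no valid match).
    """
    if disparity_px <= 0:
        return None
    return focal_length_px * baseline_m / disparity_px


def fill_missing_depth(lidar_depth_m: List[Optional[float]],
                       stereo_disparity_px: List[float],
                       focal_length_px: float,
                       baseline_m: float) -> List[Optional[float]]:
    """Hypothetical fallback rule (an assumption, not from the source):
    keep the native lidar depth where it exists, otherwise use the depth
    triangulated from the intensity-image disparity."""
    fused = []
    for z_lidar, disparity in zip(lidar_depth_m, stereo_disparity_px):
        if z_lidar is not None:
            fused.append(z_lidar)
        else:
            fused.append(
                disparity_to_depth(disparity, focal_length_px, baseline_m)
            )
    return fused


if __name__ == "__main__":
    # Illustrative values only: three pixels, the last two beyond lidar range.
    lidar = [2.0, None, None]
    disparities = [25.0, 10.0, 0.0]
    print(fill_missing_depth(lidar, disparities,
                             focal_length_px=500.0, baseline_m=0.1))
```

Because depth is inversely proportional to disparity, distant points correspond to small disparities, so the achievable range in practice depends on the baseline between the two cameras and on how finely disparity can be resolved in the intensity images.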

Color imaging has historically been treated as a phenomenon sufficiently described by three independent parameters. Recent advances in computational resources and in the understanding of the human aspects of color are leading to new approaches that extend the purely metrological view of color towards a perceptual approach describing the appearance of objects, documents, and displays. Part of this perceptual view is the incorporation of spatial aspects, adaptive color processing based on image content, and the automation of color tasks, to name a few. This dynamic nature applies to all output modalities, including hardcopy devices, but to an even larger extent to soft-copy displays, which offer even more options for dynamic processing. Spatially adaptive gamut and tone mapping, dynamic contrast, and color management continue to support the unprecedented development of display hardware, covering everything from mobile displays to standard monitors, and all the way to large-size screens and emerging technologies. The scope of inquiry is also broadened by the desire to match not only color, but the complete appearance perceived by the user. This conference provides an opportunity to present, to interact, and to learn about the most recent developments in color imaging and material appearance research, technologies, and applications. The conference focuses on basic color research and testing, color image input, dynamic color image output and rendering, and color image automation, emphasizing color in context and color in images, as well as the reproduction of images across local and remote devices. The conference also covers software, media, and systems related to color and material appearance. Special attention is given to applications and requirements created by and for multidisciplinary fields involving color and/or vision.

Color imaging has historically been treated as a phenomenon sufficiently described by three independent parameters. Recent advances in computational resources and in the understanding of the human aspects of color are leading to new approaches that extend the purely metrological view of color towards a perceptual approach in documents and displays. Part of this perceptual view is the incorporation of spatial aspects, adaptive color processing based on image content, and the automation of color tasks, to name a few. This dynamic nature applies to all output modalities, including hardcopy devices, but to an even larger extent to soft-copy displays, which offer even more options for dynamic processing. Spatially adaptive gamut and tone mapping, dynamic contrast, and color management continue to support the unprecedented development of display hardware, covering everything from mobile displays to standard monitors, and all the way to large-size screens and emerging technologies. This conference provides an opportunity to present, to interact, and to learn about the most recent developments in color imaging research, technologies, and applications. The conference focuses on basic color research and testing, color image input, dynamic color image output and rendering, and color image automation, emphasizing color in context and color in images, as well as the reproduction of images across local and remote devices. The conference also covers software, media, and systems related to color. Special attention is given to applications and requirements created by and for multidisciplinary fields involving color and/or vision.