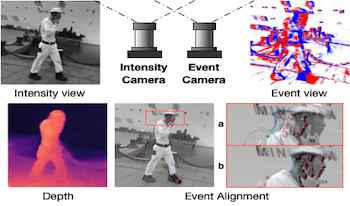

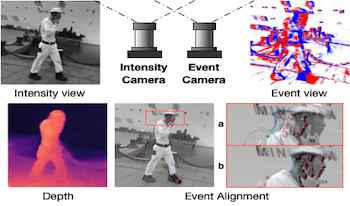

Event cameras are novel bio-inspired vision sensors that output pixel-level intensity changes with microsecond accuracy, high dynamic range, and low power consumption. Despite these advantages, event cameras cannot be directly applied to computational imaging tasks because they cannot capture high-quality intensity and events simultaneously. This paper aims to connect a standalone event camera and a modern intensity camera so that applications can take advantage of both sensors. We establish this connection through a multi-modal stereo matching task. We first convert events to a reconstructed image and extend existing stereo networks to this multi-modal setting. We propose a self-supervised method to train the multi-modal stereo network without ground-truth disparity data. A structure loss calculated on image gradients enables self-supervised learning on such multi-modal data. Exploiting the internal stereo constraint between views with different modalities, we introduce general stereo loss functions, including a disparity cross-consistency loss and an internal disparity loss, which improve performance and robustness compared to existing approaches. Our experiments demonstrate the effectiveness of the proposed method, especially the proposed general stereo loss functions, on both synthetic and real datasets. Finally, we shed light on employing the aligned events and intensity images in downstream tasks, e.g., video interpolation.
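The abstract does not give the form of the structure loss, so the following is only a minimal sketch of the general idea: comparing two images through their normalized gradient maps rather than their raw intensities, which makes the comparison insensitive to the brightness offset and gain that differ between modalities. The function names, the forward-difference gradient, and the peak normalization are all assumptions made for illustration.

```python
def gradient_magnitude(img):
    """L1 gradient magnitude using forward differences (edges replicated)."""
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][x]
            gy = img[min(y + 1, h - 1)][x] - img[y][x]
            grad[y][x] = abs(gx) + abs(gy)
    return grad


def normalize(grad):
    """Scale a gradient map by its peak so that image gain cancels out."""
    peak = max(max(row) for row in grad)
    if peak == 0:
        return grad
    return [[v / peak for v in row] for row in grad]


def structure_loss(img_a, img_b):
    """Mean absolute difference between normalized gradient maps.

    Hypothetical stand-in for a gradient-based structure loss; the loss
    used in the paper may differ.
    """
    ga = normalize(gradient_magnitude(img_a))
    gb = normalize(gradient_magnitude(img_b))
    h, w = len(ga), len(ga[0])
    total = sum(abs(ga[y][x] - gb[y][x]) for y in range(h) for x in range(w))
    return total / (h * w)


# Same vertical edge, different offset and gain: the loss vanishes.
frame = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1]]
reconstruction = [[10 + 2 * v for v in row] for row in frame]
print(structure_loss(frame, reconstruction))
```

In a stereo setting, one of the two inputs would be the view warped by the predicted disparity, so that a low loss indicates a disparity map that aligns the structures of the two modalities.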

Light field cameras have been used for three-dimensional geometric measurement and for refocusing captured photographs. In this paper, we propose a light field acquisition method that uses a spherical mirror array. By employing a mirror array and two cameras, a virtual camera array that captures an object from all around it can be generated. Since a large number of virtual cameras can be constructed from two real cameras, an affordable high-density camera array can be achieved with this method. Furthermore, the spherical mirrors enable the capture of larger objects than previous methods allow. We conducted simulations to capture the light field and synthesized arbitrary-viewpoint images of the object, observed from 360 degrees around it. The ability of this system to refocus under a large assumed aperture is also confirmed. We have also built a prototype that approximates the proposed design and conducted a capture experiment to verify the system’s feasibility.
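Refocusing from a camera array is commonly done by shift-and-add: each view is shifted in proportion to its camera offset and the views are averaged, so that points at the chosen depth align and everything else blurs. The sketch below illustrates that generic technique only; it assumes rectified views from cameras on a horizontal line and is not the mirror-array pipeline of the paper. The names `refocus`, `offsets`, and `disparity` are hypothetical.

```python
def shift_row(row, shift):
    """Resample a row at x + shift, clamping at the image border."""
    w = len(row)
    return [row[min(max(x + shift, 0), w - 1)] for x in range(w)]


def refocus(views, offsets, disparity):
    """Shift-and-add refocusing over a horizontal camera array.

    views     : list of 2D images (lists of rows), one per camera
    offsets   : horizontal camera positions, in baseline units
    disparity : pixel shift per unit offset for the depth to bring into focus
    """
    h, w = len(views[0]), len(views[0][0])
    out = [[0.0] * w for _ in range(h)]
    for view, offset in zip(views, offsets):
        shift = round(offset * disparity)
        for y in range(h):
            shifted = shift_row(view[y], shift)
            for x in range(w):
                out[y][x] += shifted[x] / len(views)
    return out


def point_view(x_pos, width=9):
    """Single-row image containing one bright point."""
    return [[1.0 if x == x_pos else 0.0 for x in range(width)]]


# A point seen at x = 2, 4, 6 by cameras at offsets -1, 0, 1 (2 px per unit).
views = [point_view(2), point_view(4), point_view(6)]
focused = refocus(views, [-1, 0, 1], disparity=2)
defocused = refocus(views, [-1, 0, 1], disparity=0)
print(focused[0][4], defocused[0][4])
```

Using more views spread over a wider range of offsets corresponds to a larger synthetic aperture and therefore a shallower depth of field.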

A database of realizable filters is created and searched to obtain the best filter that, when placed in front of an existing camera, improves the colorimetric capabilities of the system. The image data captured with the external filter is combined with image data captured without the filter to provide a six-band system. The colorimetric accuracy of the system is quantified using simulations that include a realistic signal-dependent noise model. Using a training data set, we selected the optimal filter according to each of four criteria: Vora Value, Figure of Merit, training average ΔE, and training maximum ΔE. Each selected filter was then evaluated on testing data. The filters chosen using the training ΔE criteria consistently outperformed those chosen using the theoretical criteria.
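The two training ΔE criteria can be illustrated with a short sketch. The abstract does not state which ΔE formula was used, so the example assumes the CIE76 definition (Euclidean distance in CIELAB); the candidate names and Lab values are invented purely for illustration.

```python
import math


def delta_e_ab(lab1, lab2):
    """CIE76 color difference: Euclidean distance in CIELAB (an assumption)."""
    return math.dist(lab1, lab2)


def score_filter(estimated, reference):
    """Return (average ΔE, maximum ΔE) over a set of training patches."""
    errors = [delta_e_ab(e, r) for e, r in zip(estimated, reference)]
    return sum(errors) / len(errors), max(errors)


def select_filter(candidates, reference, criterion="mean"):
    """Pick the candidate filter with the lowest average or maximum ΔE."""
    index = 0 if criterion == "mean" else 1
    return min(
        candidates,
        key=lambda name: score_filter(candidates[name], reference)[index],
    )


# Hypothetical Lab estimates produced by two candidate filters.
reference = [(50, 0, 0), (60, 10, 10)]
candidates = {
    "filter_A": [(50, 0, 0), (60, 13, 14)],  # errors 0 and 5
    "filter_B": [(50, 3, 0), (60, 10, 13)],  # errors 3 and 3
}
print(select_filter(candidates, reference, "mean"))
print(select_filter(candidates, reference, "max"))
```

The example is constructed so that the two criteria disagree: one filter has the lower average error and the other the lower worst-case error, which is why the criteria are evaluated separately.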

The performance of autonomous agents in both commercial and consumer applications increases with their situational awareness. Tasks such as obstacle avoidance, agent-to-agent interaction, and path planning depend directly on the ability to convert sensor readings into scene understanding. Central to this is the ability to detect and recognize objects. Many object detection methodologies operate on a single modality such as vision or LiDAR. Camera-based object detection models benefit from an abundance of feature-rich information for classifying different types of objects. LiDAR-based object detection models use sparse point clouds, where each point contains the accurate 3D position of an object surface. Camera-based methods lack accurate object-to-lens distance measurements, while LiDAR-based methods lack dense feature-rich details. By utilizing information from both camera and LiDAR sensors, advanced object detection and identification is possible. In this work, we introduce a deep learning framework for fusing these modalities and producing a robust real-time 3D bounding box object detection network. We present qualitative and quantitative analysis of the proposed fusion model on the popular KITTI dataset.
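A common first step when combining the two modalities is to project LiDAR points into the camera image so that each 3D point can be paired with pixel features. The sketch below shows that generic pinhole projection only; it is not the fusion network of the paper. It assumes the points have already been transformed into the camera frame (x right, y down, z forward), and the intrinsic values are made up for illustration.

```python
def project_points(points, fx, fy, cx, cy, width, height):
    """Project camera-frame 3D points to pixels with a pinhole model.

    Returns (u, v, depth) for every point that lies in front of the
    camera and lands inside the image.
    """
    hits = []
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera, cannot be seen
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0 <= u < width and 0 <= v < height:
            hits.append((int(u), int(v), z))
    return hits


# Hypothetical intrinsics and a few points, in metres.
points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, -5.0), (100.0, 0.0, 10.0)]
print(project_points(points, fx=100, fy=100, cx=50, cy=40, width=100, height=80))
```

With real data, the LiDAR-to-camera extrinsic calibration would have to be applied before this step; the depth returned alongside each pixel is what supplies the distance information that the camera alone lacks.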

Nonlinear complementary metal-oxide semiconductor (CMOS) image sensors (CISs), such as logarithmic (log) and linear–logarithmic (linlog) sensors, achieve high/wide dynamic ranges in single exposures at video frame rates. As with linear CISs, fixed pattern noise (FPN) correction and salt-and-pepper noise (SPN) filtering are required to achieve high image quality. This paper presents a method to generate digital integrated circuits, suitable for any monotonic nonlinear CIS, to correct FPN in hard real time. It also presents a method to generate digital integrated circuits, suitable for any monochromatic nonlinear CIS, to filter SPN in hard real time. The methods are validated by implementing and testing generated circuits using field-programmable gate array (FPGA) tools from both Xilinx and Altera. Generated circuits are shown to be efficient, in terms of logic elements, memory bits, and power consumption. Scalability of the methods to full high-definition (FHD) video processing is also demonstrated. In particular, FPN correction and SPN filtering of over 140 megapixels per second are feasible, in hard real time, irrespective of the degree of nonlinearity. © 2018 Society for Imaging Science and Technology.
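As a software illustration of what SPN filtering does, the sketch below applies a plain 3×3 median filter to a monochrome frame. The median filter is a standard textbook choice for salt-and-pepper noise and is used here as an assumption; the abstract does not describe the filter implemented by the generated circuits, and a hardware design would stream pixels through line buffers rather than index a stored frame.

```python
from statistics import median


def filter_spn(img):
    """Replace each interior pixel with the median of its 3x3 neighborhood.

    Border pixels are copied unchanged to keep the sketch short.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [
                img[j][i]
                for j in (y - 1, y, y + 1)
                for i in (x - 1, x, x + 1)
            ]
            out[y][x] = median(window)
    return out


# A flat patch with one stuck-high ("salt") pixel in the middle.
frame = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
print(filter_spn(frame))
```

Because the median depends only on the ordering of pixel values, the same filter behaves consistently whether the sensor response is linear, logarithmic, or a mix of the two.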