Predicting the perceived brightness and lightness of image elements using color appearance models is important for the design and evaluation of HDR displays. This paper presents a series of experiments examining perceived brightness and lightness for displayed stimuli of different sizes. The first pilot experiment had 7 observers, the second and third pilot experiments had 6, and the main experiment had 14. In the main experiment, the target and test stimuli subtended 10° and 1° fields of view, respectively. The results indicate a small but consistent effect: brightness increases with stimulus size. The effect depends on the lightness level of the stimulus but not on its hue or saturation. A preliminary model is also introduced that extends color appearance models such as CIECAM16 to predict brightness and lightness as a function of stimulus size. The proposed model predicts perceived brightness and lightness well.
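CIECAM16 already separates lightness J from brightness Q, but stimulus size is not one of its inputs. The sketch below shows that baseline using CAM16's standard equations for F_L, the achromatic response A, J, and Q, under stated assumptions: a neutral stimulus with the same chromaticity as the adopted white, complete adaptation (D = 1), and an average surround (c = 0.69). The function name `cam16_neutral_JQ` and its defaults are our own; this is not the preliminary size-dependent model proposed in the paper.

```python
import math


def cam16_neutral_JQ(Y, L_A, Y_b=20.0, Y_w=100.0, c=0.69):
    """Return CAM16 lightness J and brightness Q for a neutral stimulus.

    Assumptions (illustration only): the stimulus has the chromaticity of
    the adopted white, adaptation is complete (D = 1), and c = 0.69
    (average surround). Stimulus size is NOT an input, which is the gap a
    size-dependent extension would fill.
    """
    # Luminance-level adaptation factor F_L from adapting luminance L_A (cd/m^2)
    k = 1.0 / (5.0 * L_A + 1.0)
    F_L = (0.2 * k**4 * (5.0 * L_A)
           + 0.1 * (1.0 - k**4) ** 2 * (5.0 * L_A) ** (1.0 / 3.0))
    # Background induction terms
    n = Y_b / Y_w
    z = 1.48 + math.sqrt(n)
    N_bb = 0.725 * (1.0 / n) ** 0.2

    def achromatic_response(Y_rel):
        # With D = 1 and a neutral stimulus, all three adapted channel
        # responses equal Y_rel, so one compressed value serves for R, G and B.
        x = (F_L * Y_rel / 100.0) ** 0.42
        R_a = 400.0 * x / (x + 27.13) + 0.1
        A = (2.0 * R_a + R_a + R_a / 20.0 - 0.305) * N_bb
        # Clamp tiny negative values caused by floating-point rounding at Y = 0
        return max(A, 0.0)

    A_w = achromatic_response(Y_w)
    A = achromatic_response(Y)
    J = 100.0 * (A / A_w) ** (c * z)
    Q = (4.0 / c) * math.sqrt(J / 100.0) * (A_w + 4.0) * F_L**0.25
    return J, Q
```

For a fixed relative luminance, Q grows with the adapting luminance (compare `cam16_neutral_JQ(20.0, 31.83)` with `cam16_neutral_JQ(20.0, 318.31)`), yet nothing in the computation responds to how large the stimulus is.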

Rudd and Zemach analyzed brightness/lightness matches performed with disk/annulus stimuli under four contrast polarity conditions, in which the disk was either a luminance increment or decrement with respect to the annulus, and the annulus was either an increment or decrement with respect to the background. In all four cases, the disk brightness, measured by the luminance of a matching disk, exhibited a parabolic dependence on the annulus luminance when plotted on a log-log scale. Rudd further showed that the shape of this parabolic relationship can be influenced by instructions to match the disk’s brightness (perceived luminance), brightness contrast (perceived disk/annulus luminance ratio), or lightness (perceived reflectance) under different assumptions about the illumination. Here, I compare the results of those experiments with the results of other recent experiments in which the match disk used to measure the appearance of the target disk was not surrounded by an annulus. I model the entire body of data with a neural model involving edge integration and contrast gain control, in which top-down influences that control the weights given to edges in the edge integration process act either before or after the contrast gain control stage, depending on the stimulus configuration and the observer’s assumptions about the nature of the illumination.
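Edge integration can be sketched as a weighted sum of log luminance ratios across the edges that separate the target from the background. In the sketch below, which is our illustration and not the author's model, the disk/annulus and annulus/background edges carry hypothetical weights `w_inner` and `w_outer`, the match disk's single edge is assumed to carry unit weight, and a hypothetical `gain` term lets the inner-edge contrast reduce the outer-edge weight as a crude stand-in for contrast gain control. With `gain = 0` the predicted match is linear in log annulus luminance; with `gain > 0` it becomes quadratic, i.e. parabolic on log-log axes.

```python
import math


def predicted_match_log10(L_disk, L_annulus, L_background,
                          w_inner=1.0, w_outer=0.5, gain=0.0):
    """Predicted log10 luminance of a match disk shown on the plain background.

    Hypothetical edge-integration sketch: w_inner, w_outer and gain are
    illustrative parameters, not fitted values from the paper.
    """
    c_inner = math.log10(L_disk / L_annulus)        # disk/annulus edge contrast
    c_outer = math.log10(L_annulus / L_background)  # annulus/background edge contrast
    # Hypothetical gain control: a stronger inner edge lowers the outer-edge weight.
    w_outer_eff = w_outer * (1.0 - gain * abs(c_inner))
    # The match disk has one edge (to the background) with unit weight, so its
    # log contrast is set equal to the weighted sum of the target's edge contrasts.
    return math.log10(L_background) + w_inner * c_inner + w_outer_eff * c_outer
```

With `w_inner = w_outer = 1` and `gain = 0` the edges sum to the disk's own log luminance, i.e. a pure luminance match; sweeping `L_annulus` for an increment disk (for example `L_disk = 1000`, `L_background = 10`) with `gain > 0` traces a curve rather than a line.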

A psychophysical experiment was conducted in which observers compared the saturation and brightness of high-dynamic-range images that had been modulated in chroma and achromatic lightness. Models of brightness that account for the Helmholtz-Kohlrausch effect include both chromatic and achromatic inputs in their brightness metrics, and this experiment explored whether such metrics can be extended to images. The observers consistently judged saturation in agreement with the predictions of our color appearance model. However, some unexpected results, together with differences between observers in how they judged brightness, indicate that further modeling, including spatial effects of color perception, is needed before our model of the Helmholtz-Kohlrausch effect can be applied to images.
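The abstract does not give the form of the authors' Helmholtz-Kohlrausch model. As an illustration of a metric with both chromatic and achromatic inputs, the sketch below swaps in the Fairchild and Pirrotta (1991) CIELAB correction, L** = L* + (2.5 - 0.025 L*)(0.116 |sin((h_ab - 90°)/2)| + 0.085) C*_ab, applied pixel by pixel to an image stored as rows of (L*, a*, b*) triples. The coefficients are as we recall them and should be checked against the original publication; the function names are hypothetical. Because each pixel is treated independently, the sketch ignores exactly the spatial effects the abstract identifies as missing.

```python
import math


def hk_lightness(L_star, a_star, b_star):
    """Fairchild-Pirrotta chromatic lightness L** for one CIELAB color."""
    C = math.hypot(a_star, b_star)                        # chroma C*_ab
    h = math.degrees(math.atan2(b_star, a_star)) % 360.0  # hue angle h_ab in degrees
    f_hue = 0.116 * abs(math.sin(math.radians((h - 90.0) / 2.0))) + 0.085
    f_lightness = 2.5 - 0.025 * L_star
    return L_star + f_lightness * f_hue * C


def hk_lightness_image(pixels):
    """Apply the per-pixel correction to an image given as rows of (L*, a*, b*).

    No spatial interaction between pixels is modeled.
    """
    return [[hk_lightness(L, a, b) for (L, a, b) in row] for row in pixels]
```

A neutral pixel is left unchanged, while a chromatic pixel at the same L* receives a higher predicted lightness.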

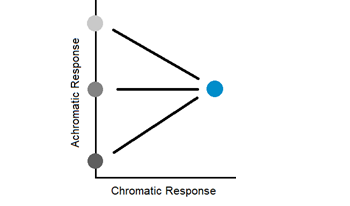

Luminance underestimates the brightness of chromatic visual stimuli. This phenomenon, known as the Helmholtz-Kohlrausch effect, is due to the different experimental methods from which these two measures are derived: heterochromatic flicker photometry (luminance) and direct brightness matching (brightness). This paper probes the relationship between luminance and brightness through a psychophysical experiment that uses slowly oscillating visual stimuli, and compares its results with those of flicker photometry and direct brightness matching. The results show that the dimension of our internal color space corresponding to our achromatic response to stimuli is not a scale of brightness or lightness.
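Heterochromatic flicker photometry defines luminance by the intensity setting at which two rapidly alternating lights appear not to flicker. The sketch below models that adjustment under two assumptions of ours: stimuli are linear RGB values on a display with BT.709 primaries, so luminance is 0.2126 R + 0.7152 G + 0.0722 B, and the residual flicker is proportional to the luminance difference between the alternating lights. A bisection search stands in for the observer's adjustment and finds the scale factor k on the test light that nulls the flicker (analytically k = Y_ref / Y_test). The function names are hypothetical.

```python
BT709_WEIGHTS = (0.2126, 0.7152, 0.0722)  # luminance weights for linear BT.709 RGB


def luminance(rgb):
    """Relative luminance of a linear BT.709 RGB triple."""
    return sum(w * c for w, c in zip(BT709_WEIGHTS, rgb))


def hfp_null_scale(reference_rgb, test_rgb, lo=0.0, hi=10.0, tol=1e-9):
    """Scale k on test_rgb at which modeled flicker against reference_rgb vanishes.

    Assumption: the flicker signal is the luminance difference between the
    alternating lights, so purely chromatic differences produce no flicker.
    """
    y_ref = luminance(reference_rgb)
    y_test = luminance(test_rgb)

    def flicker_signal(k):
        return k * y_test - y_ref

    if not (flicker_signal(lo) <= 0.0 <= flicker_signal(hi)):
        raise ValueError("search interval does not bracket the flicker null")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if flicker_signal(mid) > 0.0:
            hi = mid  # test light too bright: search lower scales
        else:
            lo = mid  # test light too dim: search higher scales
    return 0.5 * (lo + hi)
```

For a saturated red (1, 0, 0) alternated with a gray of (0.2126, 0.2126, 0.2126), the null falls at k ≈ 1: the two lights have equal luminance, although in a direct brightness match the Helmholtz-Kohlrausch effect leads observers to judge the red as brighter.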

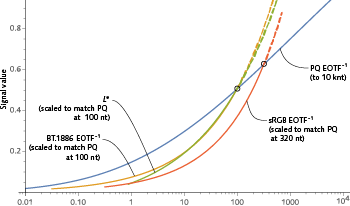

High dynamic range (HDR) technology enables a much wider range of luminances, both relative and absolute, than standard dynamic range (SDR). HDR extends black to lower levels, and white to higher levels, than SDR. HDR enables higher absolute luminance at the display to be used to portray specular highlights and direct light sources, a capability that was not available in SDR. In addition, HDR programming is mastered with a wider color gamut, usually DCI P3, than the BT.1886 (“BT.709”) gamut of SDR. The capabilities of HDR strain the usual SDR methods of specifying color range, and new methods are needed. A proposal has been made to use CIELAB to quantify HDR gamut. We argue that CIE L* is appropriate only for applications with a contrast range not exceeding 100:1, so CIELAB is not appropriate for HDR. In practice, L* cannot accurately represent lightness that significantly exceeds diffuse white; that is, L* cannot reasonably represent specular reflections and direct light sources. In brief, L* is inappropriate for HDR. We suggest using metrics based on ST 2084/BT.2100 PQ and its associated color encoding, IC<sub>T</sub>C<sub>P</sub>.
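The difference between L* and PQ can be made concrete. The minimal sketch below (not a reference implementation; function names are our own) implements the CIE L* formula, the ST 2084 PQ inverse EOTF mapping absolute luminance in cd/m² to a 0-1 signal, the BT.2100 IC<sub>T</sub>C<sub>P</sub> conversion from linear BT.2020 RGB expressed in cd/m², and, as one example of a PQ/IC<sub>T</sub>C<sub>P</sub>-based metric, the ΔE<sub>ITP</sub> color difference of ITU-R BT.2124.

```python
import math

# ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def cie_lstar(Y, Y_n=100.0):
    """CIE 1976 L* of luminance Y relative to white Y_n."""
    t = Y / Y_n
    d = 6.0 / 29.0
    f = t ** (1.0 / 3.0) if t > d**3 else t / (3.0 * d * d) + 4.0 / 29.0
    return 116.0 * f - 16.0


def pq_inverse_eotf(L):
    """PQ signal in [0, 1] for absolute luminance L in cd/m^2 (clamped to 0..10000)."""
    y = min(max(L, 0.0), 10000.0) / 10000.0
    ym = y**M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def ictcp(rgb2020):
    """ICtCp from linear BT.2020 RGB given in cd/m^2 (BT.2100 PQ variant)."""
    r, g, b = rgb2020
    l_cone = (1688 * r + 2146 * g + 262 * b) / 4096
    m_cone = (683 * r + 2951 * g + 462 * b) / 4096
    s_cone = (99 * r + 309 * g + 3688 * b) / 4096
    lp, mp, sp = (pq_inverse_eotf(v) for v in (l_cone, m_cone, s_cone))
    i = 0.5 * lp + 0.5 * mp
    ct = (6610 * lp - 13613 * mp + 7003 * sp) / 4096
    cp = (17933 * lp - 17390 * mp - 543 * sp) / 4096
    return i, ct, cp


def delta_e_itp(rgb_a, rgb_b):
    """BT.2124 color difference: T = 0.5 * Ct, P = Cp, scaled by 720."""
    i1, t1, p1 = ictcp(rgb_a)
    i2, t2, p2 = ictcp(rgb_b)
    return 720.0 * math.sqrt((i1 - i2) ** 2 + (0.5 * (t1 - t2)) ** 2 + (p1 - p2) ** 2)
```

With Y_n = 100, a 100:1 range spans L* from about 9 to 100, but `cie_lstar(1000.0)` for a highlight ten times diffuse white gives roughly 234, outside the scale's intended range, while `pq_inverse_eotf(1000.0)` remains inside the 0-1 PQ signal range.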